Measuring Email Performance

Mike Afergan & Robert Beverly

Introduction

Although the use and importance of Internet email have increased significantly over the past twenty years, email is still delivered via the largely unchanged Simple Mail Transfer Protocol (SMTP) [1]. While the general perception is that email "just works," surprisingly little data is available to substantiate this claim. This gap is particularly troubling as the system comes under increasing strain.

This research uses active measurement to provide a greater understanding of the behavior of Internet email as a system. Our results are among the first to examine the performance of email, the preeminent example of a large store-and-forward network. Store-and-forward communication is an architectural mainstay in designing networks where connectivity and end-to-end paths are transient. By better understanding Internet protocols that lack explicit end-to-end connection semantics, we eventually hope to derive guidelines for designing future networks and more reliable email.

Approach

We seek a set of tools applicable across a wide variety of systems and implementations. There are many popular Mail Transfer Agents (MTAs), each configurable to suit different installations. In addition to software and configuration heterogeneity, individual SMTP servers process vastly different mail loads and have differing network connectivity. To capture this diversity, our goal is to measure email paths, errors, latency and loss to a significant, diverse, and representative set of Internet SMTP servers. However, we do not have email accounts on, or administrative access to, these servers. Thus, we devised an email "traceroute" methodology that relies on bounce-backs.

The methodology is based on eliciting and recording bounce-backs. Traditional email etiquette calls for servers to inform users of errors, for instance with unknown-recipient or undeliverable-mail notifications. These descriptive errors, known as bounce-backs, are returned to the originator of the message. Unfortunately, concerns such as server load and dictionary attacks lead some administrators to configure their servers to silently discard badly addressed email. Nevertheless, we find that approximately 25% of the domains tested in this survey reply with bounce-backs. This gives us a population from which we can draw a sufficiently representative cross-section of servers and organizations.

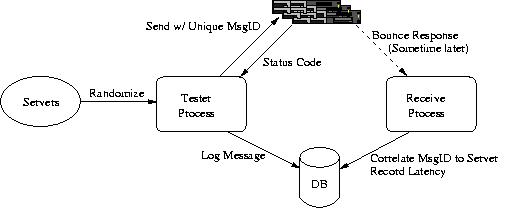

The testing system is depicted above. Each email is sent to a randomly selected (and, with very high probability, invalid) unique recipient. If and when a bounce returns, this unique address lets us match it to the message that triggered it and later compute the appropriate statistics. All data is stored in a database to simplify analysis.
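The bookkeeping described above can be sketched with Python's built-in `sqlite3` module. This is a minimal sketch under our own assumptions, not the study's actual schema: the table and column names are hypothetical, and the code assumes the unique recipient string has already been extracted from each returned bounce (bounce parsing is omitted). Probes with no matched bounce count as lost, so in practice loss would only be computed after a generous cutoff.

```python
import sqlite3
import time


def open_db(path=":memory:"):
    """Create a hypothetical probes table keyed by the unique random recipient."""
    db = sqlite3.connect(path)
    db.execute(
        "CREATE TABLE IF NOT EXISTS probes ("
        " recipient TEXT PRIMARY KEY,"  # unique random local part, one per probe
        " server_ip TEXT NOT NULL,"     # the single MX address this probe targeted
        " sent_at REAL NOT NULL,"       # Unix time the message was handed off
        " bounced_at REAL)"             # NULL until a matching bounce arrives
    )
    return db


def record_send(db, recipient, server_ip, sent_at=None):
    """Log an outgoing probe; the connection context manager commits the insert."""
    with db:
        db.execute(
            "INSERT INTO probes (recipient, server_ip, sent_at) VALUES (?, ?, ?)",
            (recipient, server_ip, time.time() if sent_at is None else sent_at),
        )


def record_bounce(db, recipient, received_at=None):
    """Match a bounce to its probe and return the latency in seconds.

    Returns None for an unknown recipient or a duplicate bounce.
    """
    row = db.execute(
        "SELECT sent_at FROM probes WHERE recipient = ? AND bounced_at IS NULL",
        (recipient,),
    ).fetchone()
    if row is None:
        return None
    received_at = time.time() if received_at is None else received_at
    with db:
        db.execute(
            "UPDATE probes SET bounced_at = ? WHERE recipient = ?",
            (received_at, recipient),
        )
    return received_at - row[0]


def loss_rate(db, server_ip):
    """Fraction of probes to one server with no bounce yet (None if never probed)."""
    sent, returned = db.execute(
        # COUNT(bounced_at) counts only the non-NULL, i.e. returned, probes.
        "SELECT COUNT(*), COUNT(bounced_at) FROM probes WHERE server_ip = ?",
        (server_ip,),
    ).fetchone()
    return None if sent == 0 else 1 - returned / sent
```

Keying on the random recipient also makes duplicate bounces harmless: a second bounce for the same probe finds no outstanding row and is ignored.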

Our analysis to date has focused on a dataset captured in September 2004. For the entire month, we ran traces every 15 minutes to 1,468 servers in 571 domains. The first set of domains includes the members of the Fortune 500, whose servers are likely indicative of robust, fault-tolerant SMTP systems. The second set of domains is chosen at random: we select random legitimate IP addresses from a routing table, perform a reverse DNS lookup on each, and truncate each resulting hostname to the organization's root name. The third set, "Top Bits," consists of domains that source a large amount of web traffic, as observed in the logs of a web cache at an ISP.
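A sketch of the random-domain step follows. It assumes the routing table has already been reduced to a list of prefix strings (the `prefixes` argument is hypothetical) and uses the standard library's `socket.gethostbyaddr` for the reverse lookup. Truncating to a "root name" by keeping the last two DNS labels is our simplifying assumption; it would mishandle names such as `example.co.uk`, where a public-suffix list is needed.

```python
import ipaddress
import random
import socket


def random_address(prefixes, rng=random):
    """Pick a random address inside a randomly chosen routed prefix."""
    net = ipaddress.ip_network(rng.choice(prefixes))
    # Skip the network address itself unless the prefix is a single host.
    offset = rng.randrange(1, net.num_addresses) if net.num_addresses > 1 else 0
    return str(net.network_address + offset)


def root_name(hostname):
    """Naive truncation to the last two labels (assumption: no public-suffix list)."""
    labels = hostname.rstrip(".").lower().split(".")
    return ".".join(labels[-2:])


def random_domain(prefixes, rng=random):
    """Reverse-resolve one random routed address; None if it has no PTR record."""
    addr = random_address(prefixes, rng)
    try:
        hostname, _aliases, _addrs = socket.gethostbyaddr(addr)
    except OSError:  # socket.herror and socket.gaierror are OSError subclasses
        return None  # the caller simply draws another address
    return root_name(hostname)
```

Drawing addresses from routed prefixes, rather than from a list of known domains, is what keeps this set independent of any notion of popularity.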

Testing these domains rigorously requires removing several sources of non-determinism, which arise from the message routing, load-balancing, and redundancy mechanisms built into DNS. We introduce a pre-processing step to remove this non-determinism. Each domain is resolved into its complete set of MX records, including every server regardless of its preference value, and each MX record is further resolved into its corresponding set of IP addresses. Thus, the atomic unit of testing is the IP address of a server supporting a domain rather than the domain itself.
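The pre-processing step amounts to flattening DNS records, sketched below. Python's standard library cannot query MX records, so the MX lookup is passed in as a hypothetical `lookup_mx` callable returning `(preference, host)` pairs (a DNS library could supply it); the `a_records` helper uses `socket.getaddrinfo` for IPv4 addresses. All names here are illustrative rather than taken from the study's code.

```python
import socket
from typing import Callable, Iterable


def a_records(host: str) -> list[str]:
    """IPv4 addresses for an MX host, via the system resolver."""
    infos = socket.getaddrinfo(host, 25, socket.AF_INET, socket.SOCK_STREAM)
    return sorted({info[4][0] for info in infos})  # sockaddr is (address, port)


def expand_domain(
    domain: str,
    lookup_mx: Callable[[str], Iterable[tuple[int, str]]],
    lookup_addrs: Callable[[str], Iterable[str]] = a_records,
) -> list[tuple[str, str, str]]:
    """Flatten a domain into every (domain, mx_host, ip) it can deliver through.

    Preference values are deliberately ignored: primary and backup MXes are
    all tested, so each server address becomes its own unit of measurement.
    """
    targets = set()
    for _preference, mx_host in lookup_mx(domain):
        for ip in lookup_addrs(mx_host):
            targets.add((domain, mx_host.rstrip(".").lower(), ip))
    return sorted(targets)
```

Keeping the MX hostname next to each address preserves which records were nominally primary, which matters when later classifying errors.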

A round of testing consists of sending a message to every server found in the pre-processing step. For each server and round, our system chooses a unique random 10-character alphanumeric string as the recipient of the message. It then connects to that IP address and sends the message. The message body is always the same and is designed to be innocuous so that it passes inbound filtering; it explains the study and provides an opt-out link. For example, consider a domain example.com that has primary and secondary mail servers with IP addresses 1.2.3.4 and 5.6.7.8, respectively. The tester connects to 1.2.3.4 and sends its message addressed to randstring1@example.com. It then connects to 5.6.7.8 and sends the message addressed to randstring2@example.com. Upon receiving bounce-backs, the tester can easily determine which bounce corresponds to which originating message from the unique random string. We performed extensive testing to ensure that the testing system could handle a receive load an order of magnitude higher than expected without introducing bias.
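One probe of a round could look roughly like the following, using the standard library's `smtplib` and `email.message`. It is a sketch under stated assumptions: the subject line, sender address, and outcome labels are hypothetical, and the body would be the study's fixed explanatory text with its opt-out link. Connecting to the MX's IP address directly, rather than letting a local MTA route the message, is what pins each probe to a single server.

```python
import secrets
import smtplib
import string
from email.message import EmailMessage

ALPHABET = string.ascii_lowercase + string.digits


def random_local_part(length=10):
    """Random alphanumeric recipient; almost certainly not a real mailbox."""
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


def build_probe(domain, sender, body):
    """Return (recipient, message) for one probe to the given domain."""
    rcpt = f"{random_local_part()}@{domain}"
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = rcpt
    msg["Subject"] = "Email performance measurement"  # hypothetical subject
    msg.set_content(body)
    return rcpt, msg


def send_probe(ip, msg, timeout=60):
    """Deliver directly to one MX address and return a coarse outcome label."""
    try:
        with smtplib.SMTP(ip, 25, timeout=timeout) as smtp:
            smtp.send_message(msg)  # envelope taken from the From/To headers
        return "accepted"
    except smtplib.SMTPRecipientsRefused:
        return "rejected-at-rcpt"  # refused in-dialogue; no bounce will follow
    except (smtplib.SMTPException, OSError):
        return "error"  # unreachable, timed out, or failed mid-transaction
```

A recipient refused during the SMTP dialogue fails immediately instead of generating a later bounce, so the sketch reports it as a separate outcome rather than as a delivered probe.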

Using bounces is a novel way to test a wide variety of domains and gain insight into the behavior of Internet email as a system using active measurement. However, the approach has a potential limitation of which we are cognizant: whether the behavior seen through bounces, which are by definition errant mail, is representative of real email. For example, a server could handle bounces differently from normal email at certain times; depending on load, bounces might be placed in a separate queue or simply dropped.

Although the validity of the bounce methodology is a legitimate concern, we find no direct evidence that it is invalid. We are unaware of any system or software that handles bounces differently, and we find no direct evidence in our data to support the theory that bounces are handled differently. For example, we examine whether the pattern of loss correlates with peak traffic hours, which might indicate that bounces receive lower priority when systems are under load. We see no such correlation. We also examine SMTP greeting banners (the server's initial 220 reply and its response to HELO) to determine whether a single mail exchanger IP address actually represents multiple virtual machines with different configurations. Although we do find different banners for the same IP address, the presence of such an architecture neither correlates with nor appears to explain the losses. Thus, while our system is not perfect, we hope it represents a step forward in understanding a system as large and complex as email.
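The peak-hours check can be expressed as a loss rate grouped by hour of day. In this minimal sketch each probe is a hypothetical `(sent_at, bounced)` pair with a Unix timestamp; hours are computed in UTC by default, which is an assumption, since peak load depends on each server's local time. A loss rate that rose sharply during busy hours would hint that bounces are deprioritized under load.

```python
from collections import defaultdict
from datetime import datetime, timezone


def loss_by_hour(probes, tz=timezone.utc):
    """Map hour of day (0-23) to the fraction of probes sent then that never bounced.

    probes: iterable of (sent_at_unix_seconds, bounced) pairs.
    Only hours with at least one probe appear in the result.
    """
    sent = defaultdict(int)
    lost = defaultdict(int)
    for sent_at, bounced in probes:
        hour = datetime.fromtimestamp(sent_at, tz).hour
        sent[hour] += 1
        if not bounced:
            lost[hour] += 1
    return {hour: lost[hour] / sent[hour] for hour in sorted(sent)}
```

With rounds every 15 minutes over a month, each server contributes roughly 120 probes to every hour bucket, enough for a coarse comparison across the day.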

Results

For our September 2004 dataset, we analyzed three metrics: loss, latency, and errors. The raw anonymized data collected in this study is publicly available. The results, which are presented in more detail in our paper [2], are both interesting and surprising. In particular, we see:

- Significant Latency: We find many servers with periods of high latency, often correlated within a domain, and several emails with latencies measured in days.

- Significant and Surprising Errors: These include a large number of "primary" MXes that are unreachable or unresponsive. Such errors do not necessarily mean that users experience failures, but they are indicative of the operational state of the system.

- Non-trivial Loss Rates: For example, we find many servers that experience periods of bursty loss. That is, throughout the month they return bounces for every test message except during a few periods in which the fraction returned is significantly less than 100% yet significantly more than 0%. Further, these unusual behaviors appear to be correlated across servers. We do not yet fully understand this behavior and are investigating several possible explanations.
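One way to operationalize "bursty loss" is to cut a server's per-round results into fixed, non-overlapping windows and flag the windows whose return fraction is neither close to 100% nor close to 0%. The window length and the `low`/`high` thresholds below are illustrative assumptions rather than values used in the study; with 15-minute rounds, a window of 8 spans two hours.

```python
def partial_loss_windows(returned, window=8, low=0.1, high=0.9):
    """Flag windows of rounds in which a server returned only some of its bounces.

    returned: list of booleans, one per test round, True if the bounce came back.
    Returns (start_index, fraction) for each full window whose return fraction
    lies strictly between `low` (outage) and `high` (normal operation).
    """
    flagged = []
    for start in range(0, len(returned) - window + 1, window):
        chunk = returned[start:start + window]
        fraction = sum(chunk) / window  # True counts as 1, False as 0
        if low < fraction < high:
            flagged.append((start, fraction))
    return flagged
```

Running this per server and then comparing the flagged windows across servers is one way to look for the cross-server correlation described above.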

References

[1] J. Klensin. Simple Mail Transfer Protocol. Internet Engineering Task Force, RFC 2821, April 2001.

[2] M. Afergan and R. Beverly. The State of the Email Address. ACM SIGCOMM Computer Communication Review, Measuring the Internet's Vital Statistics (CCR-IVS), Volume 35, Number 1, January 2005.

The Stata Center, Building 32 - 32 Vassar Street - Cambridge, MA 02139 - USA - tel. +1-617-253-0073 - publications@csail.mit.edu (Note: On July 1, 2003, the AI Lab and LCS merged to form CSAIL.)