Discovering Objects and Their Localization In Images

J. Sivic, B. Russell, A. Efros, A. Zisserman & W. Freeman

The Problem

We seek to discover the object categories depicted in a set of unlabelled images. With this, we demonstrate classification of new images and of images containing multiple objects. Furthermore, we provide an approximate segmentation of each object category from the others within an image.

Motivation

Common approaches to object recognition involve some form of supervision. This may range from specifying the object's location and segmentation, as in face detection [2,3], to providing only auxiliary data indicating the object's identity [4,5,6,7]. For a large dataset, any annotation is expensive, or may introduce unforeseen biases. Results in speech recognition and machine translation highlight the importance of huge amounts of training data. The quantity of good, unsupervised training data -- the set of still images -- is orders of magnitude larger than the visual data available with annotation. Thus, one would like to observe many images and infer models for the classes of visual objects contained within them without supervision. This motivates the scientific question which, to our knowledge, has not been convincingly answered before: Is it possible to learn visual object classes simply from looking at images?

Approach:

We discover object categories by using models developed in the statistical text literature: probabilistic Latent Semantic Analysis (pLSA) [8,9] and Latent Dirichlet Allocation (LDA) [10]. In text analysis this is used to discover topics in a corpus using the bag-of-words document representation. Here we treat object categories as topics, so that an image containing instances of several categories is modeled as a mixture of topics.

The model is applied to images by using a visual analogue of a word, formed by vector quantizing SIFT-like region descriptors [11]. The topic discovery approach successfully translates to the visual domain: for a small set of objects, we show that both the object categories and their approximate spatial layout are found without supervision.

Results:

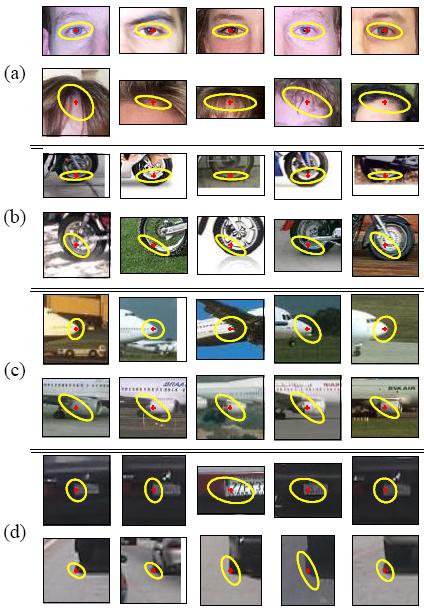

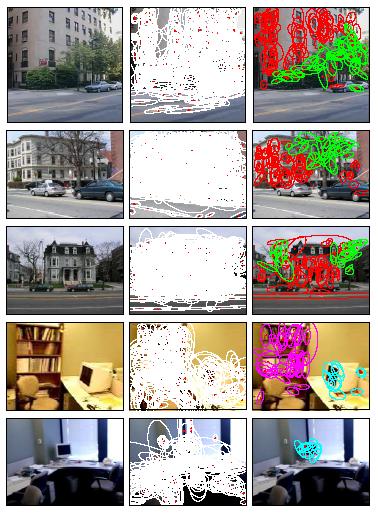

We used the Caltech 101 [12] and MIT scenes and objects [13] datasets to test our approach. Figure 1 shows the most likely visual words that were discovered in an experiment involving four object categories from the Caltech 101 dataset. Figure 2 shows approximate segmentations for four (out of ten) discovered object categories on the MIT scenes and objects dataset.

For more details, please refer to [1].

Figure 1: Two most likely words (shown by 5 examples in a row) for four learnt topics: (a) Faces, (b) Motorbikes, (c) Airplanes, (d) Cars.

Figure 2: Example segmentations on the MIT dataset: (left column) the original images; (middle column) all detected regions superimposed; (right column) the topic induced segmentation. The following is a rough description of the colored regions: red - buildings; green - trees; cyan - computer monitors; magenta - bookcases.

Research Support:

This work was sponsored in part by the EU Project CogViSys, the University of Oxford, Shell Oil (grant #6896597), and the National Geospatial-Intelligence Agency (grant #6896949).

References

[1] J. Sivic, B. C. Russell, A. A. Efros, A. Zisserman, W. T. Freeman. Discovering object categories in image collections. MIT AI Lab Memo AIM-2005-005, February, 2005.

[2] H. Schneiderman and T. Kanade. A statistical method for 3D object detection applied to faces and cars. In Proc. ICCV, 2003.

[3] P. Viola and M. Jones. Rapid object detection using a boosted cascade of simple features. In Proc. CVPR, 2001.

[4] K. Barnard, P. Duygulu, N. de Freitas, D. Forsyth, D. Blei, and M. Jordan. Matching words and pictures. Journal of Machine Learning Research, 3:1107-1135, 2003.

[5] P. Duygulu, K. Barnard, J.F.G. de Freitas, and D. Forsyth. Object recognition as machine translation: Learning a lexicon for a fixed image vocabulary. In Proc. ECCV, 2002.

[6] R. Fergus, P. Perona, and A. Zisserman. Object class recognition by unsupervised scale-invariant learning. In Proc. CVPR, 2003.

[7] M. Weber, M. Welling, and P. Perona. Unsupervised learning of models for recognition. In Proc. ECCV, pages 18-32, 2000.

[8] T. Hofmann. Probabilistic latent semantic indexing. In SIGIR, 1999.

[9] T. Hofmann. Unsupervised learning by probabilistic latent semantic analysis. Machine Learning, 43:177-196, 2001.

[10] D. Blei, A. Ng, and M. Jordan. Latent Dirichlet allocation. Journal of Machine Learning Research, 3:993-1022, 2003.

[11] D. Lowe. Object recognition from local scale-invariant features. In Proc. ICCV, pages 1150-1157, 1999.

[12] L. Fei-Fei, R. Fergus, and P. Perona. A Bayesian approach to unsupervised one-shot learning of object categories. In Proc. ICCV, 2003.

[13] A. Torralba, K.P. Murphy, and W.T. Freeman. Contextual models for object detection using boosted random fields. In NIPS '04, 2004.

The Stata Center, Building 32 - 32 Vassar Street - Cambridge, MA 02139 - USA tel:+1-617-253-0073 - publications@csail.mit.edu (Note: On July 1, 2003, the AI Lab and LCS merged to form CSAIL.) |