Matching Interest Points Using Affine Invariant Concentric Circles

Han-Pang Chiu & Tomas Lozano-Perez

What

We present a new method for reliable matching between images. The method finds complete region correspondences between concentric circles centered on an interest point in one image and the corresponding projected ellipses centered on the matching interest point in the other image. It matches interest points by exploiting all the available luminance information in regions related by an affine transformation.

Why

A prominent approach to image matching consists of identifying "interest points" in the images, computing photometric descriptors of the regions surrounding these points, and then matching these descriptors across images. However, systems that match interest points across images using local descriptors typically employ a set of additional filters to "verify" the putative matches. We want to explore an approach to matching interest points that finds complete region correspondences under an affine transformation and that exploits all the available luminance information in the regions. The method is a computational compromise between local descriptor comparison and full region registration methods that search over transformation parameters.

How

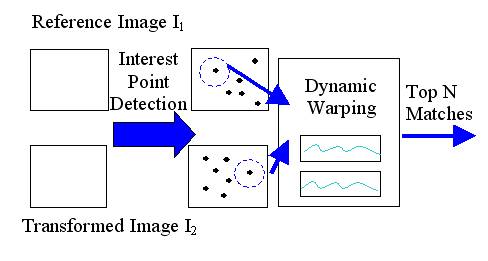

Our approach aims to find an explicit correspondence for all the pixels in the regions around a pair of interest points (see Figure 1). We match a sequence of concentric circles in one image to a sequence of embedded ellipses in the other image that satisfy the conditions required for an affine transformation. The radius of the matched circles is increased until a sudden change in the intensity difference is detected, which usually signals occlusion.

Figure 1: The matching process.
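The stopping rule for the growing radius can be illustrated with a minimal sketch. The text does not specify how a "sudden change" is detected, so the rule below (compare each new ring's average intensity difference against the running mean of the rings accepted so far) is an assumption, as are the function name `stop_radius` and the parameters `jump_ratio`, `min_rings`, and `floor`.

```python
def stop_radius(ring_costs, jump_ratio=2.0, min_rings=3, floor=1.0):
    """Return how many rings to keep before the first sudden cost jump.

    ring_costs[i] is the average intensity difference of ring i (innermost
    first). All parameters are hypothetical: a ring counts as a jump when
    its cost exceeds `jump_ratio` times the mean cost of the rings before
    it. `floor` keeps the baseline from collapsing to zero on perfectly
    matched inner rings.
    """
    for i in range(min_rings, len(ring_costs)):
        baseline = sum(ring_costs[:i]) / i
        if ring_costs[i] > jump_ratio * max(baseline, floor):
            return i  # rings 0 .. i-1 are kept
    return len(ring_costs)  # no jump found: keep every ring


if __name__ == "__main__":
    # The cost is steady for five rings, then jumps (e.g. an occluder).
    print(stop_radius([4, 5, 4, 5, 4, 20, 22]))
```

A ratio test against a running mean is only one plausible choice; a derivative threshold or a robust statistic would fit the same description.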

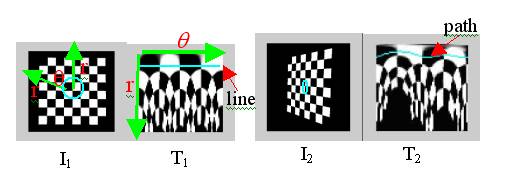

First, we convert the image regions centered on each candidate pair of interest points from Cartesian coordinates (X, Y) to polar coordinates (r, θ). Matching concentric circles in the reference image to the corresponding projected ellipses in the transformed image then becomes a matter of computing the color difference cost between a line in the polar-sampled reference image and a path in the polar-sampled transformed image, where the path is generated by the affine transformation equation, as shown in Figure 2.

Figure 2
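The polar conversion and the path generated by the affine transformation can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: the function names `to_polar` and `affine_path`, the nearest-neighbour sampling, and the one-pixel radius step are all choices made here for brevity. The sketch relies only on the fact that a linear map `A` sends the circle point r(cos θ, sin θ) to `A` times that point, whose own polar coordinates trace the ellipse.

```python
import numpy as np


def to_polar(image, center, n_radii, n_angles):
    """Resample a grayscale image around `center` = (x, y) onto an (r, theta) grid.

    Row i holds the circle of radius i + 1 pixels; column j holds the angle
    2*pi*j / n_angles. Nearest-neighbour sampling, clamped at the border
    (both are simplifying assumptions).
    """
    cx, cy = center
    radii = np.arange(1, n_radii + 1, dtype=float)
    thetas = 2.0 * np.pi * np.arange(n_angles) / n_angles
    rr, tt = np.meshgrid(radii, thetas, indexing="ij")
    xs = np.clip(np.rint(cx + rr * np.cos(tt)).astype(int), 0, image.shape[1] - 1)
    ys = np.clip(np.rint(cy + rr * np.sin(tt)).astype(int), 0, image.shape[0] - 1)
    return image[ys, xs]


def affine_path(A, r, thetas):
    """Polar coordinates of the ellipse that the 2x2 linear map A makes of a circle.

    Returns (r_out, theta_out), one entry per input angle, with theta_out
    wrapped into [0, 2*pi).
    """
    circle = r * np.stack([np.cos(thetas), np.sin(thetas)])  # shape (2, n)
    ellipse = A @ circle
    r_out = np.hypot(ellipse[0], ellipse[1])
    theta_out = np.mod(np.arctan2(ellipse[1], ellipse[0]), 2.0 * np.pi)
    return r_out, theta_out


if __name__ == "__main__":
    # An image whose value is the distance to (20, 20): each polar row is ~constant.
    img = np.fromfunction(lambda y, x: np.hypot(x - 20, y - 20), (41, 41))
    print(to_polar(img, (20, 20), n_radii=10, n_angles=16).shape)
    # Stretching x by 2 turns the radius-5 circle into an ellipse.
    print(affine_path(np.diag([2.0, 1.0]), 5.0, np.array([0.0, np.pi / 2])))
```

Because the output radius scales linearly with r for a fixed θ, the ellipses produced from concentric circles are scaled copies of one another, which is what makes them "embedded".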

In our proposed matching procedure, we first use a dynamic programming technique to match small regions and obtain the image warping. The procedure can then efficiently match the growing circular and elliptical regions based on the parameters of the affine transformation equation. It also detects sudden changes in the intensity difference during matching, which serves as occlusion detection. The N best-matched points, those with the lowest average cost, are returned.
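The dynamic programming step is not spelled out here, so the following is only a generic minimum-cost-path sketch of how one circle (a row of the polar reference image) might be matched to a path through the polar transformed image. It is not the authors' formulation. It assumes both polar images are indexed as `[radius, angle]`, and it ignores both the angular shift an affine map introduces and the wrap-around between the last and first angle. The names `ring_cost`, `min_cost_path`, `smooth`, and `max_step` are hypothetical.

```python
import numpy as np


def ring_cost(ref_polar, tgt_polar, r_index):
    """Cost table of shape (n_angles, n_radii) for one reference circle.

    Entry [j, k] is the absolute intensity difference between the reference
    circle at angle j and the target sample at radius index k, angle j.
    """
    ref_row = ref_polar[r_index].astype(float)
    return np.abs(tgt_polar.astype(float) - ref_row[None, :]).T


def min_cost_path(cost, smooth=1.0, max_step=1):
    """Viterbi-style DP: pick one radius index per angle.

    Minimizes the summed data cost plus `smooth` times the radius change
    between neighbouring angles, with changes limited to `max_step`.
    Returns (path, total_cost).
    """
    n_angles, n_radii = cost.shape
    total = np.full((n_angles, n_radii), np.inf)
    back = np.zeros((n_angles, n_radii), dtype=int)
    total[0] = cost[0]
    for j in range(1, n_angles):
        for k in range(n_radii):
            lo, hi = max(0, k - max_step), min(n_radii, k + max_step + 1)
            prev = total[j - 1, lo:hi] + smooth * np.abs(np.arange(lo, hi) - k)
            best = int(np.argmin(prev))
            back[j, k] = lo + best
            total[j, k] = cost[j, k] + prev[best]
    # Trace the cheapest path back from the last angle.
    path = np.empty(n_angles, dtype=int)
    path[-1] = int(np.argmin(total[-1]))
    for j in range(n_angles - 1, 0, -1):
        path[j - 1] = back[j, path[j]]
    return path, float(total[-1, path[-1]])


if __name__ == "__main__":
    # Target intensity equals its radius index, so the reference row below
    # can only be matched at zero data cost along the path it spells out.
    tgt = np.repeat(np.arange(6.0)[:, None], 6, axis=1)
    ref = np.zeros((3, 6))
    ref[1] = [2, 2, 3, 3, 4, 3]
    path, total = min_cost_path(ring_cost(ref, tgt, 1), smooth=0.1)
    print(path, total)
```

The recovered path gives one target radius per angle. Fitting the parameters of the affine transformation to such a path, and reusing them for larger circles, would be a separate step that this sketch does not attempt.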

Progress

Experiments have been conducted on many different data sets to compare this approach with matching based on two SIFT-based local descriptors [1, 2], which perform best among existing local descriptors. To make the comparison fair, all three methods use the same input set of "interest points", obtained with the affine invariant detector proposed by Mikolajczyk and Schmid [3]. The results show that our method is more effective in natural scenes without distinctive texture patterns. It also offers increased robustness to partial visibility, object rotation in depth, and viewpoint angle change.

Research Support

This material is based upon work supported by the Defense Advanced Research Projects Agency (DARPA), through the Department of the Interior, NBC, Acquisition Services Division, under Contract No. NBCHD030010. Additional support was provided by the Singapore-MIT Alliance.

References

[1] D. Lowe, “Object recognition from local scale-invariant features”, ICCV, 1150-1157, 1999.

[2] Y. Ke and R. Sukthankar, “PCA-SIFT: A more distinctive representation for local image descriptors”, CVPR, 506-513, 2004.

[3] K. Mikolajczyk and C. Schmid, “An affine invariant interest point detector”, ECCV, 128-142, 2002.
