Eclat: Automatic Generation and Classification of Test Inputs

Carlos Pacheco & Michael Ernst

The Problem

Suppose we have a working program and a test suite for it. How can we augment the test suite with new, valuable tests? Typically, this is done manually. The programmer thinks hard for a while, and comes up with new inputs to the program that cover untested behavior. Our goal is to provideautomated support to a programmer faced with this task.

The Elat Approach

To help a programmer write new tests, we have developed a technique [3] that creates new test inputs with the following properties:

- They reveal errors.

- They are not covered by the existing test suite.

- They are non-redundant.

We have implemented our technique in Eclat, a tool that creates new unit tests for Java programs. The tool is publicly available:

As an example of the technique and the Eclat tool, suppose the class MyStack.java implements a stack data structure (Figure 1). The class has a small test suite MyStackTest.java that we wish to improve. Eclat analyzes the class and the test suite, and it outputs a set of test inputs (uses of the stack) deemed potentially problematic, which differ from the behavior covered by the original test suite. For this example, Eclat creates an input that pops an empty stack and results in the stack pointer becoming negative, putting the stack in an illegal state. Eclat also creates an input that calls the equals method with null as its argument, resulting in a exception. These are problematic behaviors not explored by the original test suite.

|

| Figure 1 |

Technique

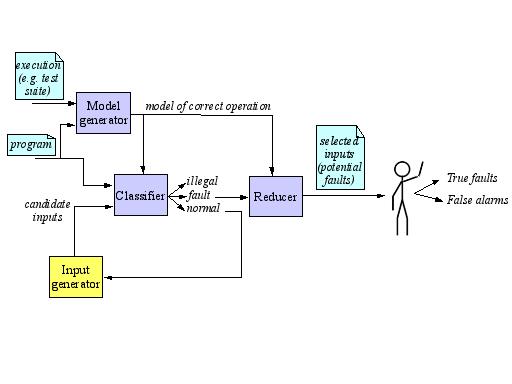

The technique (Figure 2) requires a concrete execution of the program (e.g. an exiting test suite). It works as follows.

- Observe the program's behavior on the provided correct executions, and create a model of correct behavior. For the stack example, the model might contain observations such as "the stack index should never be negative" or "the method equals should not throw an exception."

- Do n times:

- Randomly generate a set of candidate inputs. These are sequences of calls of the interfaces defined in the program.

- Classify the candidate inputs, based on their adherence to the

model derived in step 1. The classification step assigns one of

three labels to each candidate:

- Illegal: the program is not required to handle the input.

- Normal operation: the input makes the program behave normally.

- Fault-revealing: the input is legal, and the program behaves badly.

- Throw away illegal inputs, save fault-revealing inputs, and use normal inputs to create longer sequences of method calls.

- Choose a subset of the inputs labeled fault-revealing. Choose inputs that cover different behavior of the class.

- Present the chosen subset to the user.

|

| Figure 2: The Technique. |

Evaluation

To evaluate the effectiveness of our technique in creating inputs that reveal errors in program, we used Eclat on 47 classes, several of which had multiple versions (totalling 568 implementations of the classes). All errors discovered by Eclat were real errors inadvertently introduced by the authors of the program. For this set of programs, Eclat generated on average 1415 inputs per class, and it reported on average 1.5 inputs as fault-revealing. Of these, 0.55 were indeed faults-revealing, i.e. caused program behavior that pointed to an error in the implementation. The remaining 0.95 inputs were false positives. In other words, approximately 1 in 3 inputs shown to the user were truly fault-revealing.

Example Fault-revealing Inputs

To illustrate the inputs that Eclat reports, and the errors it uncovers, below are two inputs reported. The first input tests a class that implements polynomials (in Java).

| |

public void test_1_integrate() throws Exception {

ps1.RatPoly var6002 = new ps1.RatPoly(4, 3);

ps1.RatNum var17045 = var59.coeff(5);

// The following method call violates properties

// that were true in the execution provided to Eclat:

//

// on exit: var6002.terms[].coeff elements all != 0

ps1.RatPoly var43163 = var6002.integrate(var17045);

// Programmer TODO: Replace this call by the correct assertion.

junit.framework.Assert.assertTrue(false);

}

|

The first two lines in method test_1_integrate() create a polynomial and query part of its state. The next method call causes problematic behavior, which Eclat outputs as a comment. For this input, the call of method integrate results in the polynomial having a term with zero coefficient--something that never occurred in the original test suite. Further examination reveals that in fact, method integrate erroneously adds an unnecessary zero-term coefficient in some cases.

The second example is an erroneous implementation of a method in a utility library. The method create_combinations(int sz, List list) creates all combinations of size sz of the elements in list. The test suite for this class does not test for combinations of size 0. Eclat reports that this input throws an exception in this case, which reveals that the method incorrectly handles combinations of size 0.

| |

public void test_4_create_combinations() throws Exception {

java.util.ArrayList var0 = new java.util.ArrayList();

var0.add(0, 1);

var0.add(0, 2);

var0.add(0, 3);

java.util.List var9326 = UtilMDE.sortList(var0, null);

// Execution of the following method throws

// java.lang.StackOverflowError.

java.util.List var32674 =

UtilMDE.create_combinations(0, var9326);

// Programmer TODO: Replace this call by the correct assertion.

junit.framework.Assert.assertTrue(false);

}

|

Future Work

Future work on this research centers around two themes.

- Input generation. Our technique for generating candidate inputs is a simple bottom-up random generator. We would like to explore other technique to generate candidate inputs, such as exhaustive generation within a small domain.

- Input classification. Several researchers have used machine learning to classify program executions as either correct or faulty [1, 2, 4]. We would like to apply such techniques.

References

[1] J. F. Bowring, J. M. Rehg, and M. J. Harrold. Active learning for automatic classification of software behavior. In ISSTA, pages 195-205, July 2004.

[2] Y. Brun and M. D. Ernst. Finding latent code errors via machine learning over program executions. In ICSE, pages 480-490, May 2004.

[3] C. Pacheco and M.D. Ernst. Eclat: Automatic generation and classification of test inputs. ECOOP 2005 --- Object-Oriented Programming, 19th European Conference, (Glasgow, Scotland), July 25-29, 2005.

[4] A. Podgurski, D. Leon, P. Francis, W. Masri, M. Minch, J. Sun, and B. Wang. Automated support for classifying software failure reports. In ICSE, pages 465-475, May 2003.

The Stata Center, Building 32 - 32 Vassar Street - Cambridge, MA 02139 - USA tel:+1-617-253-0073 - publications@csail.mit.edu (Note: On July 1, 2003, the AI Lab and LCS merged to form CSAIL.) |