Improving Texture and Object Recognition in a Natural Scene-Understanding Environment

Ethan Meyers, Stanley Bileschi & Lior Wolf

The Problem

The goal of the Street Scenes project is to create algorithms that can learn to simultaneously classify multiple diverse objects in complex visual environments. Previous approaches to this problem have either focused solely on local feature information for object classification [1,2,3] or have additionally incorporated contextual information [4,5]. While the object and region classification results produced by these methods are promising, they still fall far short of human capabilities. The current goal of this project is to investigate new methods that help close the gap between human and computer performance.

To address the problem of learning to classify objects and regions in complex environments, a training database of street-scene images was created in which the locations of various regions of interest (cars, trees, buildings, etc.) have been hand-labeled. The first stage of our algorithm segments these images into smaller regions using the “Edison” system [6] and also extracts regions found by an object detection algorithm. Biologically inspired C2 features [7] are then extracted from these regions, and each region is classified using boosting. While our current system has been outperforming previous systems, several flaws remain, such as pieces of trees being found in the middle of buildings and other contextually obvious misclassifications.
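
The text does not say which boosting variant or weak learner classifies each region, so the following is only a minimal sketch under stated assumptions: discrete AdaBoost with decision stumps, applied one-vs-rest to a single class (e.g. building vs. not building), with a generic list of floats standing in for a region's C2 feature vector. The names `Stump`, `stump_predict`, `train_adaboost`, and `score` are hypothetical.

```python
import math
from typing import List, Tuple

# Hypothetical weak learner: (feature index, threshold, polarity).
Stump = Tuple[int, float, int]


def stump_predict(stump: Stump, x: List[float]) -> int:
    """Return +polarity if feature j exceeds the threshold, else -polarity."""
    j, thr, pol = stump
    return pol if x[j] > thr else -pol


def train_adaboost(X: List[List[float]], y: List[int], rounds: int = 10):
    """Discrete AdaBoost with decision stumps; y holds +1 (class) / -1 (not class)."""
    n, d = len(X), len(X[0])
    w = [1.0 / n] * n  # start with uniform weights over training regions
    model = []  # list of (alpha, stump) pairs
    for _ in range(rounds):
        best, best_err = None, float("inf")
        # Exhaustive search over features, thresholds, and polarities.
        for j in range(d):
            for thr in sorted({x[j] for x in X}):
                for pol in (1, -1):
                    stump = (j, thr, pol)
                    err = sum(wi for wi, xi, yi in zip(w, X, y)
                              if stump_predict(stump, xi) != yi)
                    if err < best_err:
                        best, best_err = stump, err
        best_err = min(max(best_err, 1e-10), 1 - 1e-10)  # keep the log finite
        alpha = 0.5 * math.log((1 - best_err) / best_err)
        model.append((alpha, best))
        # Increase weights of misclassified regions, decrease the rest.
        w = [wi * math.exp(-alpha * yi * stump_predict(best, xi))
             for wi, xi, yi in zip(w, X, y)]
        total = sum(w)
        w = [wi / total for wi in w]
    return model


def score(model, x: List[float]) -> float:
    """Weighted vote of the stumps; the sign gives the predicted label."""
    return sum(alpha * stump_predict(stump, x) for alpha, stump in model)
```

For example, with `X = [[0.1, 0.9], [0.2, 0.8], [0.8, 0.2], [0.9, 0.1]]` and `y = [-1, -1, 1, 1]`, the first stump selected thresholds feature 0 at 0.2, and `score(model, [0.7, 0.3])` is positive. Keeping the real-valued score rather than only its sign is what allows a later stage to weigh how confident each first-stage decision is.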

Figure 1: a) An overview of the first stage of our system. b) Examples of building regions misclassified as trees.

Approach

To correct these misclassified regions, we are experimenting with second-stage algorithms that perform spatial smoothing. These algorithms convert images into weighted graphs with two types of weighted edges: edges connecting adjacent pixels/regions, and edges connecting each pixel/region to the label nodes (source/sink nodes). Graph cuts can then be used to produce more homogeneous classifications over local areas [8]. The weights between adjacent pixels can either be uniform or be learned from local-area relationships in our training images (e.g., if gray road regions are never adjacent to blue sky regions in our training set, then edges between adjacent blue and gray pixels should be given a lower weight to encourage a cut between such pixels). The weights between pixels/regions and the label nodes are based on the strength of our first-stage classifier outputs. Such methods should correct many of the errors produced by our current system.
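
A minimal sketch of this construction for the two-label case (e.g. building vs. tree) on a 4-connected pixel grid. It assumes the first-stage outputs are calibrated probabilities and turns them into terminal-edge costs with a negative log, which is a common choice but not one the text specifies. For simplicity it swaps in the Edmonds-Karp max-flow algorithm rather than the Boykov-Kolmogorov algorithm compared in [8]; both yield a minimum cut, only the running time differs. The hypothetical `pair_weight` hook is where uniform or learned adjacency weights would be plugged in, and all function names here are illustrative.

```python
import math
from collections import deque
from typing import Callable, Dict, List, Set


def min_cut_source_side(cap: Dict[int, Dict[int, float]], s: int, t: int) -> Set[int]:
    """Edmonds-Karp max-flow; returns the nodes on the source side of a minimum cut."""
    res: Dict[int, Dict[int, float]] = {}
    for u, nbrs in cap.items():
        for v, c in nbrs.items():
            res.setdefault(u, {}).setdefault(v, 0.0)
            res[u][v] += c
            res.setdefault(v, {}).setdefault(u, 0.0)  # reverse residual edge
    while True:
        # Breadth-first search for a shortest augmenting path.
        parent = {s: None}
        queue = deque([s])
        while queue and t not in parent:
            u = queue.popleft()
            for v, c in res.get(u, {}).items():
                if c > 1e-12 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        if t not in parent:
            return set(parent)  # everything still reachable from the source
        # Push the bottleneck amount of flow along the path.
        flow, v = float("inf"), t
        while parent[v] is not None:
            flow = min(flow, res[parent[v]][v])
            v = parent[v]
        v = t
        while parent[v] is not None:
            u = parent[v]
            res[u][v] -= flow
            res[v][u] += flow
            v = u


def uniform_weight(p: int, q: int) -> float:
    """Uniform smoothing: every neighbouring pair costs the same to separate."""
    return 1.0


def smooth_labels(prob: List[List[float]],
                  pair_weight: Callable[[int, int], float] = uniform_weight) -> List[List[int]]:
    """Two-label graph-cut smoothing of first-stage outputs on a pixel grid.

    prob[r][c] is the (assumed calibrated) probability that pixel (r, c) has label 1;
    pair_weight(p, q) is the penalty for giving neighbours p and q different labels.
    """
    rows, cols = len(prob), len(prob[0])
    n = rows * cols
    s, t = n, n + 1  # source node = label 1, sink node = label 0
    cap: Dict[int, Dict[int, float]] = {u: {} for u in range(n + 2)}
    for r in range(rows):
        for c in range(cols):
            p = r * cols + c
            q1 = min(max(prob[r][c], 1e-6), 1 - 1e-6)
            cap[s][p] = -math.log(1 - q1)  # cut (paid) if p ends up with label 0
            cap[p][t] = -math.log(q1)      # cut (paid) if p ends up with label 1
            for dr, dc in ((0, 1), (1, 0)):  # right and down neighbours
                rr, cc = r + dr, c + dc
                if rr < rows and cc < cols:
                    q = rr * cols + cc
                    cap[p][q] = cap[q][p] = pair_weight(p, q)
    source_side = min_cut_source_side(cap, s, t)
    return [[1 if r * cols + c in source_side else 0 for c in range(cols)]
            for r in range(rows)]
```

On a 3x3 grid where every pixel has probability 0.9 except an isolated center pixel at 0.4, the center is labelled 0 when `pair_weight` always returns 0 (no smoothing) but flips to 1 under `uniform_weight`, which is exactly the kind of isolated "tree inside a building" error the second stage targets. With more than two labels (cars, trees, buildings, roads, sky), a move-making scheme such as alpha-expansion would apply binary cuts like this one repeatedly.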

Preliminary Results

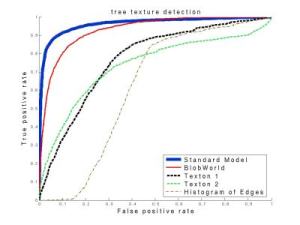

Even without the contextual smoothing stage, our system already outperforms comparable systems (Figure 2). Results from the additional contextual stage are not yet available, but we expect it to improve on the current performance.

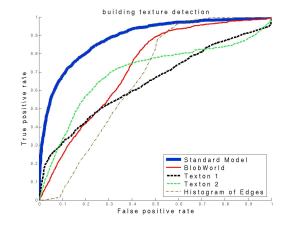

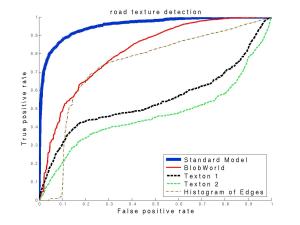

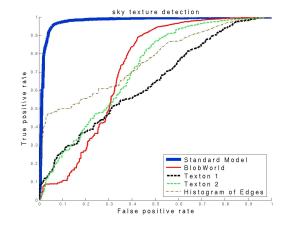

Figure 2: ROC curves comparing our C2-based algorithm (blue) to Blobworld (red), textons (black and green), and edge histograms (brown): a) buildings, b) roads, c) streets, d) trees.
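
Each curve in Figure 2 plots, for one region class, the true positive rate against the false positive rate as the decision threshold on a classifier's score is lowered; a curve closer to the top-left corner indicates better classification. The evaluation code behind the figure is not described, so the following is only a generic sketch of how such a curve and its area could be computed from per-region scores, assuming binary labels (1 if the region truly belongs to the class). The functions `roc_curve` and `auc` are hypothetical names.

```python
from typing import List, Tuple


def roc_curve(scores: List[float], labels: List[int]) -> List[Tuple[float, float]]:
    """(false positive rate, true positive rate) points as the threshold is lowered.

    labels[i] is 1 if region i truly belongs to the class, 0 otherwise.
    """
    pos = sum(1 for y in labels if y == 1)
    neg = len(labels) - pos
    if pos == 0 or neg == 0:
        raise ValueError("need at least one positive and one negative example")
    ranked = sorted(zip(scores, labels), key=lambda pair: -pair[0])
    points = [(0.0, 0.0)]
    tp = fp = 0
    i = 0
    while i < len(ranked):
        thr = ranked[i][0]
        # Accept every example tied at this score before emitting a point.
        while i < len(ranked) and ranked[i][0] == thr:
            if ranked[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / neg, tp / pos))
    return points


def auc(points: List[Tuple[float, float]]) -> float:
    """Area under the ROC curve using the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))
```

Grouping tied scores before emitting a point keeps the curve from depending on the arbitrary order of equally scored regions; a classifier that ranks every positive above every negative reaches an area of 1.0, and one that assigns the same score to everything gets 0.5.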

Acknowledgments

This report describes research done at the Center for Biological & Computational Learning, which is in the McGovern Institute for Brain Research at MIT, as well as in the Dept. of Brain & Cognitive Sciences, and which is affiliated with the Computer Sciences & Artificial Intelligence Laboratory (CSAIL).

This research was sponsored by grants from: Office of Naval Research (DARPA) Contract No. MDA972-04-1-0037, Office of Naval Research (DARPA) Contract No. N00014-02-1-0915, National Science Foundation (ITR/SYS) Contract No. IIS-0112991, National Science Foundation (ITR) Contract No. IIS-0209289, National Science Foundation-NIH (CRCNS) Contract No. EIA-0218693, National Science Foundation-NIH (CRCNS) Contract No. EIA-0218506, and National Institutes of Health (Conte) Contract No. 1 P20 MH66239-01A1.

Additional support was provided by: Central Research Institute of Electric Power Industry (CRIEPI), Daimler-Chrysler AG, Compaq/Digital Equipment Corporation, Eastman Kodak Company, Honda R&D Co., Ltd., Industrial Technology Research Institute (ITRI), Komatsu Ltd., Eugene McDermott Foundation, Merrill-Lynch, NEC Fund, Oxygen, Siemens Corporate Research, Inc., Sony, Sumitomo Metal Industries, and Toyota Motor Corporation.

References

[1] C. Papageorgiou and T. Poggio. A Trainable System for Object Detection. In IJCV, pages 15-33, 2000.

[2] R. Fergus, P. Perona, and A. Zisserman. Object class recognition by unsupervised scale-invariant learning. In CVPR, volume 2, pages 254-271, 2003.

[3] H. Schneiderman and T. Kanade. Object Detection Using the Statistics of Parts. In International Journal of Computer Vision, 2002.

[4] A. Torralba, K. Murphy, W. Freeman, and M. Rubin. Context-Based Vision System for Place and Object Recognition. In AI Memo 2003-005, March 2003.

[5] P. Carbonetto, N. de Freitas, and K. Barnard. A Statistical Model for General Contextual Object Recognition. In European Conference on Computer Vision, 2004.

[6] C. M. Christoudias, B. Georgescu, and P. Meer. Synergism in Low Level Vision. In ICPR, Vol. IV, pp. 150-155, August 2002.

[7] T. Serre, L. Wolf, and T. Poggio. A New Biologically Motivated Framework for Robust Object Recognition. In AI Memo 2004-026, November 2004.

[8] Y. Boykov and V. Kolmogorov. An Experimental Comparison of Min-Cut/Max-Flow Algorithms for Energy Minimization in Vision. In IEEE Transactions on PAMI, Vol. 26, Number 9, pp. 1124-1137, September 2004.

The Stata Center, Building 32 - 32 Vassar Street - Cambridge, MA 02139 - USA tel:+1-617-253-0073 - publications@csail.mit.edu (Note: On July 1, 2003, the AI Lab and LCS merged to form CSAIL.)