The IRIS Project: Scalable, Robust, Peer-to-Peer Systems

Frank Dabek, M. Frans Kaashoek, David Karger, Jinyang Li, Robert Morris, Emil Sit & Jeremy Stribling

Introduction

The Internet has demonstrated the power of a global naming and communications infrastructure: each new application need not implement its own network, but can simply assume a shared global communication infrastructure. The Infrastructure for Resilient Internet Systems (IRIS) project is developing a novel decentralized infrastructure, based on distributed hash tables (DHTs), that will enable a new generation of large-scale distributed applications. DHTs are robust in the face of failures, attacks and unexpectedly high loads. They are scalable, achieving large system sizes without incurring undue overhead. They are self-configuring, automatically incorporating new nodes without manual intervention or oversight. They provide a simple and flexible interface and are simultaneously usable by many applications.

Distributed hash tables provide two key functions. First, they allow nodes to agree upon and efficiently locate a rendezvous point for a given object without central coordination — this is done using protocols such as Chord and Accordion. Our recent research has sought to build real implementations of these protocols and to ensure that they efficiently use available (but constrained) resources. Second, DHTs provide a simple storage interface, allowing applications to put a data object and then get the object at a later time using a key provided by the DHT. We have focused on providing robustness and performance for data stored in this manner, by careful placement and management of object replicas. Our implementation of this is called DHash.

In addition, we are building applications that take advantage of this infrastructure to provide benefits and features that would otherwise be difficult to achieve. Among these are a low-overhead Usenet server and a distributed and cooperative research library.

Robust Distributed Hash Table Lookups

DHTs maintain routing tables used when forwarding lookups. A node's routing table consists of a set of neighbor entries, each of which contains the IP address and DHT identifier of some other node. A DHT node must maintain its routing table, both populating it initially and ensuring that the neighbors it refers to are still alive.

An application developer wishing to use a DHT must choose a protocol from the wide spectrum of possible DHT performance options. An O(1) protocol might work well early in the deployment of an application, when the number of nodes is small, but could generate too much maintenance traffic as the application becomes popular or if churn increases. Starting with an O(log n) protocol would result in unnecessarily low performance on small networks or if churn turns out to be low. While the developer can manually tune a O(log n) protocol to increase the size of its routing table, such tuning is difficult and workload-dependent.

Accordion [3] is a new DHT design that automatically tunes parameters such as routing table size in order to achieve the best performance. Accordion has a single parameter, a network bandwidth budget, that allows control over the consumption of the resource that is most constrained for typical users. Given the budget, Accordion adapts its behavior across a wide range of network sizes and churn rates to provide low-latency lookups. The problems that Accordion must solve are how to arrive at the best routing table size in light of the budget and the stability of the node population, how to choose the most effective neighbors to place in the routing table, and how to divide the maintenance budget between acquiring new neighbors and checking the liveness of existing neighbors.

Accordion solves these problems in a unique way. Unlike other protocols, it is not based on a particular data structure such as a hypercube or de Bruijn graph that constrains the number and choice of neighbors. Instead, each node learns of new neighbors as a side-effect of ordinary lookups, but selects them so that the density of its neighbors is inversely proportional to their distance in ID space from the node. This distribution allows Accordion to vary the table size along a continuum while still providing the same worst-case guarantees as traditional O(log n) protocols. A node's bandwidth budget determines the rate at which a node learns. Each node limits its routing table size by evicting neighbors that it judges likely to have failed: those which have been up for only a short time or have not been heard from for a long time. Therefore, high churn leads to a high eviction rate. The equilibrium between the learning and eviction processes determines the table size.

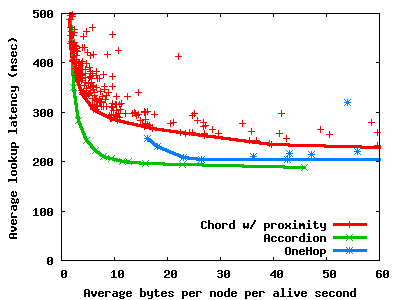

We evaluate Accordion in the performance vs. cost (PVC) framework [4]. When bandwidth is plentiful, Accordion provides lookup latencies and maintenance overhead similar to that of OneHop. When bandwidth is scarce, Accordion has lower lookup latency and less maintenance overhead than Chord, even when Chord incorporates proximity and has been tuned for the specific workload.

For example, Figure 1 shows the lookup latency vs. bandwidth overhead tradeoffs of Accordion compared to that of Chord and OneHop. The simulated network consists of 3000 nodes that join and leave the system with a pareto distributed lifetime of median 1 hour. The average round trip time is 180ms and each node issues a lookup for a random key with mean 10 minutes. The convex hull segments for Chord and OneHop outline their best latency/bandwidth tradeoff curves found by systematically exploring Chord and OneHop's parameter space. For Accordion, the bandwidth budget is varied to trace its latency/bandwidth tradeoff curve; no parameter exploration is necessary.

Maintaining Data under churn in DHash

DHash is a system for providing block storage, built using the Chord lookup protocol to organize nodes and data. DHash aims to provide efficient reads and writes, data integrity and data availability. That is, it seeks to be usable on a day-to-day basis. Early work [1] defined the basic interface and addressed the problem of data integrity by relating the lookup key to the stored data using a cryptographic hash function or public key signature. Subsequent work [2] focused on reducing lookup latency and achieving high throughput.

Our current work tackles the problem of maintaining the durability and availability of data. There is great potential for this, due to the large number of distributed participants and their contributed storage and bandwidth resources. If these resources could be efficiently harnessed, DHash could provide robustness and a high storage capacity. Unfortunately, this problem is made difficult by unpredictable frequent node failures — DHash must have an intelligent strategy for managing replicas.

Replication is traditionally used to ensure that data remains both available (reachable on the network) and durable (stored intact, but perhaps not reachable). Availability is a harder goal than durability: availability may be threatened by any network, processor, software, or disk failure, while durability is usually only threatened by disk failures. Small-scale fault-tolerant storage systems such as RAID provide availability and durability relatively inexpensively since failures are rare and plenty of local network or bus bandwidth is available to create new replicas. In a large distributed system, failures become more common and available bandwidth becomes more expensive. Further, it is difficult to distinguish between temporary failures (that do not affect durability) and permanent failures (that do).

To handle failures efficiently, we have developed a model for durability based on the rate of permanent replica failure and the time required to create new replicas over a limited-capacity network. The model predicts how many replicas are required to ensure durability, and guides decisions about how to maintain the replicas and where to place them. This model has helped us develop an algorithm that implicitly creates enough extra replicas to maintain availability while minimizing subsequent repairs due to temporary failures. This algorithm reduces the amount of replication traffic compared to existing techniques that create a set amount of extra redundancy at the outset.

Applications: UsenetDHT

UsenetDHT [5] is a DHT-based replacement for the Usenet messaging system. UsenetDHT presents the existing Usenet interface to clients but reduces storage and bandwidth costs by storing articles in a DHT rather than replicating them at each server. In UsenetDHT, the bandwidth required to support read and writes for the full Usenet feed is around 200KB/s; additionally, simulations suggest that to maintain the availability of this data in a relatively stable environment is on the order of 1-2Mbps. By contrast, a traditional Usenet server must dedicate around 20MB/s to carry a full feed.

Applications: OverCite

OverCite [6] is a proposal for a new architecture for a distributed and cooperative research library based on a DHT. OverCite provides the same services as CiteSeer on a distributed set of nodes, eliminating the need for a single institution to contribute all of the resources necessary to run CiteSeer; the resource and bandwidth requirement of each participating node is significantly reduced. The DHT's role as a distributed storage layer, coupled with its robust and scalable models for data management and peer communication, allows the decentralization of the CiteSeer infrastructure and the inclusion of additional CPU and storage resources. Besides serving as a distributed, robust archive of data, the DHT simplifies the coordination of distributed activities, such as crawling. Finally, the DHT acts as a rendezvous point for producers and consumers of meta-data and documents.

References

[1] Frank Dabek, M. Frans Kaashoek, David Karger, Robert Morris, and Ion Stoica. Wide-area cooperative storage with CFS. In Proc. of the 18th ACM Symposium on Operating SYstem Princiles (SOSP '01), October 2001.

[2] Frank Dabek, Jinyang Li, Emil Sit, James Robertson, Frans Kaashoek, and Robert Morris. Designing a DHT for low latency and high throughput. In Proc. of the 1st Symposium on Networked System Design and Implementation (NSDI '04), March 2004.

[3] Jinyang Li, Jeremy Stribling, Robert Morris and M. Frans Kaashoek. Bandwidth-efficient management of DHT routing tables. In The Proceedings of the 2nd Symposium on Networked System Design and Implementation (NSDI'05) May 2005

[4] Jinyang Li, Jeremy Stribling, Robert Morris, M. Frans Kaashoek and Thomer M. Gil. A Performance vs. Cost Framework for Evaluating DHT Design Tradeoffs under Churn. In Proceedings of the 24th IEEE Infocom Mar 2005.

[5] Emil Sit, Frank Dabek, and James Robertson. UsenetDHT: A Low Overhead Usenet Server In Proceedings of the 3rd International Workshop on Peer-to-Peer Systems (IPTPS04) Feb 2004

[6] Jeremy Stribling, Isaac G. Councill, Jinyang Li, M. Frans Kaashoek, David R. Karger, Robert Morris, and Scott Shenker. OverCite: A Cooperative Digital Research Library. In Proceedings of the 4th International Workshop on Peer-to-Peer Systems (IPTPS '05) Feb 2005

The Stata Center, Building 32 - 32 Vassar Street - Cambridge, MA 02139 - USA tel:+1-617-253-0073 - publications@csail.mit.edu (Note: On July 1, 2003, the AI Lab and LCS merged to form CSAIL.) |