Image and Shape Matching with Distributions of Local Features

Kristen Grauman & Trevor Darrell

Abstract

Sets of local features that are invariant to common image transformations are an effective representation to use when comparing images; current methods typically judge feature sets' similarity via a voting scheme (which ignores co-occurrence statistics) or by comparing histograms over a set of prototypes (which must be found by clustering). We present a method for efficiently comparing images based on their discrete distributions (bags) of distinctive local invariant features, without clustering descriptors. Similarity between images is measured with an approximation of the Earth Mover's Distance (EMD), which quickly computes minimal-cost correspondences between two bags of features. Each image's feature distribution is mapped into a normed space with a low-distortion embedding of EMD. Examples most similar to a novel query image are retrieved in time sublinear in the number of examples via approximate nearest neighbor search in the embedded space. We evaluate our method with scene, object, and texture recognition tasks.

Summary of Problem and Approach

Image matching, or comparing images in order to obtain a measure of their similarity, is an important computer vision problem with a variety of applications, such as content-based image retrieval, object and scene recognition, texture classification, and video data mining. The task of identifying similar objects and scenes within a database of images remains challenging due to viewpoint or lighting changes, deformations, and partial occlusions that may exist across different examples. Global image statistics such as color histograms or responses to filter banks have limited utility in these real-world scenarios, and often cannot give adequate descriptions of an image's local structures and discriminating features. Instead, researchers have recently turned to representations based on local features that can be reliably detected (for example, using a Harris or SIFT [4] interest operator) and are invariant to the transformations likely to occur across images, such as photometric or various geometric transformations.

A number of recent matching techniques extract invariant local features for all images, and then use voting to rank the database images in similarity: the query image's features vote independently for features from the database images (where votes go to the most similar feature under some distance), possibly followed by a verification step to account for spatial or geometric relationships between the features [5,4,6,7]. When sufficiently salient features are present in an image, matching methods based on the independent voting scheme may successfully identify good matches in the database. However, using a query image's features to independently index into the database ignores useful information that is captured by the co-occurrence of a set of distinctive features -- information that is especially important when categorization of objects or textures is the goal -- and it fails to distinguish between images having varying numbers of similar features.

Other matching approaches have taken feature co-occurrences into account by using vector quantization to represent each image by its frequency of prototypical feature occurrences, then comparing the weighted histograms with a bin-by-bin distance measure [8,9]. However, while mapping detected features to a set of global prototypes may make matching the distribution of features more efficient, such approaches assume that the space of features that will be encountered in novel images is known a priori when generating the prototypes, and they face the difficulty of properly choosing the number of cluster centers to use. Moreover, it has been shown that bin-by-bin measures (e.g., L_p distance, normalized scalar product) are less robust than cross-bin measures (e.g., the Earth Mover's Distance (EMD), which allows features from different bins to be matched) for capturing perceptual dissimilarity between distributions [10]. Methods that cluster features on a per-example basis still must choose a quantization level and risk losing discrimination power when that level is too coarse.

To address these issues, we propose a technique that compares images by matching their distributions of local invariant features. We also show how spatial neighborhood constraints may be incorporated directly into the matching process by augmenting features with invariant descriptions of their geometric relationship with other features in the image. We measure similarity between two discrete feature distributions with an approximation of EMD -- the measure of the amount of work necessary to transform one weighted point set into another. To match efficiently, we use a low-distortion embedding of EMD into a normed space which reduces a complex, correspondence-based distance to a simple, efficiently computable norm over very sparse vectors. The EMD embedding also enables the use of approximate nearest neighbor (NN) search techniques that guarantee query times that are sublinear in the number of examples to be searched [11,12].

We demonstrate our method in four very different contexts: recognition of scenes, objects, textures, and contour shapes. We show the advantage of using the joint statistics when matching with local features as opposed to matching each feature independently under a voting scheme, and we investigate the benefits of matching the actual detected features as opposed to vector-quantized versions of them. See [1] and [2] for the details of this approach and quantitative results comparing it to other methods.

|

|

|

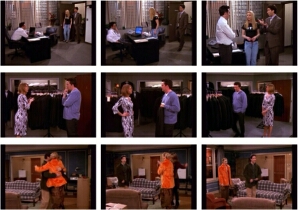

| Some

example retrievals using the proposed method to match query images

to databases of scenes (left), objects (center), and textures (right).

Each row shows query as leftmost image, followed by its nearest

neighbors in rank order. See [1] for details. |

||

Research Support

This project was supported in part by a Computational Science Graduate Fellowship from the Department of Energy.

References

[1] K. Grauman and T. Darrell. Efficient Image Matching with Distributions of Local Invariant Features. To appear, Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, San Diego, CA, USA, June 2005.

[2] K. Grauman and T. Darrell. Fast Contour Matching Using Approximate Earth Mover's Distance. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 220-227, Washington DC, June 2004.

[3] T. Yeh, K. Grauman, K. Tollmar, and T. Darrell. A Picture is Worth a Thousand Keywords: Image-Based Object Search on a Mobile Platform. In Proceedings CHI 2005: Conference on Human Factors in Computing Systems, April 2005.

[4] D. Lowe. Distinctive Image Features from Scale-Invariant Keypoints. International Journal of Computer Vision, 60(2):91-110, Jan 2004.

[5] K. Mikolajczyk and C. Schmid. Indexing Based on Scale-Invariant Interest Points. In Proceedings of the International Conference on Computer Vision, Vancouver, Canada, July 2001.

[6] T. Tuytelaars and L. V. Gool. Content-based Image Retrieval Based on Local Affinely Invariant Regions. In Proceedings of the 3rd International Conference on Visual Information Systems, Amsterdam, the Netherlands, June 1999.

[7] F. Shaffalitzky and A. Zisserman. Automated Scene Matching in Movies. In Proceedings on the Challenge of Image and Video Retrieval, London, U.K., July 2002.

[8] J. Sivic and A. Zisserman. Video Google: A Text Retrieval Approach to Object Matching in Videos. In Proceedings International Conference on Computer Vision, Nice, France, Oct 2003.

[9] G. Csurka, C. Dance, L. Fan, J. Willamowski, and C. Bray. Visual Categorization with Bags of Keypoints. In Proceedings of the European Conference on Computer Vision, Prague, Czech Republic, May 2004.

[10] Y. Rubner, C. Tomasi, and L. Guibas. The Earth Mover's Distance as a Metric for Image Retrieval. International Journal of Computer Vision, 40(2):99-121, Nov 2000.

[11] P. Indyk and N. Thaper. Fast Image Retrieval via Embeddings. In 3rd International Workshop on Statistical and Computational Theories of Vision, Nice, France, Oct 2003.

[12] A. Gionis, P. Indyk, and R. Motwani. Similarity Search in High Dimensions via Hashing. In Proceedings of the 25th International Conference on Very Large Data Bases, Edinburgh, Scotland, Sept 1999.

The Stata Center, Building 32 - 32 Vassar Street - Cambridge, MA 02139 - USA tel:+1-617-253-0073 - publications@csail.mit.edu (Note: On July 1, 2003, the AI Lab and LCS merged to form CSAIL.) |