Pyramid Match Kernels: Discriminative Classification with Sets of Image Features

Kristen Grauman & Trevor Darrell

Abstract

Discriminative learning is challenging when examples are sets of local image features, and the sets vary in cardinality and lack any sort of meaningful ordering. Kernel-based classification methods can learn complex decision boundaries, but a kernel similarity measure for unordered set inputs must somehow solve for correspondences -- generally a computationally expensive task that becomes impractical for large set sizes. We present a new fast kernel function which maps unordered feature sets to multi-resolution histograms and computes a weighted histogram intersection in this space. This "pyramid match" computation is linear in the number of features, and it implicitly finds correspondences based on the finest resolution histogram cell where a matched pair first appears. Since the kernel does not penalize the presence of extra features, it is robust to clutter. We show the kernel function is positive-definite, making it valid for use in learning algorithms whose optimal solutions are guaranteed only for Mercer kernels. We demonstrate our algorithm on object recognition tasks and show it to be dramatically faster than current approaches.

Summary of Problem and Approach

A variety of representations used in computer vision consist of unordered sets of features, where each set varies in cardinality, and the correspondence between the features across each set is unknown. For instance, an image may be described by a set of detected local affine-invariant regions, a shape may be described by a set of local descriptors defined at each edge point, or a person's face may be represented by a set of patches with different facial parts. In such cases, one set of feature vectors denotes a single instance of a particular class of interest (an object, scene, shape, face, etc.), and it is expected that the number of features will vary across examples due to viewpoint changes, occlusions, or inconsistent detections by the interest operator.

To perform learning tasks like categorization or recognition with such representations is challenging. While generative methods have had some success, kernel-based discriminative methods are known to represent complex decision boundaries very efficiently and generalize well to unseen data. For example, the Support Vector Machine (SVM) is a widely used approach to discriminative classification that finds the optimal separating hyperplane between two classes. Kernel functions, which measure similarity between inputs, introduce non-linearities to the decision functions; the kernel non-linearly maps two examples from the input space to the inner product in some feature space. However, conventional kernel-based algorithms are designed to operate on fixed-length vector inputs, where each vector entry corresponds to a particular global attribute for that instance; the commonly used general-purpose kernels defined on R^n inputs (e.g., Gaussian RBF, polynomial) are not applicable in the space of vector sets.

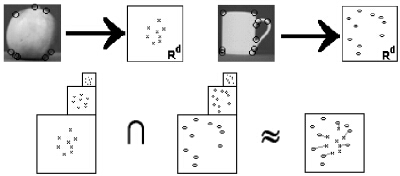

In this work we propose a pyramid match kernel -- a new kernel function over unordered feature sets that allows such sets to be used effectively and efficiently in kernel-based learning methods. Each feature set is mapped to a multi-resolution histogram that preserves the individual features' distinctness at the finest level. The histogram pyramids are then compared using a weighted histogram intersection computation, which we show defines an implicit correspondence based on the finest resolution histogram cell where a matched pair first appears.

The similarity measured by the pyramid match approximates the similarity measured by the optimal correspondences between feature sets of unequal cardinality (i.e., the partial matching that optimally maps points in the lower cardinality set to some subset of the points in the larger set, such that the summed similarities between matched points is maximal). Our kernel is extremely efficient and can be computed in time that is linear in the sets' cardinality. We show that our kernel function is positive-definite, meaning that it is appropriate to use with learning methods that guarantee convergence to a unique optimum only for positive-definite kernels (e.g., SVMs).

Because it does not penalize the presence of superfluous data points in either input set, the proposed kernel is robust to clutter. As we will show, this translates into the ability to handle unsegmented training examples and test examples with varying backgrounds or occlusions. The kernel also respects the co-occurrence relations inherent in the input sets: rather than matching features in a set individually, ignoring potential dependencies conveyed by features within one set, our similarity measure captures the features' joint statistics.

Other approaches to this problem have recently been proposed [2,3,4,5,6,7], but unfortunately each of these techniques suffers from some number of the following drawbacks: computational complexities that make large feature set sizes infeasible; limitations to parametric distributions which may not adequately describe the data; kernels that are not positive-definite (do not guarantee unique solutions for an SVM); limitations to sets of equal size; and failure to account for dependencies within feature sets.

Our method successfully addresses all of these issues, resulting in a kernel appropriate for comparing unordered, variable length feature sets within any existing kernel-based learning paradigm. We demonstrate our algorithm with object recognition tasks and show that its accuracy is comparable to current approaches, while requiring at least an order of magnitude less computation time. See [1] for details and results.

Research Support

This project was supported in part by a Computational Science Graduate Fellowship from the Department of Energy.

References

[1] K. Grauman and T. Darrell. Pyramid Match Kernels: Discriminative Classification with Sets of Image Features. MIT CSAIL Technical Report, AI Memo AIM-2005-007, Cambridge, MA, USA, March 2005.

[2] C. Wallraven, B. Caputo, and A. Graf. Recognition with Local Features: the Kernel Recipe. In Proceedings of the IEEE Conference on Computer Vision, Nice, France, Oct 2003.

[3] S. Lyu. Mercer Kernels for Object Recognition with Local Features. To appear, Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, San Diego, CA, USA, June 2005.

[4] S. Boughhorbel, J-P. Tarel, and F. Fleuret. Non-Mercer Kernels for SVM Object Recognition. In Proceedings of the British Machine Vision Conference, London, U.K., Sept 2004.

[5] R. Kondor and T. Jebara. A Kernel Between Sets of Vectors. In Proceedings of the International Conference on Machine Learning, Washington DC, USA, Aug 2003.

[6] L. Wolf and A. Shashua. Learning Over Sets Using Kernel Principal Angles. In Journal of Machine Learning Research, pp. 913-931, Dec 2003.

[7] P. Moreno, P. Ho, and N. Vasconcelos. A Kullback-Leibler Divergence Based Kernel for SVM Classification in Multimedia Applications. InAdvances in Neural Information Processing, Vancouver, Canada, Dec 2003.

The Stata Center, Building 32 - 32 Vassar Street - Cambridge, MA 02139 - USA tel:+1-617-253-0073 - publications@csail.mit.edu (Note: On July 1, 2003, the AI Lab and LCS merged to form CSAIL.) |