Imitation Learning of Whole-Body Grasps

Kaijen Hsiao & Tomas Lozano-Perez

What

We are building a system that uses imitation learning to teach a robot to grasp objects with both hand grasps and whole-body grasps, which use the arms and torso as well as the hands.

Why

Humans often learn to manipulate objects by observing other people. In much the same way, robots can use imitation learning to pick up useful skills. To give our robots the full range of object manipulation abilities that humans possess, we would like them to manipulate objects using not only their hands but other body parts as well. In addition, building a robot that can learn to use arbitrary surfaces of its arms and torso to pick up objects forces us to create a framework that could generalize to manipulation tasks more complex than grasping.

How

Demonstration grasp trajectories are created by teleoperating a simulated robot to pick up simulated objects, and are stored as sequences of keyframes, the points at which contacts with the object are gained or lost. When presented with a new object, the system compares it against the objects in a stored database and picks a demonstrated grasp that was used on a similar object. In our somewhat simplified implementation, both the new object and the demonstration object are modeled as combinations of primitives (boxes, cylinders, and spheres), and the primitives of each object are grouped into 'functional groups' that geometrically match parts of the new object with similar parts of the demonstration object. These functional groups are then used to map contact points from the demonstration object to the new object, and the resulting adapted keyframes are adjusted and checked for feasibility. Finally, a trajectory is found that moves among the keyframes in the adapted grasp sequence, and the full trajectory is tested for feasibility by executing it in the simulation.
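Two of the steps above, storing a demonstration as keyframes and mapping a contact point from a demonstration primitive to a matched primitive on the new object, can be illustrated with a minimal Python sketch. It is not the system's actual implementation. The `Box` class, the names `extract_keyframes` and `map_contact`, the contact labels, and the choice to map contacts by preserving their normalized position inside an axis-aligned box are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class Box:
    """Axis-aligned box primitive (hypothetical representation)."""
    center: Vec3
    size: Vec3  # full side lengths along x, y, z


def extract_keyframes(contacts_per_step: Sequence[FrozenSet[str]]) -> List[int]:
    """Return the time steps at which the set of contacts changes.

    Each element of contacts_per_step is the set of robot surfaces
    touching the object at that step; a keyframe is any step where a
    contact is gained or lost relative to the previous step.
    """
    keyframes = []
    previous: FrozenSet[str] = frozenset()  # assume no contact before step 0
    for step, contacts in enumerate(contacts_per_step):
        if contacts != previous:
            keyframes.append(step)
            previous = contacts
    return keyframes


def map_contact(point: Vec3, demo: Box, new: Box) -> Vec3:
    """Map a contact point from the demo box to the new box.

    Assumption: the contact keeps the same normalized offset from the
    box center, so it scales with each side length.
    """
    return tuple(
        n_c + (p - d_c) / d_s * n_s
        for p, d_c, d_s, n_c, n_s in zip(
            point, demo.center, demo.size, new.center, new.size
        )
    )


# Usage: a six-step demonstration in which first one palm, then both, touch.
demo_contacts = [
    frozenset(),
    frozenset(),
    frozenset({"left_palm"}),
    frozenset({"left_palm"}),
    frozenset({"left_palm", "right_palm"}),
    frozenset({"left_palm", "right_palm"}),
]
print(extract_keyframes(demo_contacts))  # [2, 4]

demo_box = Box(center=(0.0, 0.0, 0.0), size=(2.0, 2.0, 2.0))
new_box = Box(center=(1.0, 0.0, 0.0), size=(4.0, 2.0, 1.0))
print(map_contact((1.0, 0.0, 0.5), demo_box, new_box))  # (3.0, 0.0, 0.25)
```

A contact on the demonstration box's face stays on the corresponding face of the new box under this mapping, which is one simple way to keep adapted contacts on the object's surface. Cylinders and spheres would need analogous mappings, and the adapted keyframes would still have to pass the feasibility checks described above.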

Progress

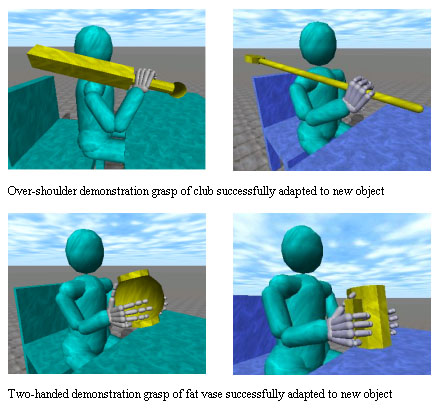

Currently, the system successfully uses this method to pick up 92 out of 100 randomly generated test objects (made of up to three boxes, cylinders, and spheres in a row) in simulation. Sample adapted grasps are shown in Figure 1.

Figure 1: Example Demonstration and Adapted Grasps

Research Support

This research is primarily supported by an NSF Graduate Research Fellowship.

The Stata Center, Building 32 - 32 Vassar Street - Cambridge, MA 02139 - USA tel:+1-617-253-0073 - publications@csail.mit.edu (Note: On July 1, 2003, the AI Lab and LCS merged to form CSAIL.)