Video-based 6-DOF Pose Determination from Known Structure

Olivier Koch & Seth Teller

Introduction

Most robotics applications require the system to be able to localize itself in its environment. In the real life, this task is often made more difficult by the fact that the topology of the environment is unknown, thus creating a double problem commonly known as SLAM (Simultaneous Mapping and Localization, [1]). SLAM algorithms therefore attempt to reconstruct the world structure and the camera path simultaneously.

In our approach, a fairly accurate as-built 3D model of the environment is assumed and we try to solve for the 6-DOF camera pose only - that is, a 3D translation vector and a 3D rotation vector. We use a high-resolution omnidirectonial vision system such as the LadyBug ([3]) to handle situations with high occlusions.

A Statistical Approach

Our approach consists in matching the features observed on the flow of images of the camera against the real 3D world features. Edges, rather than vertices, are good candidates for such features since they are more reliable for camera alignment and less likely to be occluded. Clearly, a brute-force method consisting in matching the observed edges against the whole set of possible edges in the 3D model would be unfeasible in real-time. In addition, such a system would be very sensitive to clutter and dynamic changes. The idea is to reconstruct a basic 3D model of the world from a small sequence of images. This model is then matched against the real model using a statistical tool such as RANSAC ([2]).

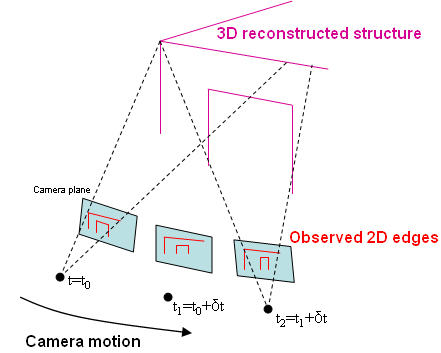

Figure 1: Reconstruction of a 3D model from a sequence of 2D edges

Visibility Computation

In order to allow real-time performance, the 3D model is pre-processed offline. We have developed an openGL-based method for computing the set of visible edges from a series of predefined nodes in the world. For each node, a 3D signature is then stored in a lookup table that handles high-paced requests from the system in online mode. The major benefit of this design is that the amount of data required for camera localization is constant and does not depend on the size of the whole model.

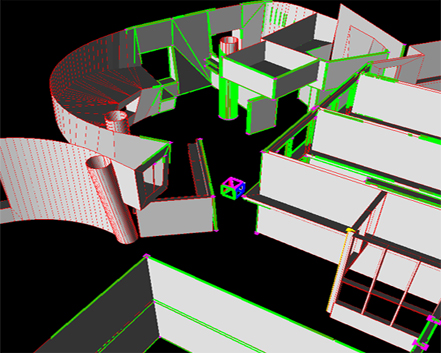

Figure 2: Visibility field in Stata 32-33x. The camera is represented as a box. Visible edges are displayed in green.

Node Jumping

At startup, the system determines the set of possible nodes corresponding to its observations. It is actually possible that there be several options across the building (for example, if the system is located in a hallway). In such a case, the system has to keep track of all possible options. However, as the camera moves across the building, the set of options should ultimately shrink to the only correct and consistent one matching all of the past observations. Once the system is locked on one node, the navigation task consists in jumping from node to node, using the lookup table as an indicator for the expected features.

We are currently working on defining a robust method for estimating the camera pose based on signature matching. Other items include building a real-time multi-threaded graphics user interface and capturing ground-truth sequences for system validation.

References

[1] Bosse, M., Newman, P., Leonard, J., and Teller, S. Slam in large-scale cyclic environments using the atlas framework, IJRR 2004.

[2] M. A. Fischler, R. C. Bolles. Random Sample Consensus: A Paradigm for Model Fitting with Applications to Image Analysis and Automated Cartography. Comm. of the ACM, Vol 24, pp 381-395, 1981.

[3] Ladybug Omnidirectional Camera System, User Guide v1.0, PointGrey Research, www.pointgrey.com

The Stata Center, Building 32 - 32 Vassar Street - Cambridge, MA 02139 - USA tel:+1-617-253-0073 - publications@csail.mit.edu (Note: On July 1, 2003, the AI Lab and LCS merged to form CSAIL.) |