Fighting Phishing at the User Interface

Min Wu & Robert Miller

Motivation

As people increasingly rely on the Internet to do business, Internet fraud becomes a growing threat. Internet fraud uses misleading online messages to deceive users into forming a false belief and to induce them to take dangerous actions that compromise their own or other people's welfare. The most prevalent kind of Internet fraud is phishing. Phishing uses emails and websites that are designed to look like those of legitimate organizations to deceive users into disclosing personal or financial information. The hostile party can then use this information for criminal purposes, such as identity theft and fraud. Users can be tricked into disclosing their information either by entering it into a web form or by downloading and installing hostile code that searches their computers or monitors their online activities.

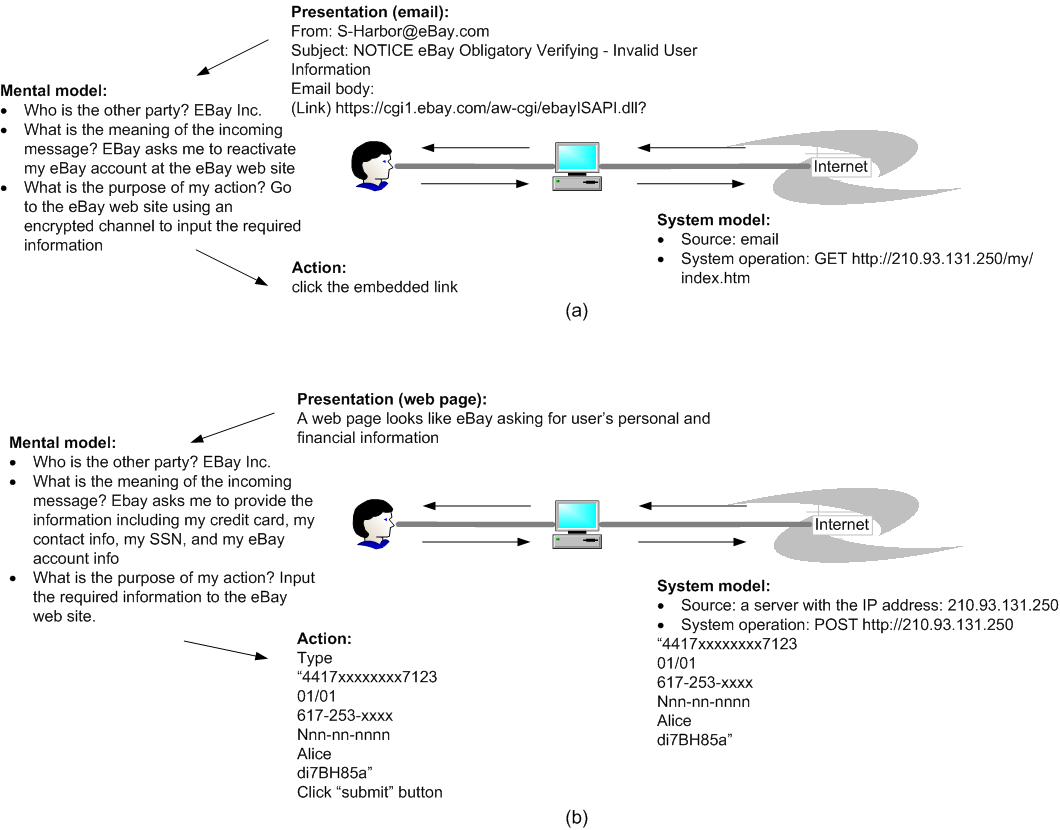

Phishing is a semantic attack. Successful phishing depends on a discrepancy between the way a user perceives a communication, like an email message or a web page, and the actual effect of the communication. The system model is concerned with how computers exchange bits: protocols, representations, and software. When human users play a role in the communication, however, understanding and protecting the system model is not enough, since the real message communicated depends not on the bits exchanged but on the semantic meanings that are derived from the bits. This semantic layer is the user's mental model. The effectiveness of phishing indicates that human users do not always assign the proper semantic meaning to their online interactions. We have to shrink the gap between the user's mental model and the system model.

Approach

We propose to use a detection and visualization chain to help keep users from being fooled by phishing messages.

Source Analysis

When a message arrives at the user's computer, we can subject it to source analysis. The first step that a hacker takes when preparing to attack a system is footprinting (gathering information to construct a profile of the target). We can use the same idea in reverse to create a complete profile of the remote party's reputation. Possible data sources for this profile include the WHOIS database, the site's SSL certificate, catalog information from dmoz.org or Yahoo! Directory, link popularity and neighborhood from Google, and references from trusted third parties, like bbb.org or truste.org. The challenge in this part is how to automatically and efficiently retrieve, parse, and cross-validate information from different sources.
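The cross-validation step can be illustrated with a minimal sketch. It assumes the retrieval and parsing have already happened and only checks whether the sources agree. The `SourceProfile` record, its field names, and the 180-day age threshold are hypothetical choices made for this illustration; they are not part of the proposed system.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class SourceProfile:
    """Hypothetical record of what each data source reported about a site."""
    domain: str
    whois_org: Optional[str] = None        # registrant organization from WHOIS
    cert_org: Optional[str] = None         # organization named in the SSL certificate
    directory_org: Optional[str] = None    # organization listed in a web directory
    domain_age_days: Optional[int] = None  # days since the domain was registered


def _normalize(name: str) -> str:
    """Lowercase and collapse whitespace so trivial differences are ignored."""
    return " ".join(name.lower().split())


def cross_validate(profile: SourceProfile, min_age_days: int = 180) -> List[str]:
    """Return human-readable inconsistencies found in the profile.

    An empty list means the available sources agree with one another.
    The min_age_days threshold is an assumed value, not a measured one.
    """
    issues = []
    names = {
        label: _normalize(value)
        for label, value in (
            ("WHOIS", profile.whois_org),
            ("certificate", profile.cert_org),
            ("directory", profile.directory_org),
        )
        if value
    }
    if len(names) < 2:
        issues.append("fewer than two sources identify the site's owner")
    elif len(set(names.values())) > 1:
        detail = ", ".join(f"{k}={v!r}" for k, v in sorted(names.items()))
        issues.append("sources disagree on the owner: " + detail)
    if profile.domain_age_days is not None and profile.domain_age_days < min_age_days:
        issues.append(f"domain registered only {profile.domain_age_days} days ago")
    return issues
```

A profile whose sources all name the same organization and whose domain is old yields an empty list. A recently registered domain whose WHOIS record and certificate name different organizations yields two issues.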

Presentation Cues

After the system obtains a profile of the remote server, the browser needs to present this information to the user. Many browser toolbars have been implemented that inform users, directly or indirectly, whether the current site is a phishing site. Examples include SpoofStick, Netcraft toolbar, Trustbar, eBay Account Guard, and SpoofGuard. The information displayed by these toolbars can be categorized into three types:

- Neutral information about the current website, such as the real domain name, when the domain was registered, and where it is hosted;

- Positive information that can only distinguish secure web pages (or sites) from other pages (or sites);

- A system decision about how likely it is that the website is phishing.

We hypothesize that these toolbars do not effectively prevent phishing attacks, for three possible reasons:

- Users may not notice the indicators since their display is outside the user's locus of attention;

- Users may not care about the displayed information, since the information is about security, which is always a secondary goal when users have a job that needs to be done;

- Users may not understand or believe the indicators, since the Internet is well-known for heterogeneity (even under safe circumstances, web sites display odd, inconsistent behavior and bugs) and heuristic algorithms like spam filters are well-known for false positives.

We conducted a user study to test the effectiveness of three abstract security toolbars, displaying neutral information, positive information, and system decisions, respectively. We found that if the website's appearance, which is totally controlled by the attacker, looks good or not risky, many users (more than 20%) do not believe or take seriously the toolbar's message about phishing attacks, regardless of which toolbar they are using.

As a result, we need a new way to display security information. Content-integrated visual cues are one possibility we are exploring. If the web content is the dominant factor guiding the user's decision, we can degrade the content when the system thinks that a site is questionable, for example by adding a watermark or blurring the page. Moreover, content-integrated visual cues can bring warnings directly to the user's attention at the appropriate time, with enough space for detailed explanations.

Deriving Intentions from Actions

When the user performs some action, such as filling in a form on a web page, the system happily and perfectly encodes the user's action and sends it back to the remote server. But it does not attempt to derive the user's intention for this action. As a result, the system loses an opportunity to understand the user's mental model, and hence to provide some additional security.

Since most current phishing attacks deceive users into submitting their personal data through web forms, monitoring and analyzing entries into a web form can identify the data type of the user's input, whether it is a password, a credit card number, or simply an insensitive search term. Moreover, the user interface can be improved so that different intentions are tightly bound to different actions that the system can distinguish. For example, users might be able to type insensitive data into web forms, but sensitive data would require dragging and dropping a vCard, virtual credit card, or bank card onto the form.
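As an illustration of identifying the data type of a form entry, the sketch below labels an input as a password, a credit card number, or insensitive data. It is a simplified sketch under stated assumptions: the function names and the three labels are hypothetical, passwords are recognized only by the form field's declared type, and card numbers are recognized by length plus the standard Luhn checksum. A real monitor would need more signals than these.

```python
import re


def luhn_valid(digits: str) -> bool:
    """Check a string of ASCII digits against the Luhn checksum."""
    total = 0
    # Walk from the rightmost digit; double every second digit.
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def classify_input(field_type: str, value: str) -> str:
    """Return 'password', 'credit_card', or 'insensitive' for a form entry.

    field_type is the declared type of the form field (for example the
    HTML input type); value is what the user typed.
    """
    if field_type.lower() == "password":
        return "password"
    # Card numbers are often typed with spaces or hyphens between groups.
    digits = re.sub(r"[ -]", "", value)
    if re.fullmatch(r"[0-9]{13,19}", digits) and luhn_valid(digits):
        return "credit_card"
    return "insensitive"
```

For example, `classify_input("text", "4111 1111 1111 1111")` returns `"credit_card"` because that widely used test number passes the Luhn check, while a search term such as `"weather boston"` is labeled `"insensitive"`.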

If the system still fails to derive the user's intention from what the user types, it can always ask. Consider what you do when you want to visit an organization's web site but do not know the exact URL: you type the organization's name into Google and hope that the intended URL appears in the first 10 results. We propose to use the same idea here. When the browser cannot derive the user's intention, it can display a dialog box asking the user: "Where do you expect this information to be sent?" Based on the answer, the browser can decide whether to send it to Google directly as a search query or to pre-process it first with common knowledge or context information. In this way, Google translates the user's natural language into a URL that the system can understand. Finally, the system can use the user's intention as a "semantic sandbox" to guide its operation.
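One way to picture the "semantic sandbox" check is sketched below. The sketch assumes the search step has already produced a list of result URLs for the user's answer and only compares the form's destination against them. The function names are hypothetical, and the subdomain matching is a deliberate simplification: it treats any host under a result's host as acceptable, which a real implementation would have to refine.

```python
from typing import Iterable
from urllib.parse import urlsplit


def host_of(url: str) -> str:
    """Return the lowercase host name of a URL, or '' if it has none."""
    return (urlsplit(url).hostname or "").lower()


def within_sandbox(form_action_url: str, intended_urls: Iterable[str]) -> bool:
    """Return True if the form submits to a host the user intended.

    intended_urls stands in for the search results obtained from the
    user's answer to "Where do you expect this information to be sent?".
    """
    target = host_of(form_action_url)
    if not target:
        return False
    for url in intended_urls:
        allowed = host_of(url)
        # Treat www.example.com and example.com as the same site.
        if allowed.startswith("www."):
            allowed = allowed[4:]
        if allowed and (target == allowed or target.endswith("." + allowed)):
            return True
    return False
```

With `["http://www.ebay.com/"]` as the intended results, a form posting to `https://signin.ebay.com/login` falls inside the sandbox, while one posting to `http://ebay.com.evil.example/login` does not, even though its host name begins with the expected text.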

Evaluation

The anti-phishing detection and visualization chain will be evaluated in two ways. On the one hand, the chain should effectively prevent users from being deceived by real phishing messages. We can evaluate the effectiveness through controlled user studies and field studies, using both known phishing attacks from APWG archives and new phishing attacks with novel tricks invented by us.

On the other hand, the anti-phishing chain should not disrupt the user's normal online activities. We can evaluate the degree of disruption by measuring the error rate of the detection methods on legitimate messages from sources without an established reputation, the rate at which the visual cues distract the user from the primary task, and the user's subjective satisfaction.
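The two quantitative measures can be computed from labeled trial outcomes, as in the minimal sketch below. The `evaluation_rates` function and the `(is_phishing, flagged)` trial format are assumptions made for illustration, not the study's actual protocol.

```python
from typing import Iterable, Optional, Tuple


def evaluation_rates(
    trials: Iterable[Tuple[bool, bool]],
) -> Tuple[Optional[float], Optional[float]]:
    """Compute (detection_rate, false_alarm_rate) from labeled trials.

    Each trial is (is_phishing, flagged): whether the message really was
    a phishing attack, and whether the chain warned about it. A rate is
    None when there are no trials of the corresponding kind.
    """
    trials = list(trials)
    phishing = [flagged for is_phishing, flagged in trials if is_phishing]
    legitimate = [flagged for is_phishing, flagged in trials if not is_phishing]
    detection_rate = sum(phishing) / len(phishing) if phishing else None
    false_alarm_rate = sum(legitimate) / len(legitimate) if legitimate else None
    return detection_rate, false_alarm_rate
```

A high detection rate speaks to effectiveness, while the false alarm rate on legitimate messages is one measure of disruption. Distraction and subjective satisfaction would still need to be measured separately in the user studies.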

References

[1] Min Wu. "Fighting Phishing at the User Interface." PhD Thesis proposal, December 2004.

The Stata Center, Building 32 - 32 Vassar Street - Cambridge, MA 02139 - USA tel:+1-617-253-0073 - publications@csail.mit.edu (Note: On July 1, 2003, the AI Lab and LCS merged to form CSAIL.)