Comparative Analysis of Decomposition Methods and Identification Algorithms for Emotion Recognition

James P. Skelley & Bernd Heisele

Intelligent man-machine interfaces that can react to users' facial expressions have boosted computer vision research in automated facial expression recognition. In a learning-based approach, a classifier must be trained that recognizes many emotions from only a small training corpus, and does so regardless of the identity of the subject. Image variations, such as changes in illumination or in the subject's pose, complicate this task. Two methods permit emotion recognition that is independent of identity and robust to such variations among the images of the training corpus: optical flow based morphing techniques and techniques based on multi-value tensor decomposition. To determine which of the two better serves the goals of emotion recognition, their relative effectiveness will be compared.

The dataset consists of six "played" emotions chosen to span the widest range of possible expressions (happy, surprise, fear, sadness, disgust, anger), together with a neutral expression, and six similarly dispersed "natural" expressions (smile, laugh, shock, dislike, puzzlement, indescribable). Each expression was recorded from twelve subjects who vary in physical appearance.
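As a minimal sketch of how this corpus could be arranged into a data tensor with identity, expression and pixel modes (the kind of tensor D that Equation 1 decomposes), the snippet below counts images per set. It assumes one image per subject and expression and treats the played and natural sets as separate tensors; the labels come from the text, while the function names and the 64 x 64 pixel count are hypothetical.

```python
# Labels as listed in the text; one image per (subject, expression) pair is an
# assumption, and all names here are illustrative only.
PLAYED = ["neutral", "happy", "surprise", "fear", "sadness", "disgust", "anger"]
NATURAL = ["smile", "laugh", "shock", "dislike", "puzzlement", "indescribable"]
NUM_SUBJECTS = 12


def image_count(num_subjects, labels):
    """Number of images if every subject performs every expression once."""
    return num_subjects * len(labels)


def tensor_shape(num_subjects, labels, num_pixels):
    """Shape of a data tensor with identity, expression and pixel modes."""
    return (num_subjects, len(labels), num_pixels)


for name, labels in (("played", PLAYED), ("natural", NATURAL)):
    # num_pixels = 64 * 64 is a hypothetical image size
    print(name, image_count(NUM_SUBJECTS, labels),
          tensor_shape(NUM_SUBJECTS, labels, 64 * 64))
# played 84 (12, 7, 4096)
# natural 72 (12, 6, 4096)
```

If several images per subject and expression were recorded, the counts scale accordingly and an additional mode could be introduced.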

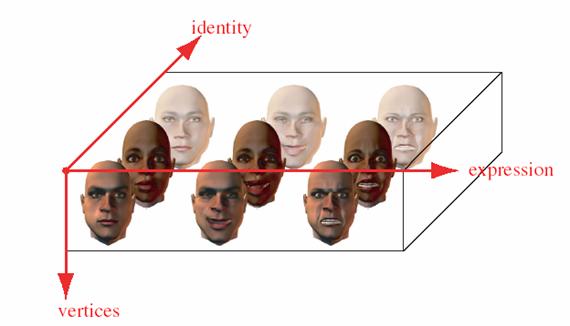

Raw images are preprocessed before the respective algorithms are trained and tested on them [6]. As a baseline for comparison, a component-based system for face identification [2] will first be used to perform emotion recognition; this system will be trained on expressions rather than on identities. The multi-value tensor decomposition method (Fig. 2) [3, 4] and the optical flow method (Fig. 1) will then be compared against this component-based baseline.

Fig. 1. Optical Flow Design (courtesy of [1])

Fig. 2. Ideal Value Decomposition (courtesy of [3])

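$$\mathcal{D} = \mathcal{Z} \times_1 \mathbf{U}_1 \times_2 \mathbf{U}_2 \cdots \times_N \mathbf{U}_N$$

Equation 1: Multi-value Tensor Decomposition (courtesy of [4])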

Equation 1 shows how the algorithms described in [4] use N-mode tensor decomposition to factor a facial image data tensor (D) into a core tensor (Z) and N feature-based mode matrices (the U's). Ideally, this factorization separates identity information from emotion information, as illustrated in Fig. 2. The contents of the image tensor depend on how the optical flows are computed: relative to a single neutral image (as in Fig. 1) or relative to a set of neutral images (the third method to be explored).
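The N-mode decomposition described above can be sketched in NumPy as an N-mode SVD (higher-order SVD) in the spirit of [4]: each mode matrix U_n is taken as the left singular vectors of the mode-n unfolding of D, and the core tensor is Z = D x_1 U_1^T x_2 U_2^T ... x_N U_N^T. This is a minimal illustration under those assumptions, not the authors' implementation; the function names are hypothetical and a random tensor stands in for real image or optical flow data.

```python
import numpy as np


def unfold(tensor, mode):
    """Mode-n unfolding: move axis `mode` to the front and flatten the rest."""
    return np.moveaxis(tensor, mode, 0).reshape(tensor.shape[mode], -1)


def mode_n_product(tensor, matrix, mode):
    """Mode-n product tensor x_mode matrix (multiplies every mode-n fiber)."""
    # tensordot contracts the matrix's columns with axis `mode` of the tensor
    # and puts the resulting axis first; moveaxis returns it to position `mode`.
    result = np.tensordot(matrix, tensor, axes=(1, mode))
    return np.moveaxis(result, 0, mode)


def n_mode_svd(data):
    """Return (Z, [U_1, ..., U_N]) such that D = Z x_1 U_1 x_2 U_2 ... x_N U_N."""
    # U_n: left singular vectors of the mode-n unfolding of D
    mode_matrices = [
        np.linalg.svd(unfold(data, mode), full_matrices=False)[0]
        for mode in range(data.ndim)
    ]
    # Z = D x_1 U_1^T x_2 U_2^T ... x_N U_N^T
    core = data
    for mode, u in enumerate(mode_matrices):
        core = mode_n_product(core, u.T, mode)
    return core, mode_matrices


def reconstruct(core, mode_matrices):
    """Evaluate Equation 1: multiply the core tensor by every mode matrix."""
    result = core
    for mode, u in enumerate(mode_matrices):
        result = mode_n_product(result, u, mode)
    return result


# Random stand-in for D: 12 subjects x 7 expressions x 100 pixels (hypothetical)
rng = np.random.default_rng(0)
data = rng.standard_normal((12, 7, 100))
core, mode_matrices = n_mode_svd(data)
u_identity, u_expression, u_pixels = mode_matrices
print(core.shape)                                           # (12, 7, 84)
print(np.allclose(reconstruct(core, mode_matrices), data))  # True
```

Row i of `u_expression` is a coefficient vector for expression i, and row j of `u_identity` one for subject j; working with these mode matrices separately is what allows identity and expression to be treated independently. The pixel mode is truncated to 84 columns here simply because the mode-3 unfolding has only 12 x 7 = 84 columns.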

Once the systems have been constructed, they will be compared quantitatively in terms of their training methods and their recognition rates (the percentage of expressions recognized correctly).
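One way to make such a recognition percentage identity-independent is leave-one-subject-out evaluation: each subject is held out in turn, the classifier is trained on the remaining subjects, and the share of correctly labeled held-out images is reported. The text does not specify this protocol, so the sketch below is an assumption; it uses a nearest-neighbour classifier as a hypothetical stand-in for any of the three systems, and `Sample` and the function names are illustrative.

```python
import math
from collections import namedtuple

# One image: which subject is shown, which expression, and its feature vector.
Sample = namedtuple("Sample", ["subject", "expression", "features"])


def nearest_neighbour(train, features):
    """Placeholder classifier: expression label of the closest training sample."""
    closest = min(train, key=lambda s: math.dist(s.features, features))
    return closest.expression


def leave_one_subject_out_rate(samples, classify=nearest_neighbour):
    """Percentage of correctly recognized expressions when each subject is
    held out in turn, so training and test identities never overlap."""
    correct = total = 0
    for held_out in sorted({s.subject for s in samples}):
        train = [s for s in samples if s.subject != held_out]
        test = [s for s in samples if s.subject == held_out]
        for sample in test:
            correct += classify(train, sample.features) == sample.expression
            total += 1
    return 100.0 * correct / total


samples = [
    Sample(0, "happy", (1.0, 0.0)), Sample(0, "anger", (0.0, 1.0)),
    Sample(1, "happy", (0.9, 0.1)), Sample(1, "anger", (0.2, 0.8)),
]
print(leave_one_subject_out_rate(samples))  # 100.0
```

Passing a different `classify` function lets the same protocol score each system, so the resulting percentages are directly comparable.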

Acknowledgments

This report describes research done at the Center for Biological & Computational Learning, which is in the McGovern Institute for Brain Research at MIT, as well as in the Dept. of Brain & Cognitive Sciences, and which is affiliated with the Computer Sciences & Artificial Intelligence Laboratory (CSAIL).

This research was sponsored by grants from: Office of Naval Research (DARPA) Contract No. MDA972-04-1-0037, Office of Naval Research (DARPA) Contract No. N00014-02-1-0915, National Science Foundation (ITR/SYS) Contract No. IIS-0112991, National Science Foundation (ITR) Contract No. IIS-0209289, National Science Foundation-NIH (CRCNS) Contract No. EIA-0218693, National Science Foundation-NIH (CRCNS) Contract No. EIA-0218506, and National Institutes of Health (Conte) Contract No. 1 P20 MH66239-01A1.

Additional support was provided by: Central Research Institute of Electric Power Industry (CRIEPI), Daimler-Chrysler AG, Compaq/Digital Equipment Corporation, Eastman Kodak Company, Honda R&D Co., Ltd., Industrial Technology Research Institute (ITRI), Komatsu Ltd., Eugene McDermott Foundation, Merrill-Lynch, NEC Fund, Oxygen, Siemens Corporate Research, Inc., Sony, Sumitomo Metal Industries, and Toyota Motor Corporation.

References

[1] B. Abboud, F. Davoine, and M. Dang. Facial Expression Recognition and Synthesis Based on an Appearance Model. Signal Processing: Image Communication, Volume 19, pages 723-740, 2004.

[2] B. Weyrauch, J. Huang, B. Heisele, and V. Blanz. Component-based Face Recognition with 3D Morphable Models. First IEEE Workshop on Face Processing in Video, Washington, D.C., 2004.

[3] J. Popovic and D. Vlasic. Face Transfer with Multilinear Models. To appear in IEEE Conference for Computer Vision.

[4] M. A. O. Vasilescu and D. Terzopoulos. Multilinear Analysis of Image Ensembles: TensorFaces. In Proceedings of the European Conference on Computer Vision, Copenhagen, Denmark, May 2002.

[5] M. A. O. Vasilescu and D. Terzopoulos. Multilinear Image Analysis for Facial Recognition. In Proceedings of the International Conference on Pattern Recognition, Quebec, Canada, August 2002.

[6] R. Fischer. Automatic Facial Expression Analysis and Emotional Classification. Diploma thesis.

The Stata Center, Building 32 - 32 Vassar Street - Cambridge, MA 02139 - USA tel:+1-617-253-0073 - publications@csail.mit.edu (Note: On July 1, 2003, the AI Lab and LCS merged to form CSAIL.)