Visual Hand Tracking Using Occlusion Compensated Message Passing

Erik B. Sudderth, Michael I. Mandel, William T. Freeman & Alan S. Willsky

Introduction and Motivation

Accurate visual detection and tracking of articulated objects is a challenging problem with applications in human-computer interfaces, motion capture, and scene understanding. We have developed probabilistic methods for tracking the three-dimensional (3D) motion of unmarked hands from image sequences [1,2]. Even coarse models of the hand's geometry have 26 continuous degrees of freedom, making brute force search over all possible 3D poses intractable. Although there are dependencies among the hand's joint angles, they have a complex structure which, except in special cases, is not well captured by simple global dimensionality reduction techniques.

Visual tracking problems are further complicated by the projections inherent in the imaging process. Videos of hand motion typically show a large amount of self-occlusion, in which some fingers partially obscure other parts of the hand. These situations make it difficult to locally match hand parts to image features, since the global hand pose determines which local edge and color cues should be expected for each finger. Furthermore, because the appearance of different fingers is typically very similar, accurate association of hand components to image cues is only possible through global geometric reasoning.

To overcome these difficulties, existing hand trackers typically use restricted models which severely limit the hand's motion [3-6]. We instead use a graphical model to specify the statistical structure underlying the hand's kinematics and imaging. Our model is based on a redundant local representation in which each hand component is described by its own 3D position and orientation. Because the hand's kinematic constraints take a simple form in this local representation, we may use an inference algorithm known as nonparametric belief propagation (NBP) [7] to develop a tracker which efficiently exploits this kinematic structure. Our tracker also uses a novel local decomposition of the likelihood function to properly handle self-occlusions in a distributed fashion.

For other work applying graphical models to visual tracking, see [8-11].

Geometric Model

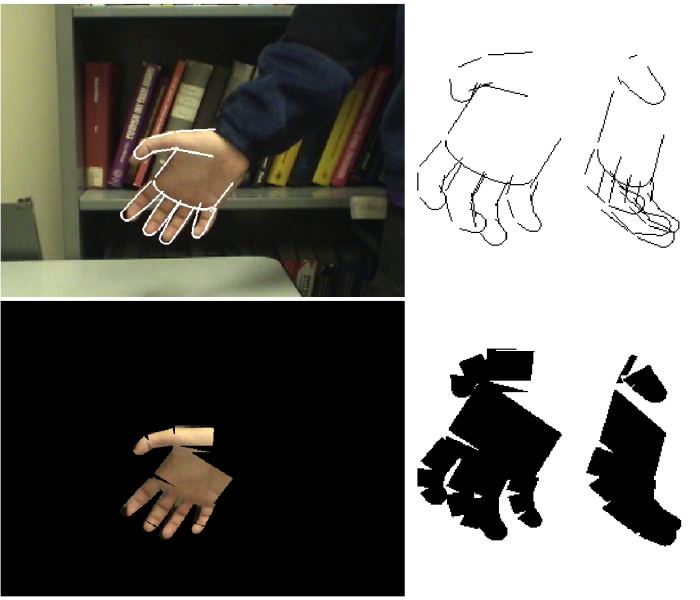

Structurally, the hand is composed of sixteen approximately rigid components: three links for each finger and thumb, as well as the palm. We model each rigid body by one or more truncated quadrics (ellipsoids, cones, and cylinders) of fixed size [6]. As the goal of this model is estimation, not visualization, we only model the coarse structural features which are most relevant for the tracking problem. The following image shows the edges (top) and silhouettes (bottom) for a grasping hand pose, viewed both in the original image and after rotation by 35 and 70 degrees about the vertical axis:

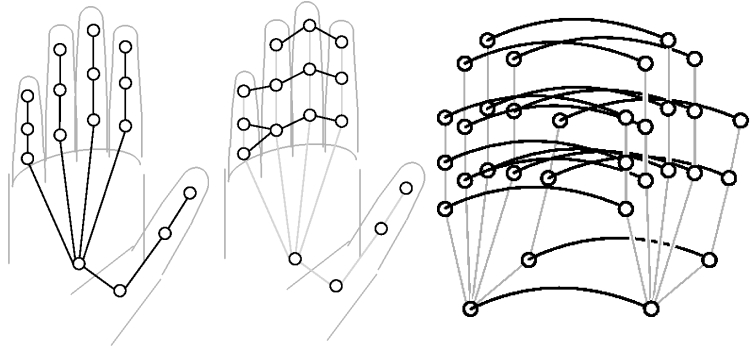
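The truncated quadrics mentioned above can be illustrated with a short sketch. It writes each quadric in homogeneous form, so that a point X = (x, y, z, 1) lies inside the shape when X^T Q X ≤ 0, and then clips it between two planes. It assumes each quadric is axis-aligned in its component's local frame and truncated perpendicular to the local z axis. The helper names and the dimensions in the tests are hypothetical, since the text does not give the actual shapes or sizes used [6].

```python
import numpy as np

def ellipsoid_quadric(a, b, c):
    """Axis-aligned ellipsoid with semi-axes a, b, c: X^T Q X <= 0 inside."""
    return np.diag([1.0 / a**2, 1.0 / b**2, 1.0 / c**2, -1.0])

def cylinder_quadric(r):
    """Infinite cylinder of radius r around the local z axis."""
    return np.diag([1.0 / r**2, 1.0 / r**2, 0.0, -1.0])

def cone_quadric(k):
    """Double cone x^2 + y^2 <= (k z)^2 around the local z axis."""
    return np.diag([1.0, 1.0, -k**2, 0.0])

def inside_truncated_quadric(Q, points, z_min, z_max):
    """Boolean mask: which (N, 3) local-frame points lie inside Q and between the two planes."""
    pts = np.atleast_2d(np.asarray(points, dtype=float))
    X = np.hstack([pts, np.ones((len(pts), 1))])   # homogeneous coordinates
    quad = np.einsum("ni,ij,nj->n", X, Q, X)       # X^T Q X for every point
    in_slab = (pts[:, 2] >= z_min) & (pts[:, 2] <= z_max)
    return (quad <= 0) & in_slab
```

Truncating a cone with z_min > 0 keeps only one nappe, which gives a tapered finger segment; a cylinder truncated at both ends gives a link of fixed length.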

We represent the pose of each hand component by its 3D position and orientation (a unit quaternion). A graphical model is then used to describe the various physical constraints on the hand's motion:

The left graph describes the kinematic constraints imposed by the revolute joints connecting different hand components. The middle graph imposes additional structural constraints corresponding to the fact that two fingers cannot occupy the same 3D volume. Finally, the right graph describes the dynamical structure relating hand poses at successive video frames. The overall graphical prior model is then the union of these kinematic, structural, and dynamic constraints. For more details on this probabilistic model, see [1,2].
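The local representation and the three kinds of edges can be sketched as follows. Each node carries a 3D position and a unit quaternion, and the edge lists mirror the kinematic, structural, and dynamic graphs. The node names are hypothetical. The structural pairing (every link of a finger connected to every link of the neighboring finger) is an assumption made for illustration; the text only states that two fingers cannot occupy the same volume, so see [1,2] for the actual graph.

```python
import numpy as np
from itertools import product

def quat_to_rotmat(q):
    """Rotation matrix for a quaternion q = (w, x, y, z); q is normalized first."""
    w, x, y, z = np.asarray(q, dtype=float) / np.linalg.norm(q)
    return np.array([
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ])

class ComponentPose:
    """One node's state: 3D position plus unit quaternion (6 degrees of freedom)."""
    def __init__(self, position, quaternion):
        self.position = np.asarray(position, dtype=float)
        self.quaternion = np.asarray(quaternion, dtype=float) / np.linalg.norm(quaternion)

    def to_world(self, local_points):
        """Map (N, 3) points from the component's local frame into world coordinates."""
        return np.atleast_2d(local_points) @ quat_to_rotmat(self.quaternion).T + self.position

FINGERS = ["thumb", "index", "middle", "ring", "little"]
LINKS = 3  # three links for each finger and the thumb

def hand_nodes():
    """Sixteen rigid components: the palm plus three links per finger and thumb."""
    return ["palm"] + [f"{f}{k}" for f in FINGERS for k in range(LINKS)]

def kinematic_edges():
    """Revolute-joint edges: palm to each base link, then each link to the next."""
    edges = []
    for f in FINGERS:
        edges.append(("palm", f"{f}0"))
        edges += [(f"{f}{k}", f"{f}{k + 1}") for k in range(LINKS - 1)]
    return edges

def structural_edges():
    """Non-overlap edges between all links of neighboring fingers (ASSUMED pairing)."""
    edges = []
    for f, g in zip(FINGERS, FINGERS[1:]):
        edges += [(f"{f}{i}", f"{g}{j}") for i, j in product(range(LINKS), repeat=2)]
    return edges

def prior_edges(t):
    """Union of kinematic and structural edges within frame t, plus temporal edges into t."""
    within = [((a, t), (b, t)) for a, b in kinematic_edges() + structural_edges()]
    dynamic = [((n, t - 1), (n, t)) for n in hand_nodes()]
    return within + dynamic
```

The kinematic edges alone form a tree (16 nodes, 15 edges); the structural and temporal edges add cycles to the graph. Note that q and -q describe the same rotation, which `quat_to_rotmat` reflects.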

Imaging Model

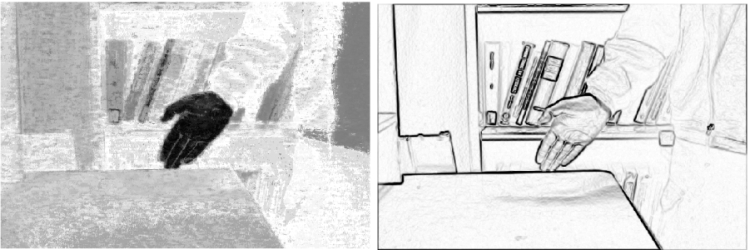

Our hand tracking system is based on a set of efficiently computed edge and color cues. Skin-colored pixels have predictable statistics, which we model using a histogram distribution estimated from training patches [12]. We also estimate a similar histogram for the color distribution of background regions. Then, assuming pixels are independent, we may efficiently compute the likelihood of any 3D pose by determining the corresponding silhouette and summing the skin color evidence across all pixels in this silhouette. The left image above shows an example of the likelihood ratios corresponding to this skin color cue (darker pixels are more likely to be skin).
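Why only silhouette pixels matter can be made explicit. If, as an assumption of this sketch, pixels inside the silhouette S are modeled by the skin histogram and all others by the background histogram, independence gives p(image | pose) = [product over all pixels of p_bg(c)] × [product over pixels in S of p_skin(c) / p_bg(c)]. The first factor does not depend on the pose, so the log-likelihood is, up to a constant, the sum of log-ratios over S. The sketch below assumes 8-bit RGB images, a hypothetical 16 bins per channel, and a silhouette mask that has already been rendered from the pose.

```python
import numpy as np

def color_histogram(pixels, bins=16, eps=1e-6):
    """Normalized RGB histogram (bins^3 cells) from (N, 3) 8-bit training pixels; bins must divide 256."""
    idx = np.asarray(pixels, dtype=int) // (256 // bins)
    hist = np.full((bins, bins, bins), eps)  # small floor so unseen colors avoid log(0)
    np.add.at(hist, (idx[:, 0], idx[:, 1], idx[:, 2]), 1.0)
    return hist / hist.sum()

def skin_log_ratio_image(image, skin_hist, bg_hist):
    """Per-pixel log p(color | skin) - log p(color | background) for an (H, W, 3) image."""
    bins = skin_hist.shape[0]
    idx = np.asarray(image, dtype=int) // (256 // bins)
    r, g, b = idx[..., 0], idx[..., 1], idx[..., 2]
    return np.log(skin_hist[r, g, b]) - np.log(bg_hist[r, g, b])

def skin_log_likelihood(log_ratio, silhouette):
    """Pose log-likelihood up to a pose-independent constant: sum over silhouette pixels."""
    return float(log_ratio[silhouette].sum())
```

The log-ratio image depends only on the frame, so it can be computed once and reused for every candidate pose; each pose then costs a single masked sum.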

Another hand tracking cue comes from intensity gradients typically found along boundaries between the hand model and the background scene. As with the skin cue, we build histogram models for the responses of derivative-of-Gaussian filters along the hand's boundary. The likelihood of a 3D pose is then determined by summing edge evidence (right image above) across all boundary pixels.
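The edge cue can be sketched in the same way. The code below computes derivative-of-Gaussian gradient magnitudes with separable 1D kernels and then sums histogram log-ratios over the pixels of a boundary mask. The filter scale, the binning, and the use of an "edge" versus "background" histogram pair are assumptions for illustration; the text does not specify how the histograms were binned or trained.

```python
import numpy as np

def dog_kernels(sigma=1.0):
    """Sampled 1D Gaussian g and its derivative dg on [-3*sigma, 3*sigma]."""
    r = int(np.ceil(3 * sigma))
    x = np.arange(-r, r + 1, dtype=float)
    g = np.exp(-x**2 / (2 * sigma**2))
    g /= g.sum()
    return g, -x / sigma**2 * g

def _convolve_axis(a, kernel, axis):
    """Convolve every 1D slice of `a` along `axis` with `kernel` (zero padding at borders)."""
    return np.apply_along_axis(lambda v: np.convolve(v, kernel, mode="same"), axis, a)

def gradient_magnitude(image, sigma=1.0):
    """Magnitude of derivative-of-Gaussian responses for a 2D grayscale image."""
    g, dg = dog_kernels(sigma)
    img = np.asarray(image, dtype=float)
    gx = _convolve_axis(_convolve_axis(img, dg, 1), g, 0)  # differentiate along x, smooth along y
    gy = _convolve_axis(_convolve_axis(img, dg, 0), g, 1)  # differentiate along y, smooth along x
    return np.hypot(gx, gy)

def edge_log_likelihood(grad_mag, boundary, edge_hist, bg_hist, bin_edges):
    """Sum over boundary pixels of log p(response | edge) - log p(response | background)."""
    idx = np.digitize(grad_mag[boundary], bin_edges) - 1
    idx = np.clip(idx, 0, len(edge_hist) - 1)  # out-of-range responses go to the end bins
    return float(np.sum(np.log(edge_hist[idx]) - np.log(bg_hist[idx])))
```

As with the skin cue, the filter responses depend only on the frame, so the per-pose work reduces to looking up histogram bins along the projected boundary.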

Tracking Using Nonparametric Belief Propagation

We develop our hand tracker using belief propagation (BP) [13], a method for solving inference problems via local message-passing. Because the pose of each rigid body is a six-dimensional continuous variable, the discretization usually used to implement BP is intractable. Instead, we employ nonparametric, particle-based approximations to BP messages using the nonparametric belief propagation (NBP) algorithm [7].
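A quick count shows why a grid over the six-dimensional pose is impractical. In discrete BP, each message sums over every sender state for each receiver state, so one message costs (number of states)^2 operations. The sketch assumes a hypothetical resolution of 10 bins per dimension and counts only the 15 kinematic edges, each carrying a message in both directions.

```python
def discretized_bp_cost(bins_per_dim, dims=6, num_edges=15):
    """Return (states per node, operations for one BP sweep) on a discretized pose grid.

    Each message needs states**2 work (every sender state for every receiver state),
    and every edge carries one message in each direction.
    """
    states = bins_per_dim ** dims
    return states, 2 * num_edges * states ** 2
```

Even at this coarse resolution, `discretized_bp_cost(10)` gives one million states per component and about 3 × 10^13 operations per sweep, before adding structural or temporal edges.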

In NBP, most messages are represented by kernel density estimates, which use Gaussian kernels to interpolate between samples drawn from the underlying densities. Monte Carlo methods adapted to the structure of the hand model potentials are then used to stochastically approximate the true BP message updates [1]. By introducing auxiliary variables to model the occlusion process, and then analytically averaging over these variables, we can also efficiently account for self-occlusions while retaining NBP's distributed structure [2].
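A minimal sketch of the kernel density estimates that represent NBP messages follows. It evaluates a weighted mixture of Gaussian kernels centered on samples and draws new samples by picking a kernel and adding noise. This is only the representation; the Monte Carlo message updates of [1,7] and the occlusion averaging of [2] are not shown. The class name and the shared diagonal bandwidth are assumptions, and the sketch treats every coordinate as Euclidean, which glosses over the unit-quaternion part of a pose.

```python
import numpy as np

class GaussianKDE:
    """Weighted Gaussian kernel density estimate with a shared diagonal bandwidth."""

    def __init__(self, samples, bandwidth, weights=None):
        self.samples = np.asarray(samples, dtype=float)  # shape (N, d)
        n, d = self.samples.shape
        self.bandwidth = np.broadcast_to(np.asarray(bandwidth, dtype=float), (d,))
        w = np.ones(n) if weights is None else np.asarray(weights, dtype=float)
        self.weights = w / w.sum()

    def evaluate(self, x):
        """Density at query points x of shape (M, d)."""
        x = np.atleast_2d(np.asarray(x, dtype=float))
        z = (x[:, None, :] - self.samples[None, :, :]) / self.bandwidth  # (M, N, d)
        norm = np.prod(np.sqrt(2 * np.pi) * self.bandwidth)
        kernels = np.exp(-0.5 * np.sum(z**2, axis=-1)) / norm            # (M, N)
        return kernels @ self.weights

    def sample(self, n, rng):
        """Draw n samples: choose kernels by weight, then add Gaussian kernel noise."""
        idx = rng.choice(len(self.weights), size=n, p=self.weights)
        noise = rng.standard_normal((n, self.samples.shape[1])) * self.bandwidth
        return self.samples[idx] + noise
```

For example, `GaussianKDE(np.zeros((2, 1)), 1.0).sample(5000, np.random.default_rng(0))` returns a (5000, 1) array of draws from a unit Gaussian centered at zero.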

The following images show tracking results, in which the modes of our 3D hand pose estimates have been projected into the image plane, with intensity proportional to their likelihood. For videos showing additional results, see the NBP web page. Investigations of more complex motion sequences, and methods for learning richer dynamical and appearance models, are ongoing.

References

[1] E. B. Sudderth, M. I. Mandel, W. T. Freeman, and A. S. Willsky. Visual Hand Tracking Using Nonparametric Belief Propagation. In CVPR Workshop on Generative Model Based Vision, June 2004.

[2] E. B. Sudderth, M. I. Mandel, W. T. Freeman, and A. S. Willsky. Distributed Occlusion Reasoning for Tracking with Nonparametric Belief Propagation. In Neural Information Processing Systems, Dec. 2004.

[3] J. M. Rehg and T. Kanade. DigitEyes: Vision-Based Hand Tracking for Human-Computer Interaction. In Proc. IEEE Workshop on Non-Rigid and Articulated Objects, 1994.

[4] J. MacCormick and M. Isard. Partitioned Sampling, Articulated Objects, and Interface-Quality Hand Tracking. In European Conference on Computer Vision, vol. 2, pp. 3-19, 2000.

[5] Y. Wu, J. Y. Lin, and T. S. Huang. Capturing Natural Hand Articulation. In International Conf. on Computer Vision, 2001.

[6] B. Stenger, A. Thayananthan, P. H. S. Torr, and R. Cipolla. Filtering Using a Tree-Based Estimator. In International Conf. on Computer Vision, pp. 1063-1070, 2003.

[7] E. B. Sudderth, A. T. Ihler, W. T. Freeman, and A. S. Willsky. Nonparametric Belief Propagation. In IEEE Conf. on Computer Vision and Pattern Recognition, vol. 1, pp. 605-612, 2003.

[8] D. Ramanan and D. A. Forsyth. Finding and Tracking People from the Bottom Up. In IEEE Conf. on Computer Vision and Pattern Recognition, vol. 2, pp. 467-474, 2003.

[9] J. Coughlan and H. Shen. Shape Matching with Belief Propagation. In CVPR Workshop on Generative Model Based Vision, June 2004.

[10] Y. Wu, G. Hua, and T. Yu. Tracking Articulated Body by Dynamic Markov Network. In International Conf. on Computer Vision, pp. 1094-1101, 2003.

[11] L. Sigal, S. Bhatia, S. Roth, M. J. Black, and M. Isard. Tracking Loose-Limbed People. In IEEE Conf. on Computer Vision and Pattern Recognition, vol. 1, pp. 421-428, 2004.

[12] M. J. Jones and J. M. Rehg. Statistical Color Models with Application to Skin Detection. Int. J. Computer Vision 46(1), pp. 81-96, 2002.

[13] J. Pearl. Probabilistic Reasoning in Intelligent Systems. Morgan Kaufmann, San Mateo, 1988.
