Feature Estimation for Gesture Recognition

Sy Bor Wang & David Demirdjian

Problem

During natural human interaction, similar arm gestures can convey very different meanings, which poses a real challenge for gesture recognition algorithms. Hidden Markov Models (HMMs) [1] have been used extensively for gesture recognition. Such models usually perform well when the number of gestures to recognize is small; however, their performance degrades sharply as the number of gestures and the similarity between them grow. We show here how Linear Discriminant Analysis (LDA), a technique commonly used in speech recognition, can improve the performance of a gesture recognition system. In brief, LDA estimates a new feature space from the initial input space in which features corresponding to different gesture units (gestemes) are well separated.

Approach

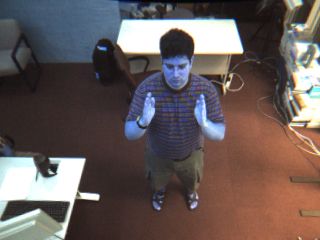

In this work, we used a stereo camera to track the user's body pose with a real-time algorithm developed by our group [4]. Our approach consists of computing features from the estimated body pose and feeding them to an HMM-based gesture recognizer. An example of the tracking results is shown in Figure 1.

Figure 1: Original image (left) and estimated pose (right) of a user.

Drawing on the way phonemes are separated in speech recognition [2,3], we propose to model body gestures using elementary units called "gestemes". Gestemes are estimated as follows. Our input feature vectors are "joint-pose" vectors, each containing several consecutive poses, so that they capture both static and dynamic information about the pose. We assume that B gestemes model all the feature vectors in the N gesture classes. A Gaussian Mixture Model (GMM) with B components, each with a full covariance matrix, is estimated from a set of training feature vectors: its parameters are determined with the EM algorithm, which maximizes the likelihood of the training data. Once EM has converged, each Gaussian cluster is assigned a unique gesteme label.
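The gesteme estimation step can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the window of 3 consecutive poses, the farthest-point initialization, the small covariance ridge `reg`, and the function names `joint_pose_vectors`, `fit_gesteme_gmm` and `gesteme_labels` are all hypothetical choices. It builds joint-pose vectors, fits a B-component full-covariance GMM with EM, and labels each vector with its most probable Gaussian.

```python
import numpy as np

def joint_pose_vectors(poses, window=3):
    """Stack `window` consecutive poses (rows of a T x D array) into one vector each.

    The window length of 3 is a hypothetical choice; the source only says
    "consecutive poses". Output shape: (T - window + 1, window * D).
    """
    T = poses.shape[0]
    return np.stack([poses[t:t + window].reshape(-1) for t in range(T - window + 1)])

def log_gaussian(X, mean, cov):
    """Log density of every row of X under a full-covariance Gaussian."""
    d = X.shape[1]
    chol = np.linalg.cholesky(cov)                 # cov = chol @ chol.T
    z = np.linalg.solve(chol, (X - mean).T)        # whitened residuals, shape (d, n)
    maha = np.sum(z ** 2, axis=0)                  # squared Mahalanobis distances
    log_det = 2.0 * np.sum(np.log(np.diag(chol)))
    return -0.5 * (d * np.log(2.0 * np.pi) + log_det + maha)

def _component_log_probs(X, weights, means, covs):
    """log(weight_k) + log N(x | mean_k, cov_k) for every row x and component k."""
    return np.column_stack([np.log(w) + log_gaussian(X, m, c)
                            for w, m, c in zip(weights, means, covs)])

def fit_gesteme_gmm(X, B, n_iter=100, reg=1e-6, seed=0):
    """EM for a B-component Gaussian mixture with full covariance matrices."""
    rng = np.random.default_rng(seed)
    n, d = X.shape
    # Hypothetical init: farthest-point selection of B training vectors as means.
    means = [X[rng.integers(n)]]
    for _ in range(B - 1):
        dist = np.min([np.sum((X - m) ** 2, axis=1) for m in means], axis=0)
        means.append(X[np.argmax(dist)])
    means = np.array(means, dtype=float)
    # Start every component from the same diagonal covariance of the data.
    covs = np.tile(np.diag(X.var(axis=0)) + reg * np.eye(d), (B, 1, 1))
    weights = np.full(B, 1.0 / B)
    for _ in range(n_iter):
        # E-step: responsibilities, normalized in log space for stability.
        log_p = _component_log_probs(X, weights, means, covs)
        resp = np.exp(log_p - np.logaddexp.reduce(log_p, axis=1, keepdims=True))
        # M-step: re-estimate mixture weights, means and full covariances.
        nk = resp.sum(axis=0) + 1e-12
        weights = nk / n
        means = (resp.T @ X) / nk[:, None]
        for k in range(B):
            diff = X - means[k]
            covs[k] = (resp[:, k, None] * diff).T @ diff / nk[k] + reg * np.eye(d)
    return weights, means, covs

def gesteme_labels(X, weights, means, covs):
    """Label each vector with the index of its most probable Gaussian (its gesteme)."""
    return np.argmax(_component_log_probs(X, weights, means, covs), axis=1)
```

In practice `X` would be the stacked joint-pose vectors of all training sequences, and the labels returned by `gesteme_labels` are the class labels handed to the LDA step.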

After the training features have been classified into gesteme clusters, Linear Discriminant Analysis is run on the labeled training data to estimate a projection matrix L that best separates the gesteme classes, i.e., one that maximizes the ratio of between-class to within-class scatter.
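A minimal NumPy sketch of the LDA step follows, assuming the gesteme labels from the clustering stage are available as integer class labels. The function name `lda_projection`, the ridge term `reg`, and the whitening-based solver are illustrative assumptions; the source does not say how L was computed. The columns of the returned matrix solve the generalized eigenproblem $S_b v = \lambda S_w v$ for the largest eigenvalues, where $S_w$ and $S_b$ are the within-class and between-class scatter matrices.

```python
import numpy as np

def lda_projection(X, labels, n_components, reg=1e-6):
    """Estimate an LDA projection matrix L whose columns are discriminant directions.

    Solves S_b v = lambda S_w v by first whitening S_w, which turns the
    problem into an ordinary symmetric eigenproblem.
    """
    d = X.shape[1]
    overall_mean = X.mean(axis=0)
    S_w = np.zeros((d, d))
    S_b = np.zeros((d, d))
    for c in np.unique(labels):
        Xc = X[labels == c]
        mu_c = Xc.mean(axis=0)
        centred = Xc - mu_c
        S_w += centred.T @ centred                   # within-class scatter
        diff = (mu_c - overall_mean)[:, None]
        S_b += Xc.shape[0] * (diff @ diff.T)         # between-class scatter
    S_w += reg * np.eye(d)                           # hypothetical ridge keeps S_w invertible
    # Whitening transform W with W.T @ S_w @ W = I.
    evals, evecs = np.linalg.eigh(S_w)
    W = evecs / np.sqrt(evals)
    # Eigenvectors of the whitened between-class scatter, largest eigenvalues first.
    b_evals, b_evecs = np.linalg.eigh(W.T @ S_b @ W)
    order = np.argsort(b_evals)[::-1][:n_components]
    return W @ b_evecs[:, order]                     # shape (d, n_components)
```

Projected features are then `X @ L`. With C gesteme classes, at most C - 1 directions carry discriminative information, so `n_components` is normally chosen no larger than that.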

At recognition time, the body poses estimated by the tracker are assembled into "joint-pose" vectors, which are projected with the LDA projection matrix L. The resulting features are then used in an HMM-based framework to classify the gestures.
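The recognition step can be sketched as follows. It assumes, beyond what the source states, one HMM per gesture with diagonal-covariance Gaussian emissions, scored with the forward algorithm, with the gesture whose model gives the highest log-likelihood chosen. The parameter layout, the window length and the names `hmm_log_likelihood` and `classify_gesture` are hypothetical, and HMM training (for example Baum-Welch) is omitted.

```python
import numpy as np

def log_diag_gaussian(Z, means, variances):
    """Log N(z | mean_s, diag(var_s)) for every frame z and state s, shape (T, S)."""
    diff = Z[:, None, :] - means[None, :, :]
    return -0.5 * np.sum(np.log(2 * np.pi * variances)[None] + diff ** 2 / variances[None], axis=2)

def hmm_log_likelihood(Z, log_start, log_trans, means, variances):
    """Forward algorithm in log space: returns log p(Z | HMM)."""
    log_b = log_diag_gaussian(Z, means, variances)
    alpha = log_start + log_b[0]
    for t in range(1, Z.shape[0]):
        # alpha_t(j) = logsumexp_i(alpha_{t-1}(i) + log a_ij) + log b_j(z_t)
        alpha = np.logaddexp.reduce(alpha[:, None] + log_trans, axis=0) + log_b[t]
    return np.logaddexp.reduce(alpha)

def classify_gesture(poses, L, models, window=3):
    """Form joint-pose vectors, project them with L, return the best-scoring gesture.

    `models` maps a gesture name to (log_start, log_trans, means, variances);
    `window` must match the one used when L was estimated.
    """
    T = poses.shape[0]
    joint = np.stack([poses[t:t + window].reshape(-1) for t in range(T - window + 1)])
    Z = joint @ L
    scores = {name: hmm_log_likelihood(Z, *params) for name, params in models.items()}
    return max(scores, key=scores.get), scores
```

Working in log space avoids the numerical underflow that the plain forward recursion suffers on long sequences.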

Experiments

We labeled 11 gestures for the HMM-based recognizer to classify and collected gesture data from 13 users. The users were asked to perform the gestures in front of a stereo camera, and the body-pose sequences of these gestures were recorded. Half of the samples were used to train the HMM recognizer and to estimate the LDA projection, while the other half was used for testing. Examples of these gestures are shown in Figure 2.

Figure 2: Image of body poses articulating four different gestures.

We compared our LDA-based approach with a baseline that uses only the raw body poses as features for the Hidden Markov Model. The gesture recognition error rates of the two techniques are given below:

| Metric | Pose features | LDA features |
|---|---|---|
| Recognition error rate | 12.16% | 7.98% |
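As a quick reading of the table, the absolute and relative reductions in error rate work out as:

$$
\Delta_{\text{abs}} = 12.16\% - 7.98\% = 4.18 \text{ percentage points}, \qquad
\Delta_{\text{rel}} = \frac{4.18}{12.16} \approx 34.4\%
$$

so, on this test set, the LDA features removed roughly one third of the errors made with raw pose features.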

Research Support

This research was carried out in the Vision Interface Group, which is supported in part by DARPA, Project Oxygen, NTT, Ford, CMI, and ITRI. This project was supported by one or more of these sponsors, and/or by external fellowships.

References:

[1] L. R. Rabiner. A tutorial on hidden Markov models and selected applications in speech recognition. Proceedings of the IEEE, Vol. 77, No. 2, pp. 257-286, 1989.

[2] X. Huang, A. Acero and H. Hon. Spoken Language Processing. pp. 426-428, 2001.

[3] C. Hundtofte, G. Hager and A. Okamura. Building of a Task Language for Segmentation and Recognition of User Input to Cooperative Manipulation Systems. In IEEE Virtual Reality Conference, Orlando, Florida, 2002.

[4] D. Demirdjian, T. Ko and T. Darrell. Constraining Human Body Tracking. In Proceedings of ICCV’03, Nice, France, October 2003.

The Stata Center, Building 32 - 32 Vassar Street - Cambridge, MA 02139 - USA tel:+1-617-253-0073 - publications@csail.mit.edu (Note: On July 1, 2003, the AI Lab and LCS merged to form CSAIL.)