| Technical Reports | Work Products | Research Abstracts | Historical Collections |

![]()

|

Research

Abstracts - 2006

|

Using Multiple Segmentations to Discover Objects and their Extent in Image CollectionsBryan C. Russell, Alexei A. Efros, Josef Sivic, William T. Freeman & Andrew ZissermanThe Problem:We seek to discover the object categories depicted in a set of unlabelled images and extract their spatial extent. We demonstrate improved object discovery results by incorporating information produced from multiple segmentations of the input image. Motivation:Common approaches to object recognition involve some form of supervision. This may range from specifying the object's location and segmentation, as in face detection [1,2], to providing only auxiliary data indicating the object's identity [3,4,5,6]. For a large dataset, any annotation is expensive, or may introduce unforeseen biases. Results in speech recognition and machine translation highlight the importance of huge amounts of training data. The quantity of good, unsupervised training data -- the set of still images -- is orders of magnitude larger than the visual data available with annotation. Thus, one would like to observe many images and infer models for the classes of visual objects contained within them without supervision. Previous approaches for object discovery developed the analogy of images as text documents by forming the visual analogue of a word through vector quantization of SIFT-like region descriptors [7,8]. Topic discovery techniques from the statistical text mining community were used to discover visual object classes [9,10,11]. However, with these approaches, the features that discriminate between object classes are not well separated, especially in the presence of significant background clutter, and the implied object segmentations are noisy. What we need is a way to group visual words spatially to make them more descriptive. Approach:Given a large, unlabeled collection of images, our goal is to automatically discover object categories with the objects segmented out from the background. For each image in the collection, we compute multiple candidate segmentations using Normalized Cuts [12]. For each segment in each segmentation, we compute a histogram of visual words [8]. We perform topic discovery on the set of all segments in the image collection using Latent Dirichlet Allocation [13,14], treating each segment as a document. For each discovered topic, we sort all segments by how well they are explained by this topic. The result is a set of discovered topics, ordered by their explanatory power over their best-explained segments. Within each topic, the top-ranked discovered segments will correspond to the objects within that topic. Results:We used the Caltech 101 [15], MSRC [16], and LabelMe [17] datasets to test our approach. Figure 1 shows how multiple candidate segmentations are used for object discovery. Figures 2-4 show montages of the top segments for a discovered object category. For more details, please refer to [18].

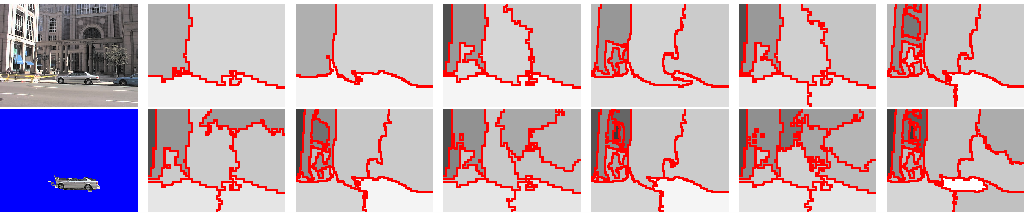

Figure 1: How multiple candidate segmentations are used for object discovery. The top left image is the input image, which is segmented, using Normalized Cuts [12] at different parameter settings, into 12 different sets of candidate regions. The explanatory power of each candidate region is evaluated by how well it is explained by the discovered topic. We illustrate the resulting rank by the brightness of each region. The image data of the top-ranked candidate region is shown in the bottom left, confirming that the top-ranked regions usually correspond to objects.

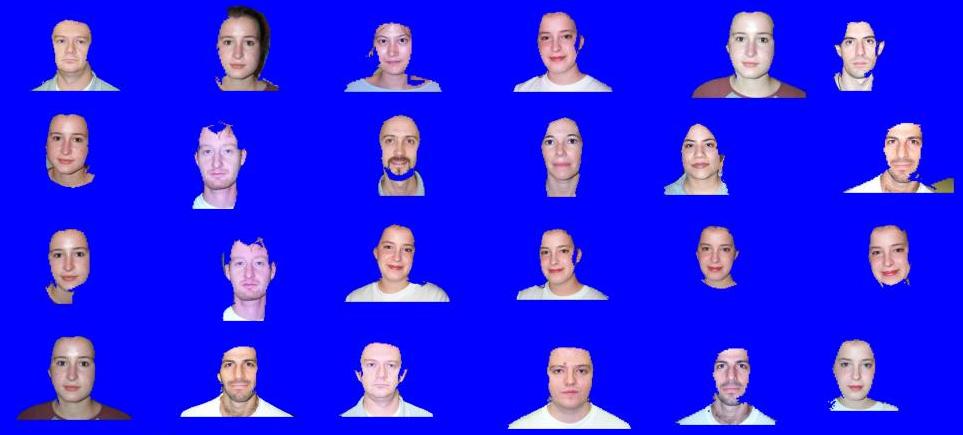

Figure 2: Top segments for one topic (out of 10) discovered in the Caltech 101 dataset [15]. Note how the discovered segments, learned from a collection of unlabelled images correspond to faces.

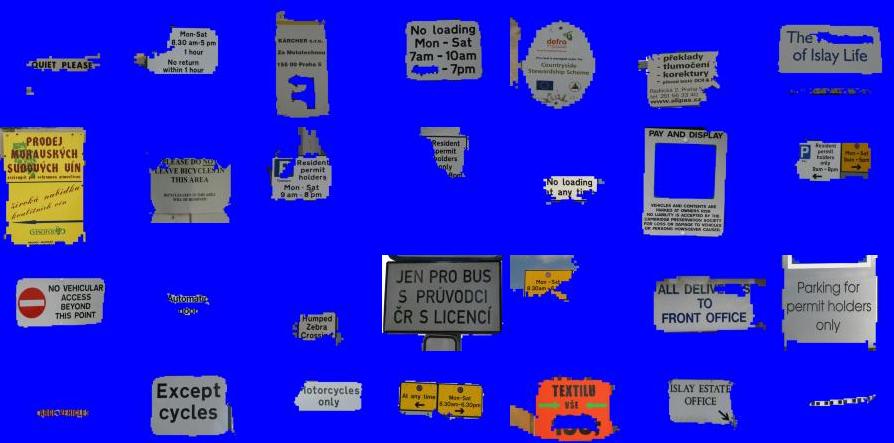

Figure 3: Top segments for one topic (out of 25) discovered in the MSRC dataset [16]. Note how the discovered segments, learned from a collection of unlabelled images correspond to signs.

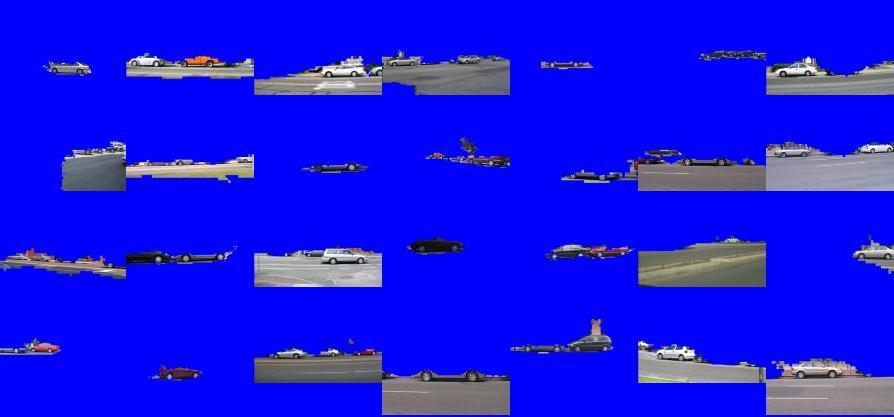

Figure 4: Top segments for one topic (out of 20) discovered in the LabelMe dataset [17]. Note how the discovered segments, learned from a collection of unlabelled images correspond to cars. Research Support:This work was sponsored in part by the EU Project CogViSys, the University of Oxford, Shell Oil (grant #6896597), and the National Geospatial-Intelligence Agency (grant #6896949). References:[1] H. Schneiderman and T. Kanade. A statistical method for 3D object detection applied to faces and cars. In Proc. ICCV, 2003. [2] P. Viola and M. Jones. Rapid object detection using a boosted cascade of simple features. In Proc. CVPR, 2001. [3] K. Barnard, P. Duygulu, N. de Freitas, D. Forsyth, D. Blei, and M. Jordan. Matching words and pictures. Journal of Machine Learning Research, 3:1107-1135, 2003. [4] P. Duygulu, K. Barnard, J.F.G. de Freitas, and D. Forsyth. Object recognition as machine translation: Learning a lexicon for a fixed image vocabulary. In Proc. ECCV, 2002. [5] R. Fergus, P. Perona, and A. Zisserman. Object class recognition by unsupervised scale-invariant learning. In Proc. CVPR, 2003. [6] M. Weber, M. Welling, and P. Perona. Unsupervised learning of models for recognition. In Proc. ECCV, pages 18-32, 2000. [7] D. Lowe. Object recognition from local scale-invariant features. In Proc. ICCV, pages 1150-1157, 1999. [8] J. Sivic and A. Zisserman. Video Google: A text retrieval approach to object matching in videos. IEEE International Conference on Computer Vision, October, 2003. [9] J. Sivic, B. C. Russell, A. A. Efros, A. Zisserman, W. T. Freeman. Discovering objects and their location in images. IEEE International Conference on Computer Vision, October, 2005. [10] J. Sivic, B. C. Russell, A. A. Efros, A. Zisserman, W. T. Freeman. Discovering object categories in image collections. MIT AI Lab Memo AIM-2005-005, February, 2005. [11] P. Quelhas, F. Monay, J. M. Odobez, D. Gatica-Perez, T. Tuytelaars, and L. V. Gool. Modeling scenes with local descriptors and latent aspects. IEEE International Conference on Computer Vision, October, 2005. [12] J. Shi and J. Malik. Normalized cuts and image segmentation. IEEE International Conference on Computer Vision, 1997. [13] D. Blei, A. Ng, and M. Jordan. Latent Dirichlet allocation. Journal of Machine Learning Research, 3:993-1022, 2003. [14] T. Hofmann. Unsupervised learning by probabilistic latent semantic analysis. Machine Learning, 43:177-196, 2001. [15] L. Fei-Fei, R. Fergus, and P. Perona. A Bayesian approach to unsupervised one-shot learning of object categories. In Proc. ICCV, 2003. [16] J. Winn, A. Criminisi, and T. Minka. Object categorization by learned universal visual dictionary. In IEEE Intl. Conf. on Computer Vision, 2005. [17] B. C. Russell, A. Torralba, K. P. Murphy, and W. T. Freeman. LabelMe: a database and web-based tool for image annotation. Technical report, MIT AI Lab Memo AIM-2005-025, 2005. [18] B. C. Russell, A. A. Efros, J. Sivic, W. T. Freeman, and A. Zisserman. Using Multiple Segmentations to Discover Objects and their Extent in Image Collections. IEEE Conference on Computer Vision and Patter Recognition, June, 2006. |

||||

|