
Research Abstracts - 2006


Robot Learning of Tool Manipulation from Human Demonstration

Aaron Edsinger & Charles C. Kemp

Introduction

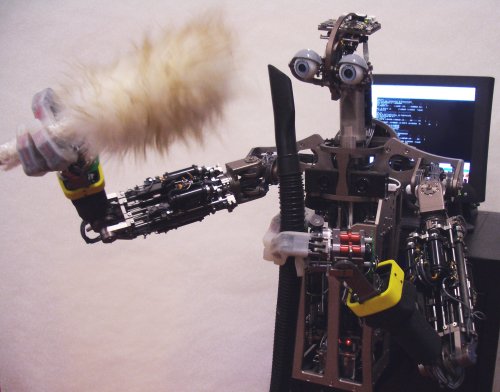

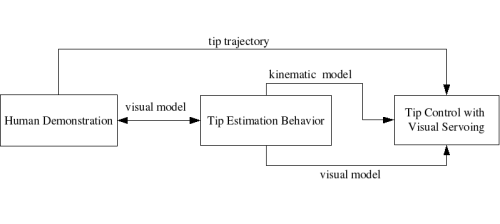

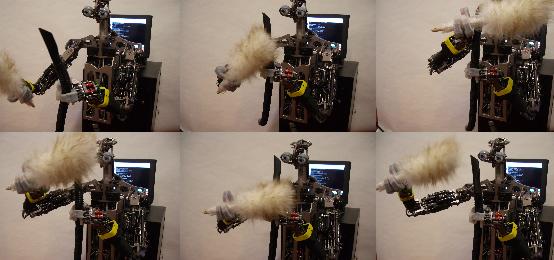

Robots that manipulate everyday tools in unstructured, human settings could more easily work with people and perform tasks that are important to people. Task demonstration could serve as an intuitive way for people to program robots to perform tasks. By focusing on task-relevant features during both the demonstration and the execution of a task, a robot could more robustly emulate the important characteristics of the task and generalize what it has learned.

Focusing on a task-relevant feature, such as the tip of a tool, is advantageous for task learning. In the case of tool use, it emphasizes control of the tool rather than control of the body. This could allow the system to generalize what it has learned across unexpected constraints such as obstacles, since it does not needlessly restrict the robot's posture. It also presents the possibility of generalizing what it has learned across manipulators. For example, a tool could be held by the hand, the foot, or the elbow and still be used to achieve the same task by controlling the tip in the same way.

We are developing methods for robot task learning that make use of the perception and control of the tip of a tool. For this approach, the robot monitors the tool's tip during human use, extracts the trajectory of this task-relevant feature, and then manipulates the tool by controlling this feature. We have demonstrated our approach on a 29 DOF humanoid robot [1]. In one example, the robot learns to clean a flexible hose with a brush. This task is accomplished in an unstructured environment without prior models of the objects or task.

Background

We have previously presented a method that combines edge motion and shape to detect the tip of an unmodeled tool and estimate its 3D position with respect to the robot's hand [2][3]. This method has been successfully demonstrated on the above tools.
In this approach, the robot rotates the tool while using optical flow to find rapidly moving edges that form an approximately semi-circular shape at some scale and position. At each time step, the scale and position with the strongest response serve as a 2D tool tip detection. The robot then finds the 3D position with respect to its hand that best explains these noisy 2D detections. This method was shown to perform well on a wide variety of tools.

In related work, Piater and Grupen [4] showed that task-relevant visual features can be learned to assist with grasp preshaping. The work was conducted largely in simulation using planar objects, such as a square and a triangle. Pollard and Hodgins [5] used visual estimates of an object's center of mass and point of contact with a table as task-relevant features for object tumbling. While these features allowed a robot to generalize learning across objects, the perception of these features required complex fiducial markers.

Experiment

Figure: Overview of the robot task learning framework. The robot watches a human demonstration from which it extracts a tool tip trajectory and potentially a visual model of the tool tip. Once the tools are in the robot's hands, the robot uses its tip estimation behavior to create a visual model of the tip and extend its kinematic model to include the tool. Finally, the robot controls the tip to follow the learned trajectory using visual servoing in conjunction with its kinematic model.

Figure: The output of the tip detector and tracker for two human demonstration sequences. The white curve shows the estimated tip trajectory over the whole sequence and the black cross shows the position of the tip detection in the frame. The top sequence shows a demonstration of pouring with a bottle and the bottom sequence shows a demonstration of brushing.
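
The tip estimation step described under Background (finding the 3D position with respect to the hand that best explains noisy 2D detections) can be illustrated with a small synthetic sketch. It is not the method of [2][3]. It assumes a single pinhole camera with made-up intrinsics, hand poses known exactly from the kinematic model, and no outlier detections, and it uses a plain linear least-squares fit. The function names `rotation_xyz`, `estimate_tip_in_hand`, and `simulate_and_estimate` are hypothetical and exist only for this illustration. Each detection contributes two equations that are linear in the unknown tip position, so rotating the tool through varied poses is what makes the stacked system solvable.

```python
import numpy as np


def rotation_xyz(rx, ry, rz):
    """Rotation matrix for rotations about x, then y, then z (radians)."""
    cx, sx = np.cos(rx), np.sin(rx)
    cy, sy = np.cos(ry), np.sin(ry)
    cz, sz = np.cos(rz), np.sin(rz)
    rot_x = np.array([[1, 0, 0], [0, cx, -sx], [0, sx, cx]])
    rot_y = np.array([[cy, 0, sy], [0, 1, 0], [-sy, 0, cy]])
    rot_z = np.array([[cz, -sz, 0], [sz, cz, 0], [0, 0, 1]])
    return rot_z @ rot_y @ rot_x


def estimate_tip_in_hand(rotations, translations, detections, focal, center):
    """Least-squares tip position in the hand frame from 2D detections.

    rotations[i], translations[i]: pose of the hand in the camera frame
    (assumed known from the robot's kinematic model).
    detections[i]: noisy pixel location of the tip in frame i.
    """
    rows, rhs = [], []
    for R, t, uv in zip(rotations, translations, detections):
        du, dv = uv - center
        # Pinhole model: du * Z = focal * X and dv * Z = focal * Y,
        # where (X, Y, Z) = R @ tip + t. Both are linear in `tip`.
        rows.append(du * R[2] - focal * R[0])
        rhs.append(focal * t[0] - du * t[2])
        rows.append(dv * R[2] - focal * R[1])
        rhs.append(focal * t[1] - dv * t[2])
    tip, *_ = np.linalg.lstsq(np.array(rows), np.array(rhs), rcond=None)
    return tip


def simulate_and_estimate(noise_px=1.0, n_frames=50, seed=0):
    """Synthetic check: rotate a hand-held tool and recover its tip."""
    rng = np.random.default_rng(seed)
    focal, center = 500.0, np.array([320.0, 240.0])  # made-up intrinsics
    true_tip = np.array([0.02, -0.01, 0.15])         # metres, hand frame
    rotations, translations, detections = [], [], []
    for _ in range(n_frames):
        R = rotation_xyz(*rng.uniform(-0.6, 0.6, size=3))
        t = np.array([0.0, 0.0, 0.6]) + rng.uniform(-0.05, 0.05, size=3)
        tip_cam = R @ true_tip + t
        pixel = focal * tip_cam[:2] / tip_cam[2] + center
        rotations.append(R)
        translations.append(t)
        detections.append(pixel + rng.normal(0.0, noise_px, size=2))
    estimate = estimate_tip_in_hand(
        rotations, translations, detections, focal, center
    )
    return true_tip, estimate


if __name__ == "__main__":
    true_tip, estimate = simulate_and_estimate()
    print("true tip     :", true_tip)
    print("estimated tip:", np.round(estimate, 4))
```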

We conducted preliminary testing of our method on a brushing task in which the robot brushes a flexible hose held in its hand. The robot's passive compliance and force control allow it to robustly maintain contact between the brush and the object despite kinematic and perceptual uncertainty.

We use complementary control schemes for distinct parts of task execution. Bringing the tool tip into the general vicinity of the point of action can be performed rapidly in an open-loop fashion using the kinematic model. Once the tip is near the point of action, visual servoing can be used to carefully bring the tip into contact with the point of action. Finally, as the tool tip gets close to the point of action, tactile and force sensing coupled with low stiffness control can be used to maintain contact between the tip and the point of action.

For the task, the robot waves each grasped object in front of the two cameras for about 15 seconds, and an estimate of the object's position in the hand is computed. A visual model for each object and each camera is then computed over the data stream generated during the estimation process. Each visual model automatically instantiates an object tip tracker, and the robot begins visual servoing of the two tips according to the task trajectory. The visual position and orientation trajectories of each tip were previously computed from the human demonstration.

Research Support

This research is supported in part by Toyota Motor Corporation.

References

[1] Aaron Edsinger-Gonzales and Jeff Weber. Domo: A Force Sensing Humanoid Robot for Manipulation Research. In Proceedings of the 2004 IEEE International Conference on Humanoid Robots, Santa Monica, Los Angeles, CA, USA, November 2004.

[2] Charles C. Kemp and Aaron Edsinger. Robot manipulation of human tools: Autonomous detection and control of task relevant features. In Submission to: 5th IEEE International Conference on Development and Learning (ICDL-06), Bloomington, Indiana, 2006.

[3] Charles C. Kemp and Aaron Edsinger. Visual Tool Tip Detection and Position Estimation for Robotic Manipulation of Unknown Human Tools. Technical Report AIM-2005-037, MIT Computer Science and Artificial Intelligence Laboratory, 2005.

[4] Justus H. Piater and Roderic A. Grupen. Learning appearance features to support robotic manipulation. In Proceedings of the Cognitive Vision Workshop, 2002.

[5] N. Pollard and J.K. Hodgins. Generalizing Demonstrated Manipulation Tasks. In Proceedings of the Workshop on the Algorithmic Foundations of Robotics (WAFR '02), 2002.
