| Technical Reports | Work Products | Research Abstracts | Historical Collections |

![]()

|

Research

Abstracts - 2006

|

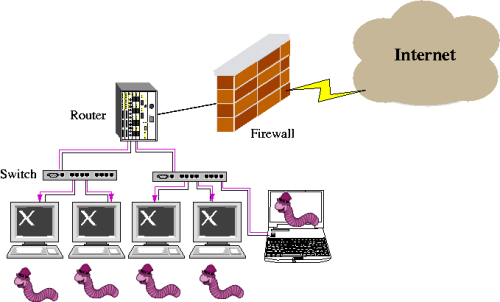

On the Adaptive Real-Time Detection of Fast-Propagating Network WormsJaeyeon Jung, Rodolfo A. Milito & Vern PaxsonIntroductionIf a network worm penetrates a site's perimeter, it can quickly spread to other vulnerable hosts inside the site. The infection propagates by the compromised host repeatedly attempting to contact and infect new potential victims. Figure 1 illustrates a situation where a firewall becomes ineffective when a user connects his infected laptop directly to the network. Hence, many networks employ a system that monitors outgoing traffic from local hosts with an attempt to quickly identify an infected host to prevent further damage.

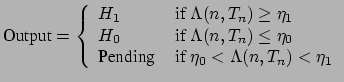

Figure 1: Peripheral defense is not enough to prevent worm propagation The traffic pattern of fast worm propagation---a single host quickly contacting many different hosts---is a prominent feature across a number of types of worms, and detecting such patterns constitutes the basis for several worm detection approaches [1][2][3]. However, the diversity of traffic makes it difficult to tune existing worm detection methods that presume preselected thresholds for connection rates and window sizes over which to compute whether a host's activity is "too quick". Finding a single threshold rate that accommodates all (or almost all) benign hosts requires excessive tuning because of diverse application behaviors (e.g., a Web browser generating multiple concurrent connections to fetch embedded objects vs. an SSH client connecting to a server). We develop an algorithm for detecting fast-propagating worms based on the rate at which the infected hosts initiate connections to new destinations. The foundation of our algorithm, RBS (rate-based sequential hypothesis testing), derives from the theory of sequential hypothesis testing, the use of which for detecting randomly scanning hosts was first introduced by our previous work with TRW [4]. The sequential hypothesis testing methodology enables engineering the detectors to meet false positives and false negatives targets, rather than triggering when fixed thresholds are crossed. RBS: Rate-Based Sequential Hypothesis TestingRBS operates on a per-host and per-connection basis and does not require access to packet contents. It is built on a probabilistic model of a typical benign host's network behavior that a benign host rarely generates more than a few first-connect connections per second, and the time intervals between those connections can be approximately modeled as exponentially distributed. Let Define Based on Equation (1), we can develop a sequential hypothesis test in which we define the likelihood ratio as: and the detection rules as:  where we can set Wald shows that setting thresholds as above guarantees

that the resulting false positive rate is bounded by

There are four parameters to set in order to run RBS. First, Since a host's instantaneous first-contact rate can vary a great deal

over time, RBS needs to estimate whether the current behavior provides

evidence strong enough to choose one hypothesis against the other. For

instance, if a host has initiated Likewise, we can obtain a threshold elapsed time

Finally, Equations (5) (6)

show that RBS's decision is made based on two parameters -- the number

of attempts, Results Table 1 shows the simulation results of RBS

for the trace data collected from Lawrence Berkeley Laboratory as we vary

This evaluation result shows that when the factor of speed difference,

ConclusionWhile built using a model that the time between connection attempts to new destinations is exponentially distributed (which we show is a reasonable approximation for bursts of activity), RBS decisions reflect the aggregate measurement of the total elapsed time over a number of attempts, not the characteristics of individual arrivals. We define RBS in terms of a single discriminating metric--the rate of connection attempts--which differs substantially between benign hosts and an important class of worms. While the choice of such a metric evokes the measurement of an average rate over a window of certain size (and the comparison of the measured rate to a fixed threshold), RBS is more elaborate. The algorithm draws from sequential hypothesis testing the ability to adapt its decision-making in response to the available measurements in order to meet specified error requirements. We can view this as an adaptation of both the window size (i.e., how many attempts to make a decision) and the threshold (i.e., what is the minimum measured rate over that window that leads to a trigger). This adaptation gives RBS a robustness unseen in fixed window/threshold schemes. Research SupportWe gratefully acknowledge funding from the National Science Foundation under Cooperative Agreement No. ANI-0225660 and Electronics & Telecommunications Research Institute, Korea. References[1] Jamie Twycross and Matthew M. Williamson. Implementing and Testing a Virus Throttle. In Proceedings of the 12th USENIX Security Symposium, August 2003. [2] Stuart E. Schechter, Jaeyeon Jung and Arthur W. Berger. Fast Detection of Scanning Worm Infections In Proceedings of the 7th International Symposium on Recent Advances in Intrusion Detection (RAID'04), September 2004. [3] Shigang Chen and Yong Tang. Slowing Down Internet Worms. In Proceedings of the 24th International Conference on Distributed Computing Systems (ICDCS'04), March 2004. [4] Jaeyeon Jung, Vern Paxson, Arthur W. Berger and Hari Balakrishnan. Fast Portscan Detection Using Sequential Hypothesis Testing. In Proceedings of the IEEE Symposium on Security and Privacy, May 2004. [5] Abraham Wald. Sequential Analysis. J. Wiley & Sons, New York, 1947. |

|||||

|