| Technical Reports | Work Products | Research Abstracts | Historical Collections |

![]()

|

Research

Abstracts - 2006

|

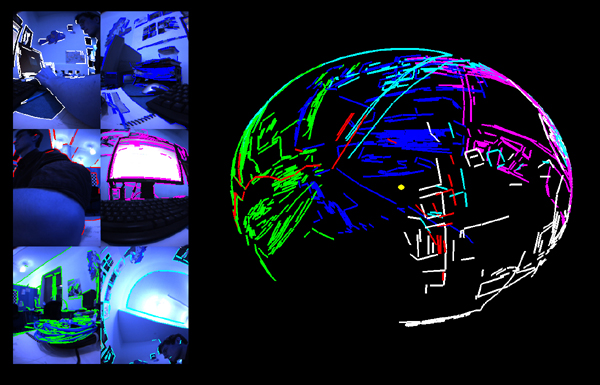

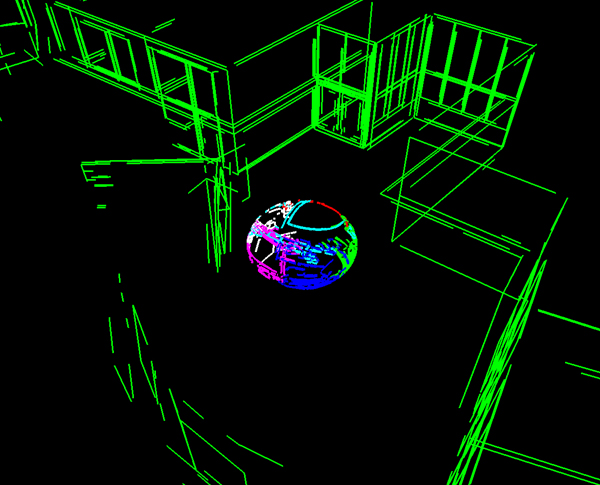

Fine-Grained 6-DOF Localization from Known StructureAuthor O. Koch & S. TellerIntroductionFast and robust localization remains a key challenge in robotics today. The goal of our project is to provide real-time 3D localization given an omnivision camera and a rough 3D model of the environment. The key idea is to maintain a set of correspondences between the observed features and the real-world features. The 6-DOF pose of the camera is computed by minimizing a cost function based on the reprojection error. This algorithm allows to update the camera pose (position and orientation) assuming a continuous motion. We also demonstrate how to initialize the system at startup (i.e create a set of correspondences from the void). Image features vs real-world featuresWe use 2D edges as image features. Edges are fast to compute, easy to track, and more reliable than point features. Since our omnivision system is subject to high distortion, edges are rectified using a spherical distortion model and projected onto a virtual sphere centered on the camera center (fig. 1). We use 3D lines as real-world features. Figure 2 shows a rough 3D model of the lab area 32-33x.  Figure 1: observed features (left) and their spherical representation (right). Edges are color-coded by camera. Notice the overlap between the cameras. The maintainance phaseIn the maintainance phase, a set of correspondences between the image edges and the real-world 3D lines is available. These correspondences are maintained as the camera moves and used to compute the new camera pose given the previous camera pose as initial guess. This process is expected to be fast and robust if a sufficient number of correspondences is given (a dozen at least). Since the camera has a very wide field of view, our system is naturally robust to occlusion. To combat clutter, we observe that, at any point in time, the current camera pose can provide a rough estimate of where the real-world features should appear in the camera space. Thus, most of the image space can be disregarded for edge detection. This principle makes the edge detector faster and prevents it from introducing clutter edges in the pose solver.  Figure 2: correspondences are maintained between the observed edges (on the sphere) and the real-world features (in green). The initialization phaseIn the initialization phase, the camera is in the void and no prior information is available about the camera pose. Doing a brute-force matching between the observed features and the world features would be clearly untractable. Instead, we show that a three correspondences are enough to obtain a good estimate of the camera pose. To find such three correspondences, we develop a RANSAC framework that matches random triplets of image features against random triplets of real-world features. Testing whether three given real-world lines could have generated three given image edges can be done very quickly without having to compute the corresponding camera pose. In fact, the test fails most of the time, which makes the RANSAC algorithm very fast. Once a successful configuration has been reached, the camera pose is computed and refined using the remaining real-world features. If a sufficient number of them find a match in the image space, the initialization phase is over. The initialization phase can be made even quicker using constraints such as imposing a vertical position to the camera at startup. Real-time end-to-end architectureWe strive to build a real-time system capable of demonstrating successful localization over a long period of time (one hour) on a real-world, complex environment (several buildings). To this end, we will develop a framework able to (1) run a successful initialization, (2) stay in maintainance phase for long periods of time and (3) recover from a loss of correspondences fairly quickly. References:[1] Bartoli et al., Motion From 3D Line Correspondences: Linear and Non-Linear Solutions, roceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Madison, Wisconsin, USA, pp. 477-484, June 2003. [2] M. Bosse, R. Rikoski, J. Leonard, S. Teller, Vanishing Points And 3d Lines From Omnidirectional Video, Proceedings of ICIP 2002, Rochester, NY, pp. 513-516, September 2002. [3] S. Coorg and S. Teller, Matching and Pose Refinement with Camera Pose Estimates, MIT Technical Report MIT/LCS/TM-561, 1996. [4] P.I. Corke, D. Strelow, and S. Singh, Omnidirectional visual odometry for a planetary rover, In Proceedings of IROS 2004, 2004. [5] Drummond et al., Real-Time Tracking of Complex Structures for Visual Servoing, In Workshop on Vision Algorithms, pp. 69-84, 1999. [6] O. Faugeras and Q. Luong and T. Papadopoulou, The Geometry of Multiple Images: The Laws That Govern The Formation of Images of A Scene and Some of Their Applications, ISBN 0262062208, MIT Press, Cambridge, MA USA, 2001. [7] R. Hartley and A. Zisserman, Multiple View Geometry in Computer Vision, First Edition, Cambridge University Press, ISBN: 0521623049, 2000. [8] B.K.P Horn, Closed-Form Solution of Absolute Orientation Using Unit Quaternions, Journal of the Optical Society of America, vol. 4, number 4, April 1987. [9] Taylor et al., Structure-from-motion from line segments in multiple images, In IEEE Int. Conf. on Robotics and Automation, pages 1615--1620, 1992. |

||||

|