
|

Research

Abstracts - 2006

|

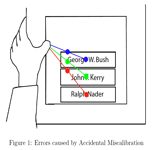

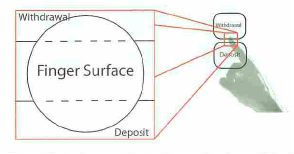

Improving the Reliability of Touchscreens

Christopher Leung & Larry Rudolph

The Challenge of Touchscreens

Service kiosks are rapidly becoming more prevalent in today's increasingly fast-paced world. They can be found as ticket dispensers at movie theaters, ATMs at financial service providers, and check-in terminals at airports. Kiosks serve as a cheap, quick, and convenient method of delivering services and information to the general public, and their finger-operated touchscreens provide a natural and intuitive method of input for novice users. As touchscreens become more common, users will depend on them more heavily and come to demand more of these machines.

Although kiosks have been growing in popularity for some time, touchscreens are still notorious for their inaccuracy. Even slight miscalibration can lead to consistent errors in selection. This problem can quickly lead to user frustration and, if ignored, can offset the advantages that a simple touchscreen interface provides. This work explores a novel method that attempts to handle these problems and improve the usability of touchscreen kiosks.

Kiosks cannot eliminate human error completely as long as people are interacting with them, but there are ways of coping with error and incorporating a degree of "sloppiness" into interpreting users' actions. Fingers are not perfect input devices. Although fingers are very skilled at certain tasks, they are not meant to be extremely precise when it comes to pointing. Users can indicate a general area of interest with a finger very accurately and quickly, but when the size of the target falls below a certain threshold (the size of the surface of the finger), fingers are wildly unpredictable. When this is coupled with a slightly miscalibrated screen, user interaction can be nightmarish. These observations have resulted in a more precise model for fingers.
Fingers come with inherent unpredictability in this respect, and that unpredictability can never be eliminated. However, it is possible to design systems that accommodate these shortcomings. To take advantage of the strengths of fingers, it is necessary to understand the actions fingers are suited for, which include pointing, touching, and drawing. We provide novel interaction techniques that offer a more robust method of interaction, modelled specifically to account for the imprecise nature of fingers.

One idea of natural interaction that has largely been overlooked in the past is the notion of gestures drawn on the touchscreen. On many small, portable devices such as PDAs and tablet PCs, recognition of strokes drawn onscreen has become common. Most people have some skill at using their fingers to reliably draw simple shapes. However, this technique has been tailored more towards handwriting recognition than the execution of actions. Natural gestures provide the user with a set of shortcuts to accelerate transactions. One of our goals was to implement a novel way of executing commands on the kiosk that was flexible and easily scalable. To achieve this, we developed a finger-based gesture recognition system that allows the user to execute commands on the kiosk with a simple wave of the finger.

One prominent advantage of gestural input is that gestures take up no additional room onscreen. Touchscreens are not usually large, and onscreen real estate is precious. By supplementing existing systems with gesture support, designers can provide another robust method of input without using additional space. Additionally, gestures can be quickly and easily learned. More importantly, gestures are composed of relative movements. Even if the user accidentally rests their free hand on the screen, the movements of the finger on the screen are relative, and thus do not cause problems similar to those of pointing.
Although the location of the gesture may be off in this case, the shape of the gesture nevertheless remains the same. This is a crucial feature of finger gesturing that handles miscalibration cleanly and robustly.

With the finger gesturing system, each gesture "begins" once the user places a finger on the touchscreen. If the finger is removed from the touchscreen without moving across the screen, the touch is treated as a normal mouse click. However, if the finger is dragged across the screen, the system begins to take notice. Each incremental movement of the finger is recognized and stored as a character, which is appended to the gesture string. Once the user lifts the finger from the screen, the gesture string is interpreted to determine whether the gesture was valid.

The primary method of finger stroke recognition maps each incremental drag of the finger to a direction on a control pad. Once the finger is placed on the screen, every subsequent move along the face of the screen is mapped to one of eight directions: up, down, left, right, or diagonally up-right, up-left, down-right, or down-left. Each gesture is represented by a string of characters, each representing a direction. Though this implementation is fairly simple, it proved to be very effective. Each time the mouse was dragged farther than the minimum movement threshold (10 pixels, which worked best in our experiments) from the last recorded point, the movement was immediately analyzed to determine its direction, and a character representing that direction was appended to the current string. Once the gesture was completed, the gesture string could be analyzed to see if it fit the format of a defined gesture. Continuing with the example of the check mark, any gesture that generally moved down and to the right, then up and to the right should be interpreted as a check mark.
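
The encoding step described above can be illustrated with a short Python sketch. It is not the authors' implementation: the function names, the use of straight-line distance for the 10-pixel threshold, and the equal 45-degree sectors are assumptions made for illustration. The characters D, R, U, 3, and 9 are taken from the check-mark expression in this abstract (which suggests a numeric-keypad convention for diagonals); L, 7, and 1 are assumed by analogy.

```python
import math

MIN_MOVE_PX = 10  # minimum drag distance reported in the abstract

# Index k is the direction at k * 45 degrees, counter-clockwise from "right".
# D, R, U, 3, 9 come from the abstract's check-mark pattern;
# L, 7 (up-left), and 1 (down-left) are assumptions by keypad analogy.
DIRECTIONS = ["R", "9", "U", "7", "L", "1", "D", "3"]


def direction_char(dx, dy):
    """Map a screen-space movement to one of eight direction characters.

    Screen coordinates are assumed to have y growing downward,
    so dy is negated to make "up" a positive angle.
    """
    angle = math.degrees(math.atan2(-dy, dx))
    sector = round(angle / 45) % 8
    return DIRECTIONS[sector]


def encode_gesture(points):
    """Turn a list of (x, y) touch samples into a gesture string.

    A new character is considered only once the finger has moved farther
    than MIN_MOVE_PX from the last recorded point, and identical
    consecutive characters are collapsed into one. An empty result
    means the finger never moved far enough, i.e. a plain tap.
    """
    if not points:
        return ""
    last_x, last_y = points[0]
    chars = []
    for x, y in points[1:]:
        dx, dy = x - last_x, y - last_y
        if math.hypot(dx, dy) <= MIN_MOVE_PX:
            continue  # still within the threshold of the last recorded point
        ch = direction_char(dx, dy)
        if not chars or chars[-1] != ch:
            chars.append(ch)
        last_x, last_y = x, y
    return "".join(chars)


if __name__ == "__main__":
    # Down-right, then up-right: a rough check mark.
    stroke = [(0, 0), (12, 12), (24, 24), (40, 8), (56, -8)]
    print(encode_gesture(stroke))
```

Because only the differences between successive points are used, shifting every sample by a constant offset (as a miscalibrated screen would) leaves the resulting string unchanged.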
One possible regular expression that characterizes a check mark could be:

[DR3]+[UR9]+

Since no two identical strokes can be recorded consecutively (such as RR or DD), this simple expression is powerful enough to recognize a large variety of check marks without confusion with other types of gestures. Examining the expression more closely, we can see that it recognizes gesture strings that represent at least some movement down and/or right, followed by some movement up and/or right. These types of gestures should be classified as check marks. In most cases, the regular expression above is robust enough to recognize almost any check mark shape, regardless of position onscreen or sloppiness on the part of the user. Using regular expressions to classify gesture strings proved to be extremely powerful; the relatively simple expression above correctly recognized a wide range of sloppily drawn check marks.

We are continuing to extend this work to handle all types of touchscreen errors, including on large screens, and preliminary results are encouraging. In our user studies, a 70% reduction in the error rate was observed, and screen gestures were, on average, 20% faster than tapping buttons on the screen. This work is part of a general framework that reduces errors in all types of user interactions.

Acknowledgement

This work was funded by Project Oxygen. |
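
The check-mark expression `[DR3]+[UR9]+` given in this abstract can be tried out with Python's standard `re` module. The sketch below is illustrative only: the function name `is_check_mark` is hypothetical, and requiring the whole gesture string to match (via `fullmatch`) is an assumption about how the expression is applied.

```python
import re

# The check-mark pattern quoted in the abstract:
# some movement down and/or right, then some movement up and/or right.
CHECK_MARK = re.compile(r"[DR3]+[UR9]+")


def is_check_mark(gesture):
    """Return True if the entire gesture string fits the check-mark pattern."""
    return CHECK_MARK.fullmatch(gesture) is not None


if __name__ == "__main__":
    for gesture in ["39", "D3R9U", "DR", "L7", "93", "3"]:
        print(gesture, is_check_mark(gesture))
```

Sloppy variants such as "D3R9U" still match, while a stroke that starts by moving up ("93") or consists of a single direction ("3") does not.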

||||

|