
Research Abstracts - 2006

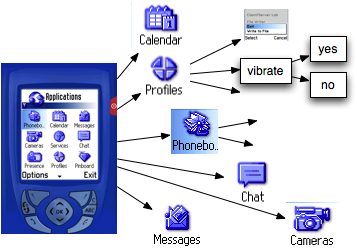

Non-GUI, Dynamic Control of Smart Phones

Emily Yan, Alexander Ram & Larry Rudolph

Problem Statement

Smart phones challenge the average person to make use of their extensive capabilities. Despite their small size, mobile phones have nearly all the capabilities of modern computers, yet their services must be controlled through tiny keypads and screens. The keypad and screen are used not only to look up telephone numbers and calendar events but also to surf the web, edit video, play games, and perform nearly every other function found on modern personal computers. Navigating the many functions, settings, and services of a PC with a large display and full keyboard is hard enough; on a phone, which cannot display many alternatives at once, users waste too much time navigating through menus. There is a need for additional ways to interact with a phone. Speech is probably the first that comes to mind, although one would prefer full natural-language speech to merely reciting a sequence of keywords. Others include gestures, scripts, and remote agents. In all these cases, it is necessary to know which functions the phone provides, how to invoke them, and which of them are available in its current configuration. For example, while recording a video, most phones will not allow one to look up a name in the phone book.

The standard solution is to have each application on the phone publish a set of APIs or services. Scripts, agents, or the processing component of some input modality then invoke these services or call the appropriate API. For example, with a natural-language interface a user might issue the command to take a picture and send it to her advisor. The interface must first understand this command and then translate it into a script or a series of commands. The camera has a well-defined set of published APIs, as do the email and address-book applications.
In theory, the rich semantic information in the request, aided by a database of phone actions, provides enough to support such a capability. The challenge is how to provide infrastructure on the phone that supports non-GUI interactions without restricting third-party application developers and without limiting use to a few specific phone models. Third-party applications may not provide sufficient APIs. Documentation for a phone's APIs and services might be out of date. APIs and services that are rarely, if ever, used may never have been debugged. Finally, non-GUI uses of the phone should at the very least be able to do everything that can be done via the GUI and keypad.

Our Proposed Solution

Since the menu items, options, selectable icons, and all the other GUI widgets are well debugged, well understood, and form the universe of a phone's functionality, we propose to make them the basis of all non-GUI interactions as well. The sequence of selections and keypresses forms a tree-like structure. The root of the tree is the default screen. From there, any one of the icons can be selected, so every one of those items is a child of the root. Each child is an application that, in turn, has a set of menu items and icons that can be selected; these are the children of the application node. Continuing in this fashion yields a tree-like structure. There may be several ways to reach the same leaf element; in that case, we simply replicate nodes to maintain a simple tree structure. The tree is static when the phone is first turned on, but as applications are added or removed and services are enabled or disabled, it must be modified dynamically. We propose to do this by inserting a "wedge" that intercepts calls to the window system and makes any necessary modifications to the tree. Moreover, the current state of the phone corresponds to some node in the tree, and this too is maintained dynamically. In addition to windowing actions, services, APIs, and views are also dynamically added to and removed from the system; we can capture these and augment the tree with them as well. Finally, the phone holds several databases, and although the GUIs provide some limited search, it is more efficient to provide explicit, direct interaction with well-defined, common databases such as the address book and calendar. The tree not only defines what can be done; it also shows how to do it. Thus, a non-GUI interface need only specify a destination node in the tree, and perhaps some parameters, and our runtime system can follow the path from the current node to the destination.
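A minimal Python sketch of the navigation tree and path following described above might look as follows. It assumes that each screen is a node, that a hypothetical "Back" action returns to the parent screen, and that selecting a labelled item descends to a child. The class `Node` and the functions `plan` and `find` are illustrative names, not part of the authors' system, and `remove` merely stands in for the update the window-system wedge would perform.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass(eq=False)  # identity comparison avoids recursing through parent/child links
class Node:
    """One GUI state: the default screen, an application, a menu, or an item."""
    label: str
    parent: Optional[Node] = field(default=None, repr=False)
    children: list[Node] = field(default_factory=list, repr=False)

    def add(self, label: str) -> Node:
        # Selecting `label` on this screen leads to a new child node. A screen
        # reachable by several routes just gets another copy, so this stays a tree.
        child = Node(label, parent=self)
        self.children.append(child)
        return child

    def remove(self, label: str) -> None:
        # Hypothetical hook a wedge could call when an application is removed
        # or a service is disabled: drop that subtree from this node.
        self.children = [c for c in self.children if c.label != label]

    def ancestors(self) -> list[Node]:
        # Nodes on the path from the root down to this node, inclusive.
        path = []
        node: Optional[Node] = self
        while node is not None:
            path.append(node)
            node = node.parent
        return path[::-1]


def find(root: Node, label: str) -> Optional[Node]:
    """Depth-first search for the first node with the given label."""
    if root.label == label:
        return root
    for child in root.children:
        hit = find(child, label)
        if hit is not None:
            return hit
    return None


def plan(current: Node, target: Node) -> list[str]:
    """Actions moving the phone from `current` to `target` in the same tree.

    Press "Back" up to the lowest common ancestor, then select each item
    on the way down to the target.
    """
    up, down = current.ancestors(), target.ancestors()
    common = 0
    while common < min(len(up), len(down)) and up[common] is down[common]:
        common += 1
    actions = ["Back"] * (len(up) - common)
    actions += [f"Select {node.label}" for node in down[common:]]
    return actions
```

For example, in a tree with Home → Camera → Capture and Home → Messaging → New message → Send, `plan` from the Capture screen to the Send item yields two Back presses followed by three selections. Replicating nodes that are reachable by several routes gives every node a unique path to the root, which is what keeps this common-ancestor computation simple.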
The runtime system follows this path, once again, by interposing a small wedge into the window system.

Extensions

The first application of our system will be as a counterpart to a natural-language component based on the START system. START will take as input a natural-language query or command. With database support, it will then interact with the tree to generate an ordered sequence of tree nodes, together with any parameters and specifiers that are needed. The actions will then be carried out on the phone. The tree provides rich semantic information about the functionality of the phone. It also provides a way to correct or disambiguate commands: when the phone is at a given node, a command need only be semantically "close" to that node's children or close relatives for the system to guess the appropriate action. The commands that toggle a variable are easily identified in the tree (they are leaf subtrees). Together, they give a clear picture of the phone's current configuration and provide rich semantics about what can and cannot be configured. We hope to infer this and other aspects of the phone's status from the tree. The ultimate goal is to allow everyone, from novice to expert, to find and make use of all of our smart phones' capabilities.

Acknowledgments: This project is funded by Nokia.
