
Research Abstracts - 2006


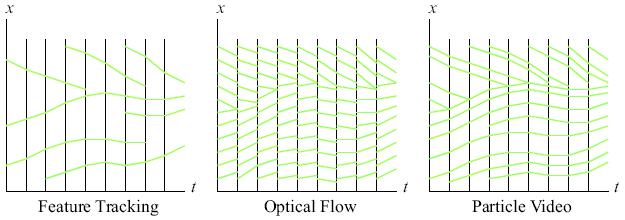

Particle Video

Peter Sand & Seth Teller

Introduction

Video motion estimation is often performed using feature tracking or optical flow. Feature tracking estimates the trajectories over many frames of a sparse set of salient image points, whereas optical flow estimates a dense motion field from one frame to the next. Our goal is to combine these two approaches: to estimate motion that is both spatially dense and temporally long-range. For any image point, we would like to know where the corresponding scene point appears in all other video frames (until the point leaves the field of view or becomes permanently occluded).

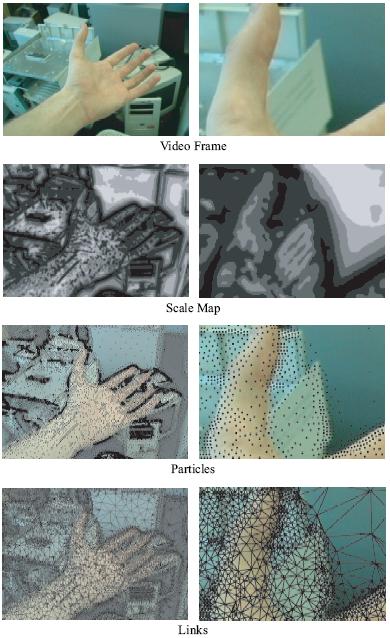

This kind of motion estimation is useful for a variety of applications. Multiple observations of each scene point can be combined for super-resolution, noise removal, segmentation, and increased effective dynamic range. The correspondences can also improve space-time manipulations, such as frame interpolation for slow motion and stabilization. Long-range motion estimation can also facilitate operations that require specifying time-varying video regions, such as matting, rotoscoping, and painting onto the scene.

Particle Video Approach

Our approach [1] represents video motion using a set of particle trajectories. Each particle denotes an interpolated point sample of the image, in contrast to a feature patch that represents a neighborhood of pixels. Particle density is adaptive, so that the algorithm can model detailed motion with substantially fewer particles than pixels. The algorithm optimizes particle trajectories using an objective function that incorporates point-based image matching, inter-particle distortion, and frame-to-frame optical flow. The algorithm also extends and truncates particle trajectories to handle complex behavior near occlusions.

Our contributions include posing the particle video problem, defining the particle video representation, and presenting an algorithm for building particle videos. We provide a new motion optimization scheme that combines variational techniques with an adaptive-density motion representation. The algorithm uses weighted links between particles to implicitly represent grouping, which provides an alternative to discrete layer-based representations. The particle representation provides long-range trajectory information that is not generally used or provided by existing optical flow algorithms.
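To make the idea of an objective over particle trajectories more concrete, the following is a minimal sketch of two of the ingredients named above: point-based image matching and inter-particle distortion over weighted links. It is not the formulation from [1]. The function names (`bilinear_sample`, `robust`, `particle_energy`), the parameters `alpha` and `eps`, the choice of robust penalty, and the use of each particle's first-frame appearance as its reference are all assumptions made for illustration. The optical flow term, adaptive particle density, and occlusion handling are omitted.

```python
import numpy as np


def bilinear_sample(image, x, y):
    """Sample an H x W x C float image at a sub-pixel location (x, y)."""
    h, w = image.shape[:2]
    x = float(np.clip(x, 0.0, w - 1.0))
    y = float(np.clip(y, 0.0, h - 1.0))
    x0, y0 = int(np.floor(x)), int(np.floor(y))
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = x - x0, y - y0
    top = (1.0 - fx) * image[y0, x0] + fx * image[y0, x1]
    bottom = (1.0 - fx) * image[y1, x0] + fx * image[y1, x1]
    return (1.0 - fy) * top + fy * bottom


def robust(squared_residual, eps=1e-3):
    """Smooth, outlier-tolerant penalty on a squared residual (assumed form)."""
    return np.sqrt(squared_residual + eps ** 2)


def particle_energy(frames, tracks, links, alpha=1.0):
    """Illustrative energy for a set of particle trajectories.

    frames: list of T float arrays, each H x W x C
    tracks: array of shape (P, T, 2) holding (x, y) per particle per frame
    links:  list of (i, j, weight) tuples connecting particles i and j
    alpha:  hypothetical weight balancing distortion against matching
    """
    num_particles, num_frames, _ = tracks.shape
    energy = 0.0

    # Matching term: a particle should look the same in every frame as it
    # did in its first frame (a point sample, not a patch).
    for p in range(num_particles):
        reference = bilinear_sample(frames[0], *tracks[p, 0])
        for t in range(1, num_frames):
            current = bilinear_sample(frames[t], *tracks[p, t])
            energy += robust(np.sum((current - reference) ** 2))

    # Distortion term: linked particles should move together; the link
    # weight controls how strongly a pair is coupled.
    for i, j, weight in links:
        for t in range(num_frames - 1):
            motion_i = tracks[i, t + 1] - tracks[i, t]
            motion_j = tracks[j, t + 1] - tracks[j, t]
            energy += alpha * weight * robust(np.sum((motion_i - motion_j) ** 2))

    return float(energy)


# Synthetic example: a smooth pattern that shifts right by one pixel per frame.
ys, xs = np.mgrid[0:24, 0:32]
frames = [(np.sin(0.3 * (xs - t)) + np.cos(0.2 * ys))[..., None] for t in range(4)]

starts = np.array([[10.0, 8.0], [12.0, 8.0]])
steps = np.arange(4, dtype=float)[None, :, None] * np.array([1.0, 0.0])
good_tracks = starts[:, None, :] + steps                 # follow the pattern
static_tracks = np.repeat(starts[:, None, :], 4, axis=1)  # never move
links = [(0, 1, 1.0)]

good_energy = particle_energy(frames, good_tracks, links)
static_energy = particle_energy(frames, static_tracks, links)
```

In this synthetic setup, trajectories that follow the moving pattern score a lower energy than trajectories that stay fixed, which is the behavior an optimizer over such an objective would exploit. Reducing a link's weight weakens the coupling between the two particles, which is one way links can express grouping without assigning particles to discrete layers.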

Design Goals

Our primary design goal is the ability to model complex motion and occlusion. We want the algorithm to work on general video, which may include close-ups of people talking, multiple independently moving objects, textureless regions, narrow fields of view, and very complicated geometry (e.g., trees).

A particle approach provides this kind of flexibility. Particles can represent complicated geometry and motion because they are small; a particle's appearance will not change as rapidly as that of a large feature patch, and a particle is less likely to straddle an occlusion boundary. Particles represent motion in a non-parametric manner; they do not assume that the scene consists of a set of planar or rigid components.

Of course, a flexible system needs constraints, which motivates another design decision: consistency is more important than correctness. If the scene includes arbitrary deforming objects with inadequate texture, finding the true motion may be hopeless. Typically, this problem is addressed with geometric assumptions about scene rigidity and camera motion. Instead, we simply strive for consistency: for example, that red pixels from one frame are matched with red pixels in another frame. For many applications, this kind of consistency is sufficient (and certainly more useful than a failure caused by the lack of traditional geometric constraints).

Conclusion

Dense long-range video correspondences are useful for a variety of applications. This kind of motion information could improve methods for many existing vision problems in areas ranging from robotics to filmmaking.

Our particle representation differs from standard motion representations, such as vector fields, layers, and tracked feature patches. Existing multi-frame optical flow algorithms simultaneously combine multiple frames (often using a temporal smoothness assumption), but they do not enforce long-range consistency of the correspondences. Our algorithm improves frame-to-frame optical flow by enforcing long-range appearance consistency and motion coherence.

Our algorithm does have several limitations. Like most motion algorithms, the approach sometimes fails near occlusions. It also has difficulty with large changes in appearance due to non-Lambertian reflectance and major scale changes. (Our color and gradient channels provide significant robustness to reflectance, but not enough for all cases.)

In the future, we will focus on these issues. The limitations arise from the algorithm for positioning particles, not from a fundamental limitation of the particle representation. We plan to explore more sophisticated occlusion localization and occlusion interpretation. We will also experiment with allowing slow changes in a particle's appearance over time, to permit larger reflectance and scale changes. Finally, by making our data and results available online, we hope others will explore the particle video problem.

References

[1] Peter Sand and Seth Teller. Particle Video: Long-Range Motion Estimation using Point Trajectories. In CVPR, to appear, 2006.
