| Research Abstracts Home | CSAIL Digital Archive | Research Activities | CSAIL Home |

![]()

|

Research

Abstracts - 2007

|

A Conditional Hidden Variable Model for Gesture SalienceJacob Eisenstein, Regina Barzilay & Randall DavisNon-verbal modalities such as gesture can contribute to the understanding

of natural language by both humans and machines. For example, a repeated

pointing gesture suggests a semantic correspondence between the accompanying

verbal utterances. Without the gesture, the speaker's meaning may be inaccessible

to machine or even human listeners. Yet not all hand movements are meaningful

gestures, and irrelevant hand movements may be confusing. The optimal

solution is to attend to gesture only when it is likely to be useful.

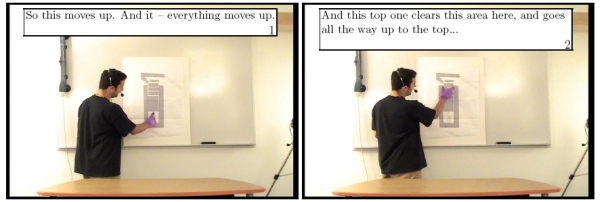

We present MethodOur approach takes the form of a conditionally trained model, with linear weights. However, our potential function also incorporates a hidden variable, governing whether gesture features are included. The hidden variable is associated with a set of "meta-features," which predict the relevance of gesture. For example, effortful hands movements away from the body center are likely to be relevant, while fidgety movements requiring little effort are not. Linguistic features are also relevant -- constructions that may be ambiguous, such as pronouns, are more likely to co-occur with meaningful gesture than fully-specified noun phrases [3]. The model is trained using labeled data for some linguistic task for which gestures are likely to contribute and improve performance. In learning to predict these labels, the system will also learn a model of gesture salience, so that it includes the gesture features only when they are helpful. In our evaluations, we train a system for coreference resolution, using visual features that characterize gestural similarity, since similar gestures may predict coreference. Our model and its training are described in more detail in [2]. ApplicationsConditional modality fusion has been shown to be successful for improving language understanding for both machines and humans. On the NLP side, we have shown that coreference resolution is improved when gesture features are included. Moreover, the contribution of the gesture features is 73% greater when they are combined using conditional modality fusion, as opposed to a naive concatenation of gesture and linguistic feature vectors. This improvement is shown to be statistically significant. In addition, the conditional modality fusion model has useful applications for human language comprehension. One method of summarizing video is to select keyframes that capture critical visual information not included in a textual transcript. An example is shown below:

We select keyframes that are judged by our model to be highly likely to contain an informative gesture. Again, this judgment is based on both linguistic and visual features. To evaluate this method, we compare the selected keyframes with ground truth annotations from a human rater. Keyframes selected from our conditional modality fusion method correspond better with ground truth than keyframes selected unimodally, according to commonly-used linguistic or visual features. More detail on this application will be available in a forthcoming publication [1]. Future WorkThe linguistics literature is rich with syntactic models of verbal language, but little is known about how gesture is organized. Thus, the supervised approaches that have been so successful with verbal language are inapplicable to gesture. We consider conditional modality fusion to be a first step towards characterizing the structure of gestural communication using hidden variable models. References:[1] Jacob Eisenstein, Regina Barzilay, and Randall Davis. Extracting Keyframes Using Linguistically Salient Gestures. Forthcoming. [2] Jacob Eisenstein and Randall Davis. Conditional Modality Fusion for Coreference Resolution. Submitted to Association for Computational Linguistics 2007. [3] Alissa Melinger. Gesture and communicative intention of the speaker. Gesture 4(2), pp. 119--141. 2002. |

||||

|