

Research Abstracts - 2007

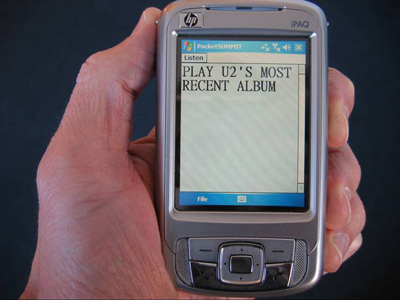

PocketSUMMIT: Speech Recognition for Small Devices

Lee Hetherington

Introduction

As technology improves, portable devices such as PDAs and mobile phones are becoming smaller and more computationally powerful. We wish to do more with these devices, yet the smaller form factor generally makes them more difficult to use. Speech interfaces may be able to harness the computational power and provide a more intuitive interface despite minimal screen and keyboard sizes.

Traditionally, the Spoken Language Systems Group's SUMMIT automatic speech recognition system has been developed to support research by providing a flexible framework for incorporating new ideas [1]. It was developed to run on Unix workstations with significant processing power and available memory, so a small footprint was never a priority. When confronted with small devices with modest memory and processing resources, it was clear we needed to simplify and rewrite SUMMIT from scratch. The result is PocketSUMMIT.

We initially targeted PocketPC and smart phone devices running Windows CE/Mobile, which typically have a 400–600MHz ARM processor, no floating-point hardware, and 32–64MB RAM. The lack of floating-point hardware meant that all significant computation needed to use fixed-point (integer) operations. With limited memory and typically slow memory access, we strove to push the footprint of PocketSUMMIT down to a few MB.

We already had a fixed-point acoustic front-end that could compute Mel-frequency cepstral coefficients (MFCCs) from a waveform [2]. In our baseline SUMMIT system, the front-end is a small fraction of total computation. Nearly half of total computation is spent evaluating Gaussian mixture acoustic models, and the other half is spent searching for the optimal word hypotheses. The next two sections focus on these two significant areas of computation and memory use. We used the Jupiter weather information access domain [3], with a vocabulary of about 2000 words, to benchmark progress.

Acoustic Modeling

A large fraction of computation in any speech recognition system is consumed evaluating acoustic models. Typically, such models are implemented using hidden Markov models (HMMs), where the distribution for each state is modeled using a mixture of diagonal Gaussians. Our baseline SUMMIT system often uses both segmental features (e.g., phoneme duration and MFCC averages over different regions of a phoneme) and diphone landmark features modeling transitions between phonemes [4]. To simplify modeling, we chose to initially support only the diphone landmark models, each of which is implemented with a two-state HMM. Our landmarks are hypothesized at a variable rate depending on the local rate of spectral change, averaging about 30ms between landmarks.

We chose to quantize the Gaussian parameters both to reduce memory footprint and to speed up Gaussian evaluation [5]. If we also quantize feature vectors, Gaussian density evaluation becomes table lookup. While vector quantization (VQ) could be used for a whole feature, mean, or variance vector, or parts thereof, we deemed that it would require too much computation, as well as too much space to store all of the VQ centroids or decision boundaries. Scalar quantization (SQ) is simpler and requires fewer parameters. Because our feature vectors are normalized or "whitened" to approximately zero mean and unit variance, we can use the same scalar quantizer across all 50 dimensions.

We chose to quantize Gaussian mean components with a 5-bit linear quantizer and variance components with a 3-bit non-linear quantizer, allowing a mean/variance pair to fit in a single byte. By using the same linear quantizer on the feature vector components as on the mean components, we can make use of a very small 8-bit lookup table to find the log probability density contribution for each of the 50 dimensions. The net result is that evaluating a single mixture component is reduced to summing the results of 50 table lookups.
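
The table-lookup evaluation described above can be sketched as follows. This is a minimal illustration, not the PocketSUMMIT implementation: the bit widths and the 50 dimensions come from the text, but the quantizer range, the eight variance levels, the fixed-point score scale, and all function names are assumptions made for the example. It also assumes one plausible reading of the "8-bit lookup table", namely an index formed from the 5-bit magnitude of the quantized feature-minus-mean difference and the 3-bit variance code.

```python
import math

MEAN_BITS = 5      # 5-bit linear quantizer for means and features (from the text)
VAR_BITS = 3       # 3-bit non-linear quantizer for variances (from the text)
NUM_DIMS = 50      # feature dimensions (from the text)

# Everything below is assumed for illustration only.
FEATURE_RANGE = 4.0                                      # clip whitened values to [-4, 4)
VARIANCES = [0.1, 0.2, 0.35, 0.5, 0.75, 1.0, 1.5, 2.5]   # hypothetical variance levels
SCORE_SCALE = 16                                         # integer score = 1/16 nat
STEP = 2 * FEATURE_RANGE / (1 << MEAN_BITS)              # linear quantizer step size


def quantize_linear(x):
    """Map a whitened value to a 5-bit index in 0..31, clipping out-of-range input."""
    idx = int(math.floor((x + FEATURE_RANGE) / STEP))
    return max(0, min((1 << MEAN_BITS) - 1, idx))


def pack(mean_idx, var_idx):
    """Pack a 5-bit mean index and a 3-bit variance index into one byte."""
    return (mean_idx << VAR_BITS) | var_idx


def build_table():
    """Build 256 integer log densities indexed by (|feature - mean| << 3) | var."""
    table = []
    for diff in range(1 << MEAN_BITS):
        for var_idx in range(1 << VAR_BITS):
            var = VARIANCES[var_idx]
            dist = diff * STEP
            logp = -0.5 * (math.log(2 * math.pi * var) + dist * dist / var)
            table.append(int(round(logp * SCORE_SCALE)))
    return table


TABLE = build_table()


def component_score(feature_idx, packed_params):
    """Sum one table lookup per dimension for a single diagonal Gaussian."""
    total = 0
    for f, p in zip(feature_idx, packed_params):
        mean_idx = p >> VAR_BITS
        var_idx = p & ((1 << VAR_BITS) - 1)
        total += TABLE[(abs(f - mean_idx) << VAR_BITS) | var_idx]
    return total
```

Because the features and means share one linear quantizer, the difference of their indices is itself a quantized distance, which is what lets a single small table serve every dimension and every Gaussian in this sketch.
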
32-bit operations can load and operate on four dimensions at a time, further speeding up execution. Finally, summing across mixture components involves fixed-point approximations to exp() and log().

How does this perform in terms of memory use, speed, and accuracy? Our baseline models, consisting of 1365 distinct models and 29958 Gaussians, consume 14MB and produce a word error rate (WER) of 9.8%. On a 2.4GHz Xeon processor utilizing SSE floating-point SIMD instructions, the baseline runs approximately 3 times faster than real-time. In comparison, our quantized models consume 1.6MB, produce a WER of 9.5%, and allow SUMMIT to run 5 times faster than real-time. The quantized models are much smaller, run considerably faster (even when compared on fast SSE floating-point hardware), and produce slightly better accuracy.

Decoding

Our baseline SUMMIT system produces a joint phone/word graph in two passes and uses a third pass to generate N-best lists. In the forward pass, pipelined with speech input, it computes the set of active (state, landmark) nodes and their best arrival scores using a dynamic programming beam search. A second pass, performed in the backward direction after the speech is complete, uses an A*-style search to generate a graph containing the most likely hypotheses. Typically, this graph is rescored with a trigram language model using finite-state transducer (FST) composition. The intermediate results produced by the forward pass may be quite large, depending on the vocabulary size.

In the interests of reduced memory footprint and better speed, the forward beam search in PocketSUMMIT builds a phone/word graph directly. A fixed pool of graph nodes and arcs is used; when it is exhausted, the graph is pruned to free up resources while keeping the most likely hypotheses. The second pass, consisting of an A* search, computes a large N-best list of word sequences, and these word sequences are rescored with a trigram.
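
The fixed-pool idea used in the forward pass can be illustrated with a much simplified sketch. It replaces the phone/word graph with a flat list of scored hypotheses, so it shows only the "prune when the pool is exhausted, keep the most likely" policy; the class name `HypothesisPool`, the `keep_fraction` parameter, and the choice to keep a fixed fraction are all assumptions, not details from PocketSUMMIT.

```python
import heapq


class HypothesisPool:
    """Fixed-capacity pool of scored hypotheses (higher score = more likely)."""

    def __init__(self, capacity, keep_fraction=0.5):
        self.capacity = capacity
        # Number of hypotheses that survive a pruning step (assumed policy).
        self.keep = max(1, int(capacity * keep_fraction))
        self.items = []  # list of (score, label) pairs

    def add(self, score, label):
        """Add a hypothesis, pruning first if the pool is exhausted."""
        if len(self.items) >= self.capacity:
            self.prune()
        self.items.append((score, label))

    def prune(self):
        """Free space by keeping only the best-scoring hypotheses."""
        self.items = heapq.nlargest(self.keep, self.items)
```

For example, with `HypothesisPool(4, 0.5)`, adding a fifth hypothesis first discards the two worst of the existing four. A real decoder would also have to keep the surviving graph consistent, for instance by dropping arcs that point to pruned nodes, which this sketch does not attempt.
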
The new search uses considerably less memory, runs much faster, and produces slightly better accuracy than the baseline SUMMIT search.

Besides the acoustic modeling components mentioned previously, the decoder also makes use of a recognition FST modeling context dependencies, phonological rules, pronunciations, and bigram language models. This FST is fully determinized and minimized, its weights are quantized to 5 bits, and it is then bit packed (with variable-sized arcs) down to 0.5MB from its original 4.7MB. The trigram language model is similarly compressed, with its scores (actually trigram minus bigram scores) quantized to only 3 bits and bit packed to 0.4MB from 1.2MB. The quantization and compression of the recognition FST and the trigram language model do not hurt accuracy, and despite the bit unpacking needed to access them, they further speed up recognition. Presumably, the compressed models fit better in processor caches, which more than makes up for the bit unpacking operations.

Putting It All Together

The model components for our Jupiter weather information domain, with a vocabulary of about 2000 words, come to a total of 1.7MB of static, memory-mapped files for the acoustic models, the recognition FST, and the trigram. (The acoustic models have been shrunk by another factor of two by using discriminative training based on a minimum classification error (MCE) criterion [6], with slight gains in accuracy.) The total dynamic memory use is approximately 1.5MB, including a minimal GUI for demonstration purposes. In terms of code, the entire recognizer fits in a 130kB DLL and consists of about 5,000 lines of C++. PocketSUMMIT runs the Jupiter weather domain at approximately real-time speed on a 400MHz ARM Windows Mobile smart phone.
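
The weight quantization and bit packing applied to the recognition FST and the trigram can be sketched as below. This is an illustration under stated assumptions rather than the format PocketSUMMIT uses: it applies a uniform quantizer and packs fixed-width codes, whereas the text describes variable-sized arcs, and the function names `quantize_weights`, `pack_bits`, and `unpack_bits` are hypothetical.

```python
def quantize_weights(weights, bits):
    """Uniformly quantize weights to 2**bits levels; returns (codes, lo, step)."""
    levels = (1 << bits) - 1
    lo, hi = min(weights), max(weights)
    step = (hi - lo) / levels if hi > lo else 1.0
    codes = [int(round((w - lo) / step)) for w in weights]
    return codes, lo, step


def pack_bits(codes, bits):
    """Pack fixed-width codes into bytes, least significant bits first."""
    acc = 0      # bit accumulator
    nbits = 0    # number of valid bits currently in the accumulator
    out = bytearray()
    for code in codes:
        acc |= code << nbits
        nbits += bits
        while nbits >= 8:
            out.append(acc & 0xFF)
            acc >>= 8
            nbits -= 8
    if nbits:
        out.append(acc & 0xFF)  # flush the final partial byte
    return bytes(out)


def unpack_bits(data, bits, count):
    """Recover `count` fixed-width codes from bytes written by pack_bits."""
    acc = int.from_bytes(data, "little")
    mask = (1 << bits) - 1
    return [(acc >> (i * bits)) & mask for i in range(count)]
```

With 5-bit codes, eight weights occupy 5 bytes instead of the 32 bytes needed for eight 32-bit floats, and each weight is recovered approximately as `lo + code * step`.
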

We have also configured PocketSUMMIT using relatively simple context-free grammars (CFGs) for voice dialing (e.g., "Please call Victor on his cell phone.") as well as for selecting music on a personal music device (e.g., "Play Sarah McLachlan's most recent album."). For the latter, we loaded the 330 artist names, 350 album names, and 2600 song titles contained in my iPod into the grammar. This represents nearly 3000 unique words, and yet it runs faster than real-time in under 2.5MB on a 400MHz ARM. We could certainly imagine building this type of speech input capability into small portable music devices.

Future Work

In our initial implementation of PocketSUMMIT, we removed many features of the baseline SUMMIT system. We are currently trying to implement some of these in the small-footprint framework. Top among these features is dynamic vocabulary: the ability to alter the vocabulary (or grammar) dynamically based on changing data (e.g., a changing list of song titles). This capability can also be used to simulate a large virtual vocabulary with much smaller active vocabularies in a multi-pass approach [7].

Initial experiments incorporating MCE-trained acoustic models have shown some degradation when quantizing them, even though ML-trained models show no degradation. The relatively large quantization errors are likely disturbing the fine adjustments in decision boundaries produced by MCE training, and thus it may be worthwhile to investigate a joint optimization of model parameters and quantization parameters. At the very least, the MCE discriminative training could be performed in the quantized parameter space.

Acknowledgments

This research was sponsored by the T-Party Project, a joint research program between MIT and Quanta Computer Inc., Taiwan. Paul Hsu was instrumental in putting together the Windows CE/Mobile graphical user interface and audio capture application used to demonstrate the PocketSUMMIT speech recognition engine.

References:

[1] L. Hetherington and M. McCandless. SAPPHIRE: An extensible speech analysis and recognition tool based on Tcl/Tk. In Proc. ICSLP, pp. 1942–1945, Philadelphia, Oct. 1996.

[2] L. S. Miyakawa. Distributed Speech Recognition within a Segment-Based Framework. S.M. thesis, MIT Department of Electrical Engineering and Computer Science, Jun. 2003.

[3] V. Zue, et al. JUPITER: A telephone-based conversational interface for weather information. In IEEE Trans. on Speech and Audio Processing, Vol. 8, No. 1, Jan. 2000.

[4] J. Glass. A probabilistic framework for segment-based speech recognition. In Computer Speech and Language:17, pp. 137–152, 2003.

[5] M. Vasilache. Speech recognition using HMMs with quantized parameters. In Proc. ICSLP, pp. 441–444, Beijing, Oct. 2000.

[6] E. McDermott and T. Hazen. Minimum classification error training of landmark models for real-time continuous speech recognition. In Proc. ICASSP, pp. 937–940, Montreal, May 2004.

[7] I. L. Hetherington. A multi-pass, dynamic-vocabulary approach to real-time, large-vocabulary speech recognition. In Proc. Interspeech, pp. 545–548, Lisbon, Sep. 2005.
