
CSAIL Research Abstracts - 2007


Spoken Dialogue Interaction with Mobile Devices

Stephanie Seneff, Mark Adler, Chao Wang & Tao Wu

Introduction

The mobile phone is rapidly transforming itself from a telecommunication device into a multi-faceted information manager that supports both communication among people and the manipulation of an increasingly diverse set of data types, stored both locally and remotely. As these devices continue to shrink in size while expanding in capability, the conventional GUI model will become increasingly cumbersome to use. What is needed, therefore, is a new generation of user interface architecture designed for the mobile information devices of the future. Speech and language technologies, perhaps in concert with pen-based gesture and a conventional GUI, will allow users to communicate through one of the most natural, flexible, and efficient modalities there is: their own voice. A voice-based interface will work seamlessly with small devices and will allow users to easily invoke local applications or access remote information. In this paper, we describe a calendar management application that demonstrates our vision of intuitive interfaces through which people communicate with their mobile phones via natural spoken dialogue. Realizing this vision requires many technological advances: we must go beyond speech recognition and synthesis to include language understanding and generation, as well as dialogue modeling. Another factor critical to the success of the system is the use of contextual information, not only to solve the tasks the user presents to the system, but also to improve the system's performance in the communication task itself. In the following sections, we first describe the calendar application. We then discuss contextual influences that our application can exploit for an improved user experience. We conclude with a summary and a discussion of our future plans.
Calendar Application

The calendar application enables a user to manage the calendar on a mobile phone through spoken interaction in English or Chinese. A user can query, add, delete, or change calendar entries by speaking with a dialogue system, which can engage the user in sub-dialogues to resolve ambiguities or conflicts, or to obtain confirmation. The system's capabilities are best illustrated by an example dialogue, shown in Figure 1.

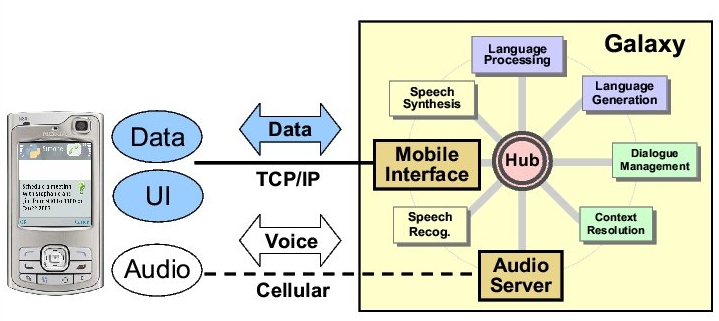

Figure 1: Example spoken dialogue between a user and the calendar management system.

Figure 2 shows the architecture of the system, which consists of two components: the Galaxy system [1] and a mobile device. The Galaxy system runs on a remote server, where the various technology components communicate with one another via a central hub. The mobile phone uses the voice channel for speech I/O and the data channel to transmit the information the Galaxy system needs to interpret queries. The user interacts with the client application on the device (i.e., the calendar application) primarily through spoken conversation. Speech is transmitted full duplex over the standard phone line, while application data, such as calendar entries stored on the phone or responses from Galaxy, are transmitted over the data channel.

Figure 2: System architecture.

The calendar application is built on human language technologies developed at MIT. Speech recognition is performed by the Summit landmark-based system, which uses finite-state transducer technology [2]. The Tina natural language understanding system [3] selects the appropriate recognizer hypothesis and interprets the user's query: a context-free grammar based on syntactic structure parses each query and transforms it into a hierarchical meaning representation, which is passed to the discourse module [4] to be augmented with the discourse history. A generic dialogue manager [5] processes the interpreted query according to an ordered set of rules and prepares a response frame encoding the system's reply; domain-specific information is provided to the dialogue manager in an external configuration file. The Genesis language generation system [6,7] converts the response frame into a response string. Finally, a speech synthesizer produces a response waveform, which is sent over the standard audio channel as the system's turn in the dialogue.
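The ordered-rule processing performed by the dialogue manager can be illustrated with a minimal Python sketch. It assumes a hypothetical meaning frame represented as a plain dictionary (the keys `action`, `title`, `start`, and `duration_min` are illustrative, not Galaxy's actual frame format) and first-match semantics, in which the first rule whose condition holds produces the response frame; the rule semantics of the dialogue manager in [5] may well differ. One rule detects a scheduling conflict, the kind of situation that would lead the calendar system into a confirmation sub-dialogue.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Callable, Optional

# Hypothetical meaning frame: a flat dict such as
# {"action": "add", "title": "Review", "start": datetime(...), "duration_min": 30}
Frame = dict


@dataclass
class Entry:
    """A calendar entry stored on the device."""
    title: str
    start: datetime
    end: datetime


def find_conflict(frame: Frame, calendar: list[Entry]) -> Optional[Entry]:
    """Return the first stored entry that overlaps the requested slot, if any."""
    start = frame["start"]
    # Assumed default duration of one hour when the user does not give one.
    end = start + timedelta(minutes=frame.get("duration_min", 60))
    for entry in calendar:
        # Half-open intervals [a, b) and [c, d) overlap iff a < d and c < b.
        if start < entry.end and entry.start < end:
            return entry
    return None


# A rule is (name, condition, action). Order matters: the first matching rule
# decides the reply, so more specific checks come before general ones.
Rule = tuple[str, Callable[[Frame, list[Entry]], bool],
             Callable[[Frame, list[Entry]], Frame]]

RULES: list[Rule] = [
    # No time given: ask for it (a sub-dialogue to obtain missing information).
    ("missing_time",
     lambda f, cal: f.get("action") == "add" and "start" not in f,
     lambda f, cal: {"reply": "request_time", "title": f.get("title")}),
    # Requested slot overlaps an existing entry: ask the user to confirm.
    ("conflict",
     lambda f, cal: f.get("action") == "add" and find_conflict(f, cal) is not None,
     lambda f, cal: {"reply": "confirm_conflict",
                     "conflict_with": find_conflict(f, cal).title}),
    # Otherwise propose the addition for confirmation.
    ("add",
     lambda f, cal: f.get("action") == "add",
     lambda f, cal: {"reply": "confirm_add", "title": f.get("title"),
                     "start": f["start"]}),
]


def dialogue_manager(frame: Frame, calendar: list[Entry],
                     rules: list[Rule] = RULES) -> Frame:
    """Apply the first rule whose condition holds and return its response frame."""
    for _name, condition, action in rules:
        if condition(frame, calendar):
            return action(frame, calendar)
    return {"reply": "not_understood"}


if __name__ == "__main__":
    cal = [Entry("Staff meeting", datetime(2007, 5, 3, 10), datetime(2007, 5, 3, 11))]
    req = {"action": "add", "title": "Review", "start": datetime(2007, 5, 3, 10, 30)}
    print(dialogue_manager(req, cal))
    # {'reply': 'confirm_conflict', 'conflict_with': 'Staff meeting'}
```

Because the rules are data rather than control flow, swapping in a different rule list changes the system's behavior without touching `dialogue_manager`. This loosely mirrors the idea of supplying domain-specific information in an external configuration file, although here the rules are hard-coded for brevity.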
In addition, when a proposed change to the calendar is being confirmed, a graphical display shows that change on the calendar. We have developed two versions of this system, one supporting interaction in English and the other in Mandarin Chinese.

Contextual Influences

With today's mobile devices, context can include any information about the environment or situation that is relevant to the interaction between a user and an application: the person, the location, the time, and so on. It is widely held in the pervasive computing community that enabling devices and applications to adapt to their physical and electronic environment would lead to a better user experience. A mobile phone is usually a personal device carried by a single user, and one of the great advantages of a system intended to serve only one user is the opportunity to personalize it to that particular user. We can therefore expect to monitor many aspects of the user's behavior and data and to adjust system parameters to better reflect the user's needs. There is already an extensive body of research on speaker adaptation of the acoustic models of a speech recognizer. Beyond acoustic models, we should also be able to train the language models to favor the patterns the user habitually uses. Personal data relevant to the application domain can also help with the speech recognition task. For example, a calendar application can reasonably assume that people already in the user's address book are likely participants in future meetings, so the recognizer should automatically augment its name vocabulary to include everyone in the address book. This idea can be extended to external sources as well, either by connecting to a corporate directory or, in a meeting environment, by including the attendees, since the current meeting may provide context for future ones.
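The address-book idea can be made concrete with a small Python sketch. It assumes, beyond what the text specifies, that the recognizer uses a class-based language model with a NAME class, and that each source of names (address book, meeting attendees, corporate directory) receives a hand-chosen weight; the function name `build_name_class`, the source labels, the example names, and the weights are all hypothetical. The sketch only computes the distribution over names; compiling it into the recognizer's finite-state transducers [2] is out of scope.

```python
from collections import Counter
from typing import Iterable


def build_name_class(sources: dict[str, tuple[Iterable[str], float]]) -> dict[str, float]:
    """Merge names from several sources into one normalized distribution
    P(name | NAME) for a (hypothetical) class-based language model.

    `sources` maps a source label to (names, weight). A name found in several
    sources accumulates the weight of each, so a person who is both in the
    address book and at the current meeting is favored most.
    """
    scores = Counter()
    for names, weight in sources.values():
        for name in names:
            # Normalize case and internal whitespace so duplicates merge.
            scores[" ".join(name.split()).lower()] += weight
    total = sum(scores.values())
    if scores and total <= 0:
        raise ValueError("source weights must be positive")
    return {name: score / total for name, score in scores.items()}


if __name__ == "__main__":
    # Hypothetical names and weights, chosen only for illustration.
    name_class = build_name_class({
        "address_book": (["Alice Chen", "Bob Lee"], 3.0),
        "meeting_attendees": (["Bob Lee", "Carol Diaz"], 2.0),
        "corporate_directory": (["Carol Diaz", "Dan Park"], 1.0),
    })
    for name, p in sorted(name_class.items(), key=lambda kv: -kv[1]):
        print(f"{name:12s} {p:.3f}")
```

Weighting the sources, rather than simply taking their union, lets address-book names dominate while still admitting names from a corporate directory; in practice such weights would presumably be tuned on data rather than fixed by hand.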
A further variation would extend the name vocabulary one level further, to include the address books of all attendees, subject to access policies.

Another interesting issue for calendar management is the reference time zone, which is normally omitted in conversation. If the user is on vacation in Paris, should the system assume that time references are relative to the Paris time zone, or should it instead prompt the user at the start of the conversation to clarify the intended time zone? In global corporations that rely on video and voice conference calls, time zone clarification is essential, and the time zone is usually stated explicitly. With global travel, however, should the system assume that a meeting time is local to wherever the user will be at the time of the meeting? In that case, the user's travel itinerary provides essential information for scheduling.

Summary and Future Work

In this paper, we described a calendar management system to exemplify the use of a speech interface as a natural means of controlling and communicating with mobile devices. We highlighted the many ways in which contextual information can be used to improve the effectiveness of spoken dialogue for mobile applications. Although a remote server-based solution remains a reasonable approach, local processing is preferable in many circumstances. Thus, in addition to continuing to refine our application, we are migrating from a server-based system toward a model in which the speech components reside on the mobile device. Our initial platform is the Nokia N800 Linux Internet tablet, which provides a larger, touch-sensitive screen. Running the speech components locally will allow us to incorporate local context more straightforwardly, since it eliminates the need for connectivity to a remote server. We will initially focus on the speech recognizer and the text-to-speech (TTS) components for two languages, Mandarin and English.

Acknowledgements

This research was supported by Nokia as part of the Nokia-MIT project.
References

[1] S. Seneff, E. Hurley, R. Lau, C. Pao, P. Schmid, and V. Zue, Galaxy-II: A reference architecture for conversational system development. In Proceedings of ICSLP, pp. 931-934, Sydney, Australia, December 1998.

[2] J. Glass, A probabilistic framework for segment-based speech recognition. Computer Speech and Language, 17:137-152, 2003.

[3] S. Seneff, Tina: A natural language system for spoken language applications. Computational Linguistics, 18(1):61-86, 1992.

[4] E. Filisko and S. Seneff, A context resolution server for the Galaxy conversational systems. In Proceedings of EUROSPEECH, pp. 197-200, Geneva, Switzerland, 2003.

[5] J. Polifroni and G. Chung, Promoting portability in dialogue management. In Proceedings of ICSLP, pp. 2721-2724, Denver, Colorado, 2002.

[6] L. Baptist and S. Seneff, Genesis-II: A versatile system for language generation in conversational system applications. In Proceedings of ICSLP, Vol. III, pp. 271-274, Beijing, China, 2000.

[7] B. Cowan, PLUTO: A preprocessor for multilingual spoken language generation. S.M. thesis, MIT Department of Electrical Engineering and Computer Science, February 2004.
