| Research Abstracts Home | CSAIL Digital Archive | Research Activities | CSAIL Home |

![]()

|

Research

Abstracts - 2007

|

Unsupervised Activity Perception by Hierarchical Bayesian ModelsXiaogang Wang, Xiaoxu Ma & Eric GrimsonIntroductionThe goal of this work is to understand activities and interactions in a complicated scene, e.g. a crowded traffic scene (see Figure 1), a busy train station or a shopping mall. In such scenes individual objects are often not easily tracked because of frequent occlusions among objects, and many different types of activities often occur simultaneously. Nonetheless, we expect a visual surveillance system to: (1) find typical single-agent activities (e.g. car makes a U-turn) and multi-agent interactions (e.g. vehicles stop waiting for pedestrians to cross the street) in this scene, and provide a summary; (2) label short video clips in a long sequence by interaction, and localize different activities involved in an interaction; (3) show abnormal activities, e.g. pedestrians cross the road outside the crosswalk; and abnormal interactions, e.g. jay-walking (people cross the road while vehicles pass by); (4) support queries about an interaction that has not yet been discovered by the system. Ideally, a sysmte would learn models of the scene to answer such questions in an unsupervised way.

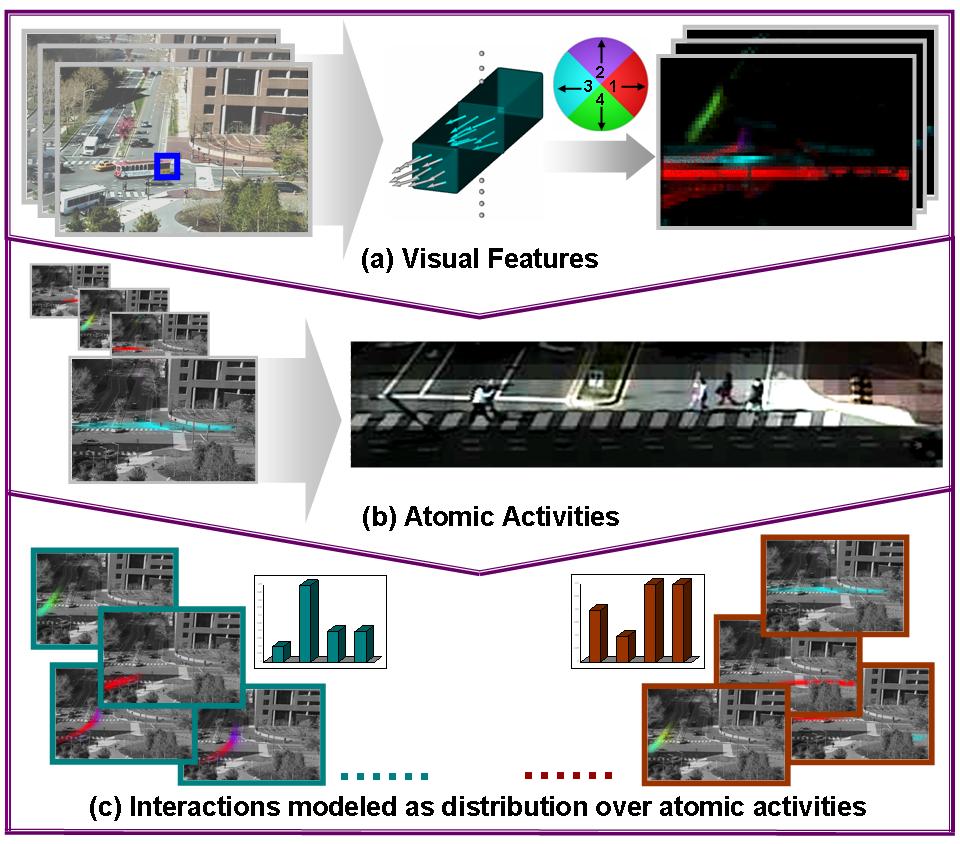

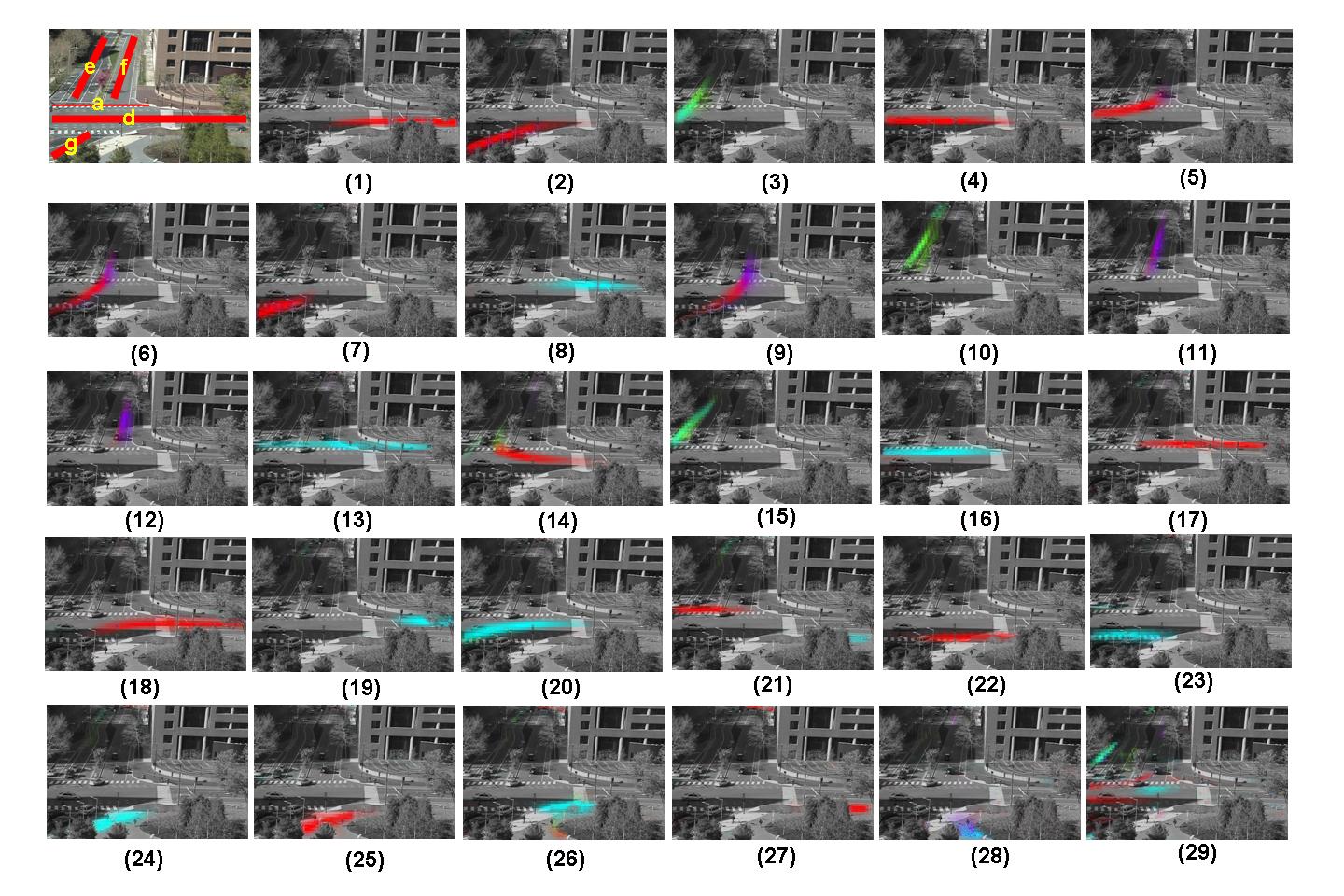

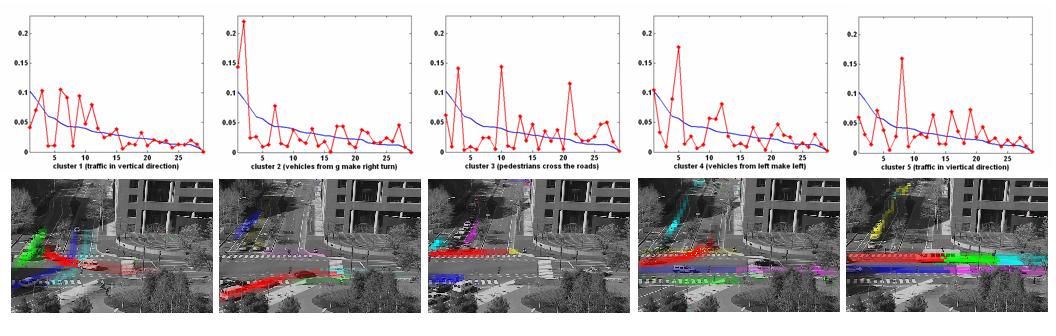

Figure 1. System diagram. Our ApproachTo answer these challenges for visual surveillance systems, we must determine: how to compute low-level visual features, and how to model activities and interactions. Our approach is shown in Figure 1. We compute local motion, which are moving pixels indexed by discretized location and direction, as our low-level visual features, avoiding difficult tracking problems in crowded scenes. We do not adopt global motion features, because in our case multiple activities occur simultaneously and we want to separate singleagent activities from interactions. Word-document analysis is then performed by quantizing local motion into visual words and dividing the long video sequence into short clips as documents. We assume that visual words caused by the same atomic activity often co-exist in video clips (documents) and that interaction is a combination of atomic activities occuring in the same clip. Given this problem structure, we employ a hierarchical Bayesian approach, in which atomic activities are modeled as distributions over low-level visual features, and interactions are modeled as distributions over atomic activities. Under this model, surveillance tasks like clustering video clips and abnormality detection have a nice probabilistic explanation. Because our data is hierarchical, a hierarchical model can have enough parameters to fit the data well while avoiding overfitting problems, since it is able to use a population distribution to structure some dependence into the parameters. A detailed description of this work can be found in [1]. ResultsUsing our hierarchical Bayesian mixture model, 29 atomic activities are discovered in an unsupervised way. Figure 3 plots their distributions over local motions. They reveal some interesting activities in the scene, such as pedestrians cross the road, vehicles stop, vehicles make turns, etc. At the same time, the short video clips are grouped into five clusters, which represent five different interactions: traffic in vertical direction, vehicles from g make rught turn, pedestrians cross the roads, vehicles from left make left turn, and traffic in horizontal direction. Figure 3 plots their distributions over 29 topics.

Figure 2. 29 discovered atomic activities in an unsupervised way, showing their distributions on motion features. The four colors represent four discretized motion directions

Figure 3. Short video clips are grouped into five clustered, which represent five different interactions. In the first row, we plot the interaction distributions over 29 atomic activities. In the second row, we show a vidoe clip as an example for each kind of interactions and mark the motions of the five largest atomic activities in that video clip. References:[1] Xiaogang Wang, Xiaoxu Ma and Eric Grimson. Unsupervised Activity Perception by Hierarchical Bayesian Models. In the Proceedings of International Conference on Computer Vison and Pattern Recognition, 2007. |

||||

|