
Research

Abstracts - 2007


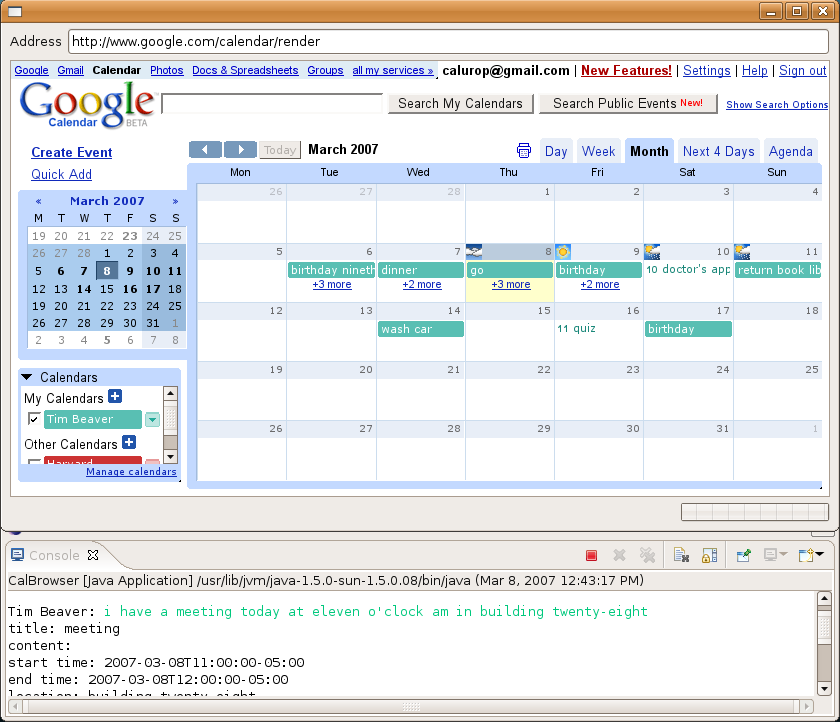

A Prototype Speech Calendar

Xin Sun & Seth Teller

Overview

Human-computer interaction via speech will become ubiquitous in the near future. After all, humans would like to communicate with machines the same way we naturally communicate with each other – by speaking. “A Prototype Speech Calendar,” started during fall 2006, has been conducted in the Robotics, Vision, and Sensor Networks Group (RVSN) at CSAIL under the supervision of Professor Seth Teller. The project focuses on the home domain and attempts to develop a calendar that family members could interact with via speech. The project entails implementing a conversational, natural language front end to Google Calendar. Natural language technologies have been developed at the Spoken Language Systems Lab (SLS), and this project specifically interfaces with the Summit speech server for voice recognition.

Details

Ideally, the calendar should function as follows:

Necessary actions include displaying, adding, editing, and deleting calendar events. The user should be able to load and save calendar contents as well as search for specific events. Eventually, the calendar will also have more advanced functionality, such as alerting users and synchronizing with mobile devices. A key goal of this project is continuous speech monitoring: the user should not need to push a button before giving a command. In addition, the calendar should be able to distinguish the speech of different users and access their individual data accordingly. The back-end framework should be optimized so that a tablet PC might be capable of running the system in a standalone fashion.
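The actions listed above can be illustrated with a minimal sketch. It is not the project's implementation: the project uses Google Calendar as its back end, whereas this example assumes a hypothetical in-memory store with JSON persistence. The names `Event`, `Calendar`, and the `owner` field (standing in for per-user data) are assumptions made for illustration only.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime


@dataclass
class Event:
    title: str
    start: str  # ISO 8601 timestamp, e.g. "2007-03-01T18:00"
    owner: str  # hypothetical: the family member the event belongs to


class Calendar:
    """Hypothetical in-memory store; the actual project talks to Google Calendar."""

    def __init__(self):
        self.events = []

    def add(self, title, start, owner):
        datetime.fromisoformat(start)  # raises ValueError on a malformed time
        event = Event(title, start, owner)
        self.events.append(event)
        return event

    def search(self, keyword, owner=None):
        # Case-insensitive title match, optionally restricted to one user.
        keyword = keyword.lower()
        return [e for e in self.events
                if keyword in e.title.lower()
                and (owner is None or e.owner == owner)]

    def edit(self, event, **changes):
        # Update named fields in place; reject unknown field names.
        for field, value in changes.items():
            if not hasattr(event, field):
                raise AttributeError(field)
            setattr(event, field, value)

    def delete(self, event):
        self.events.remove(event)

    def save(self, path):
        with open(path, "w") as f:
            json.dump([asdict(e) for e in self.events], f)

    def load(self, path):
        with open(path) as f:
            self.events = [Event(**d) for d in json.load(f)]
```

In a speech front end, a recognized utterance would presumably be parsed into one of these calls, with the identified speaker supplied as `owner`; that mapping is outside the scope of this sketch.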

Figure: Screenshot of current development with the GUI on top and the console transcript on the bottom.
