Recovering Intrinsic Images with Gaussian Conditional Random Fields

Marshall F. Tappen, William T. Freeman & Edward H. Adelson

What

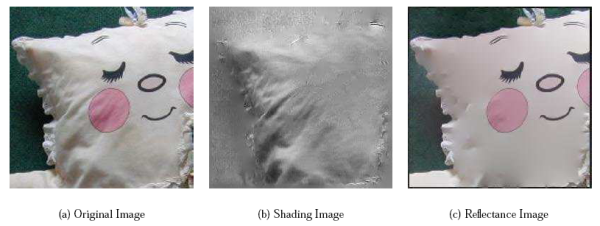

The intrinsic image decomposition [1] represents a scene as a collection of images, each describing one intrinsic characteristic of the scene. In this work, we focus on intrinsic images representing the shading and reflectance of the scene. Figure 1 shows an example of how an image should be decomposed into intrinsic images. The shading image, shown in Figure 1(b), represents the interaction between the shape of the surface and the illumination of the scene. The reflectance image, shown in Figure 1(c), represents the proportion of light reflected from the surface at each point. Our goal is to decompose a single real-world image into its shading and reflectance images.
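The shading/reflectance split is often modeled as a pixelwise product, image = shading × reflectance. This is an assumption; the text above does not state the model explicitly. Under it, taking logarithms turns the product into a sum, so every log-image derivative is the sum of a shading derivative and a reflectance derivative. The toy scanline below is a minimal sketch of that property. The names `compose` and `to_log` are hypothetical helpers.

```python
import numpy as np

def compose(shading, reflectance):
    """Assumed image formation model: observed image = shading * reflectance."""
    return shading * reflectance

def to_log(img, eps=1e-6):
    """Log transform, clamped to avoid log(0)."""
    return np.log(np.maximum(img, eps))

# Toy 1-D scanline: smoothly varying shading times piecewise-constant reflectance.
x = np.linspace(0.0, 1.0, 8)
shading = 0.5 + 0.5 * x                        # slow variation from shape and illumination
reflectance = np.where(x < 0.5, 0.8, 0.3)      # a single painted boundary
image = compose(shading, reflectance)

# In the log domain, each image derivative is a shading derivative plus a reflectance derivative.
d_img = np.diff(to_log(image))
d_s = np.diff(to_log(shading))
d_r = np.diff(to_log(reflectance))
```

Here `d_r` is nonzero only at the paint boundary, and the shading derivatives are small and smooth. This difference is what a derivative-based separation tries to exploit.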


Why

Every natural image results from a combination of the characteristics of the scene it depicts. Understanding the contents of an image requires some method of reasoning about these characteristics and how they affect the final image. One of the fundamental difficulties of vision is that these characteristics are mixed together in the observed image. Before higher-level tasks can be accomplished, the effects of these characteristics must be separated. For example, to recover the shape of the surface in Figure 1(a), the shading of the surface must be distinguished from the four squares that have been painted on it.

The intrinsic image decomposition facilitates analysis of the scene by separating its important intrinsic characteristics into distinct images. This is useful because images are a general representation of the scene that can easily be incorporated into higher-level analysis.

Progress

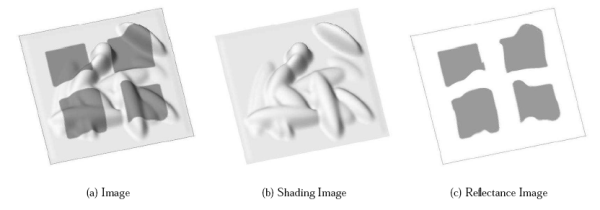

In our previous work [2], the shading and reflectance intrinsic images are found by classifying each image derivative as being caused either by shading or by a reflectance change. We found this approach to work well on real-world images. Figure 2 shows an example of the output of our system. The face and cheek patches painted on the pillow are correctly placed in the reflectance image, while the ripples in the pillow are placed in the shading image.
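The classify-then-reconstruct idea can be sketched roughly as follows. This is not the system from [2]. The sketch makes three assumptions: it works on log-image derivatives, each derivative's label is given as a boolean mask (here an oracle mask stands in for the trained classifiers), and each intrinsic image is recovered by least-squares integration of its assigned derivatives. All function names are hypothetical.

```python
import numpy as np

def derivative_operators(h, w):
    """Stack horizontal and vertical forward differences of a row-major h x w image."""
    n = h * w
    idx = np.arange(n).reshape(h, w)
    rows = []
    for r in range(h):                 # horizontal derivatives: I[r, c+1] - I[r, c]
        for c in range(w - 1):
            row = np.zeros(n)
            row[idx[r, c + 1]], row[idx[r, c]] = 1.0, -1.0
            rows.append(row)
    for r in range(h - 1):             # vertical derivatives: I[r+1, c] - I[r, c]
        for c in range(w):
            row = np.zeros(n)
            row[idx[r + 1, c]], row[idx[r, c]] = 1.0, -1.0
            rows.append(row)
    return np.array(rows)

def integrate(D, derivs, shape):
    """Least-squares image whose derivatives best match derivs.
    The result is defined only up to an additive constant; for a connected
    grid, lstsq returns the minimum-norm (zero-mean) solution."""
    x, *_ = np.linalg.lstsq(D, derivs, rcond=None)
    return x.reshape(shape)

def separate(log_image, is_reflectance):
    """Assign each log-image derivative to reflectance or shading, then integrate each set."""
    D = derivative_operators(*log_image.shape)
    g = D @ log_image.ravel()
    g_refl = np.where(is_reflectance, g, 0.0)
    g_shad = g - g_refl
    return integrate(D, g_shad, log_image.shape), integrate(D, g_refl, log_image.shape)

# Toy example: shading varies down the image, and a paint boundary lies between columns 2 and 3.
h, w = 5, 6
yy, xx = np.mgrid[0:h, 0:w]
log_s = 0.1 * yy
log_r = np.where(xx >= 3, np.log(0.3), np.log(0.8))
D = derivative_operators(h, w)
oracle = np.abs(D @ log_r.ravel()) > 1e-9     # stand-in for the trained classifiers
est_s, est_r = separate(log_s + log_r, oracle)
```

In this toy case, no derivative mixes shading and reflectance, so both images are recovered exactly up to an additive constant. In real images, a single hard label per derivative cannot represent such mixed changes. That restriction is one symptom of the limitation described next.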

This system is limited in two ways. First, while the classifiers are trained to differentiate between shading and reflectance changes, the system does not use training examples to evaluate the quality of the shading and reflectance images it produces. Second, the system can only work with classifications of derivatives, which fundamentally restricts it to one particular relationship between pixels.

To address these limitations, we are developing a probabilistic model of reflectance images. The model is constructed by requiring the reflectance image to conform to a number of local, linear constraints derived from the input image. The advantage of this model is that the system can now be trained to produce reflectance images that match ground-truth reflectance images. In addition, the model can express many different relationships between pixels.
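Because the constraints are linear and penalized quadratically, the model is Gaussian. The sketch below shows one way such a Gaussian conditional random field can be written. It is an assumption, not the exact formulation used in this work. Each constraint applies a filter to the reflectance image and asks the result to match a target derived from the input, with a per-pixel weight. The MAP estimate then solves a single linear system. The thresholding rule that produces the targets is purely hypothetical and stands in for the learned constraints; `gcrf_map` and `correlation_matrix` are illustrative names.

```python
import numpy as np

def correlation_matrix(kernel, n):
    """Matrix form of a 'valid' 1-D correlation of an n-sample signal with kernel."""
    k = len(kernel)
    F = np.zeros((n - k + 1, n))
    for i in range(n - k + 1):
        F[i, i:i + k] = kernel
    return F

def gcrf_map(n, constraints):
    """MAP estimate for a Gaussian CRF with quadratic energy
        E(x) = sum_j sum_i w_j[i] * ((F_j x)[i] - c_j[i]) ** 2,
    where each constraint is a tuple (kernel, targets c_j, weights w_j).
    Since E is quadratic, its minimizer solves A x = b with
    A = sum_j F_j^T W_j F_j and b = sum_j F_j^T W_j c_j."""
    A = np.zeros((n, n))
    b = np.zeros(n)
    for kernel, targets, weights in constraints:
        F = correlation_matrix(np.asarray(kernel, dtype=float), n)
        W = np.diag(np.asarray(weights, dtype=float))
        A += F.T @ W @ F
        b += F.T @ W @ np.asarray(targets, dtype=float)
    return np.linalg.solve(A, b)

# Toy log-domain scanline: gentle shading slope plus one reflectance step.
n = 10
log_image = 0.05 * np.arange(n) + np.where(np.arange(n) < 5, 0.0, -1.0)

# Hypothetical constraint rule: keep large log-derivatives as reflectance derivatives, zero the rest.
d = np.diff(log_image)
deriv_targets = np.where(np.abs(d) > 0.2, d, 0.0)

constraints = [
    ([-1.0, 1.0], deriv_targets, np.ones(n - 1)),   # derivative constraints from the input
    ([1.0], np.zeros(n), np.full(n, 1e-6)),         # weak pull toward 0 fixes the free offset
]
log_reflectance = gcrf_map(n, constraints)
```

Adding another relationship between pixels only means appending another `(kernel, targets, weights)` entry. In a trained model, the filters, targets, and weights would be chosen so that the output matches ground-truth reflectance.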

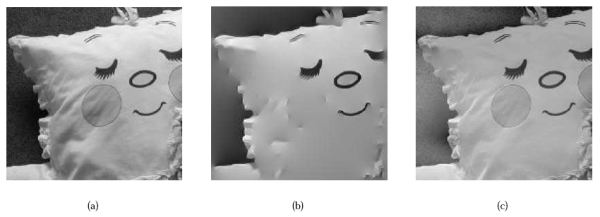

To use this model, the local, linear constraints that make up the model must be chosen. We have created an algorithm that learns these constraints from training data. In practice, the reflectance images produced by the new method typically contain much of the reflectance content that our old system misses. Figure 3 demonstrates this on the pillow image from Figure 2. Using only gray-scale information, the old system (Figure 3(b)) fails to detect the cheek patches, while the new system (Figure 3(c)) correctly recovers them.


Future

Training our system to produce good reflectance images currently involves a number of computationally expensive steps. We are researching methods for reducing the computation needed to learn the best model of reflectance images. We are also collecting a set of reflectance images taken from real-world images. These images will enable us to train our model on real images.

Research Support

This work was supported by an NDSEG fellowship to MFT, by a grant from the Nippon Telegraph and Telephone Corporation as part of the NTT/MIT Collaboration Agreement, and by a grant from Shell Oil.

References

[1] H. G. Barrow and J. M. Tenenbaum. Recovering intrinsic scene characteristics from images. In A. Hanson and E. Riseman, editors, Computer Vision Systems, pages 3-26. Academic Press, 1978.

[2] M. F. Tappen, W. T. Freeman, and E. H. Adelson. Recovering intrinsic images from a single image. In S. Becker, S. Thrun, and K. Obermayer, editors, Advances in Neural Information Processing Systems 15, pages 1343-1350. MIT Press, Cambridge, MA, 2003.

The Stata Center, Building 32 - 32 Vassar Street - Cambridge, MA 02139 - USA tel:+1-617-253-0073 - publications@csail.mit.edu (Note: On July 1, 2003, the AI Lab and LCS merged to form CSAIL.)