| Technical Reports | Work Products | Research Abstracts | Historical Collections |

![]()

|

Research

Abstracts - 2006

|

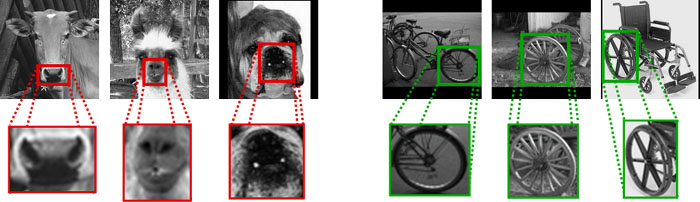

Describing Visual Scenes using Transformed Objects and PartsErik B. Sudderth, Antonio Torralba, William T. Freeman & Alan S. WillskyOverview and MotivationObject recognition systems use the features composing a visual scene to detect and categorize objects in images. We argue that multi-object recognition should consider the relationships between different object categories during the training process. This approach provides several benefits. At the lowest level, significant computational savings are possible if different categories share a common set of features. More importantly, jointly trained recognition systems can use similarities between object categories to their advantage by learning features which lead to better generalization. This transfer of knowledge is particularly important when few training examples are available, or when unsupervised discovery of new objects is desired. Furthermore, effective use of contextual knowledge can often improve performance in complex, natural scenes. We have developed a family of hierarchical generative models for objects, the parts composing them, and the scenes surrounding them [1-3]. Our models share information between object categories in three distinct ways. First, parts define distributions over a common low-level feature vocabularly, leading to computational savings when analyzing new images. In addition, and more unusually, objects are defined using a common set of parts. This structure leads to the discovery of parts with interesting semantic interpretations, and can improve performance when few training examples are available. Finally, object appearance information is shared between the many scenes in which that object is found. Our hierarchical models are adapted from topic models originally proposed for the analysis of text documents [4,5]. These models make the so-called "bag of words" assumption, in which raw documents are converted to word counts, and sentence structure is ignored. While it is possible to develop corresponding "bag of features" models for images [6], this neglects valuable information and reduces recognition performance. To consistently account for spatial structure, we augment hierarchies with transformation [7-8] variables describing the location of objects in particular images. We then use the Dirichlet process [5], a flexible tool from the nonparametric statistics literature, to allow uncertainty in the number of objects in each scene, and parts composing each object. This approach leads to robust models which have few parameters, and learning algorithms which can exploit heterogeneous, partially labeled datasets like those available from the LabelMe online database. Object Categorization with Shared PartsAs motivated by the images below, globally distinct object categories are often locally similar in appearance. We have developed probabilitic models which exploit this phenomenon by learning parts which are shared among multiple categories [1,3]. These shared parts allow information to be transferred among objects, and improve generalization when learning from few examples. Importantly, Dirichlet processes allow the number of parts, and the degree to which they are shared among objects, to be automatically determined.

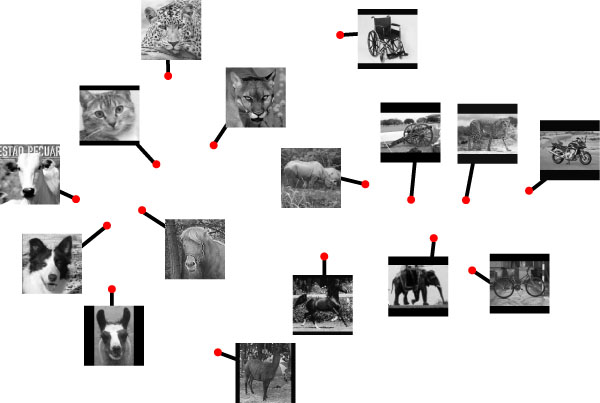

We tested our method on a dataset containing 16 visual categories: 7 animal faces, 5 animal profiles, and 4 wheeled objects. Given training images labeled only by these categories, our Monte Carlo learning algorithms automatically discovered these coarse groupings, and used them to define shared parts. The image below shows a 2D visualization of the learned model, in which distance roughly corresponds to the degree to which categories share parts. In addition to providing a semantically interesting representation, shared parts lead to improved recognition performance when learning from few training examples (for details, see [1,3]).

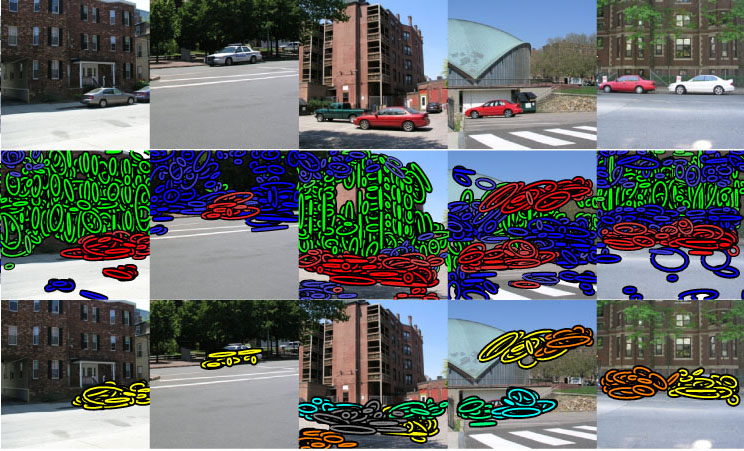

Interpreting Scenes with Multiple ObjectsIn multiple object scenes, we use spatial transformations to capture the positions of objects relative to the camera. This leads to a Transformed Dirichlet process model [2,3] which allows the number of objects to be automatically determined. The top row of the image below shows five street scenes used to test this method. The second row shows automatic segmentations of low-level features (ellipses) into three categories: cars (red), buildings (green), and roads (blue). The bottom row colors features according to different instances of the car category underlying the given scene interpretation. For this segmentation task, our approach improves substantially on simpler bag of features models [1-3].

AcknowledgmentsFunding provided by the National Geospatial-Intelligence Agency NEGI-1582-04-0004, the National Science Foundation NSF-IIS-0413232, the ARDA VACE program, and a grant from BAE Systems. References[1] E. B. Sudderth, A. Torralba, W. T. Freeman, and A. S. Willsky. Learning Hierarchical Models of Scenes, Objects, and Parts. In International Conf. on Computer Vision, Oct. 2005. [2] E. B. Sudderth, A. Torralba, W. T. Freeman, and A. S. Willsky. Describing Visual Scenes using Transformed Dirichlet Processes. In Neural Information Processing Systems, Dec. 2005. [3] E. B. Sudderth, A. Torralba, W. T. Freeman, and A. S. Willsky. Describing Visual Scenes using Transformed Objects and Parts. Submitted to Int. Journal of Computer Vision, Sept. 2005. [4] D. M. Blei, A. Y. Ng, and M. I. Jordan. Latent Dirichlet Allocation. Journal of Machine Learning Research 3, pp. 993-1022, 2003. [5] Y. W. Teh, M. I. Jordan, M. J. Beal, and D. M. Blei. Hierarchical Dirichlet processes. To appear in Journal of American Stat. Assoc., 2006. [6] J. Sivic, B. C. Russell, A. A. Efros, A. Zisserman, and W. T. Freeman. Discovering objects and their location in images. In International Conf. on Computer Vision, Oct. 2005. [7] E. G. Miller, N. E. Matsakis, and P. A. Viola. Learning from One Example Through Shared Densities on Transforms. In IEEE Conf. on Computer Vision and Pattern Recognition, 2000. [8] N. Jojic and B. J. Frey. Learning Flexible Sprites in Video Layers. In IEEE Conf. on Computer Vision and Pattern Recognition, 2001. |

||||

|